Customer health score

Founders don't lose revenue because a dashboard said "churn risk." They lose revenue because the warning came too late, or because the team didn't agree on what "at risk" meant. A customer health score is valuable when it creates early, shared clarity: which accounts need attention, why, and what to do next.

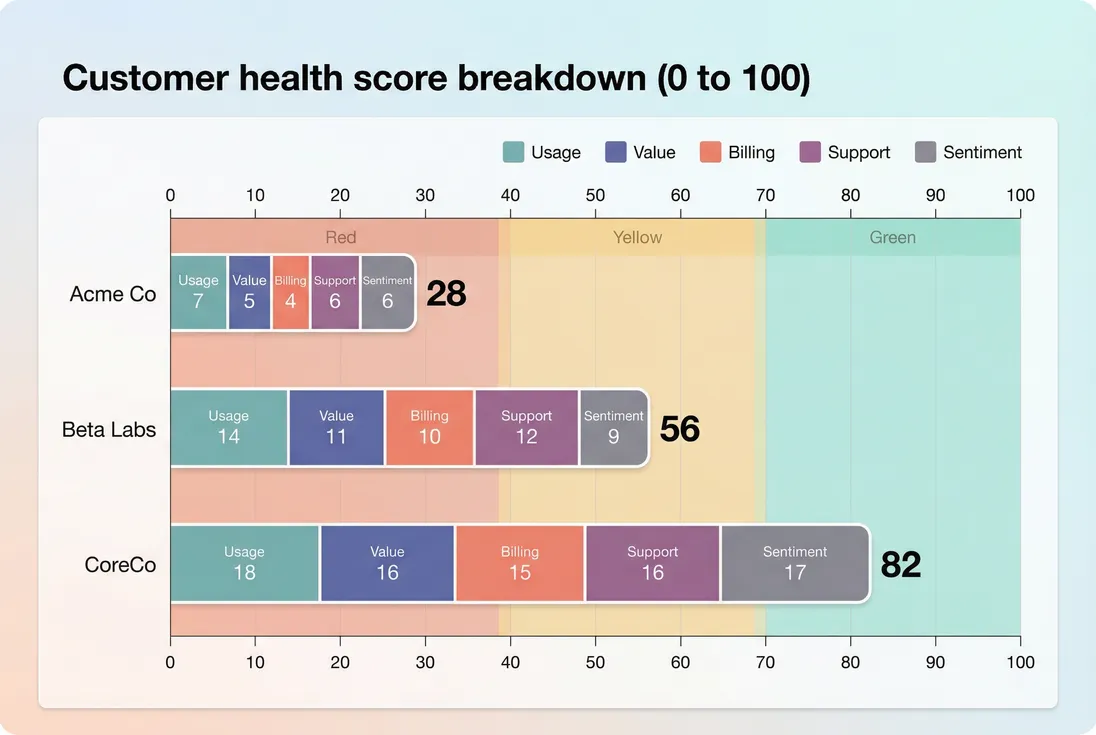

A customer health score is a single, repeatable number (often 0 to 100) that summarizes how likely an account is to renew and expand, based on leading signals like usage, value adoption, billing risk, support friction, and relationship sentiment.

What this metric reveals early

A good health score is not a vanity KPI. It is an operational signal that helps you:

- Prioritize attention across Customer Success, Sales, and Support when you have more accounts than time

- Forecast churn risk before it shows up in lagging metrics like Logo Churn or MRR Churn Rate

- Protect expansion by identifying accounts that look "active" but are not realizing value

- Catch preventable revenue loss, especially billing failures and unresolved friction

Where this gets real is in the gap between "retention reporting" and "retention control." Metrics like NRR (Net Revenue Retention) tell you what happened. Health score is meant to influence what happens next.

The Founder's perspective

If your weekly exec meeting includes churn surprises, your health score is either missing key inputs (billing, stakeholders, value events) or it is not tied to clear actions. The score is only as good as the decisions it drives.

What goes into a useful score

A health score is a composite. The mistake is thinking the composite is the product. The product is the shared definition of health that matches how customers actually succeed (and fail) with your SaaS.

Here are the five input categories that work across most SaaS businesses.

Usage and adoption signals

Usage is the most common input because it is often the earliest sign of drift. But "usage" should reflect your product's value path, not raw activity.

Good usage inputs:

- Activation milestones (onboarding completion, first key project created)

- Breadth (how many teams, workspaces, or departments adopted)

- Depth (frequency of core actions per week)

- Consistency (weeks active, not just spikes)

If you already track Feature Adoption Rate, those events typically become the building blocks for usage subscores.

Watch-outs:

- Usage can be seasonal (education, finance close cycles)

- Usage can be delegated (one operator drives value for a whole account)

- Usage can be decoupled from renewal (compliance products, infrastructure tools)

Value realization signals

Usage answers "are they in the product." Value signals answer "are they getting outcomes."

Examples:

- Reports delivered or exports consumed

- Integrations connected

- Time to first value event (related to Time to Value (TTV))

- Achieved business milestones (campaign launched, pipeline created, tickets reduced)

Value signals are especially important in enterprise, where a customer can log in weekly and still churn because nobody can prove ROI internally.

Billing and payment risk

Billing issues create churn that looks "mysterious" unless you track it explicitly. If you sell monthly, payment failures can be your fastest moving risk signal.

Common billing inputs:

- Failed payments, dunning status, days past due

- Invoice aging (conceptually similar to Accounts Receivable (AR) Aging)

- Recent downgrades or seat reductions (leading contraction)

Billing inputs are also how you catch involuntary churn earlier (see Involuntary Churn).

Support and friction

Support signals often predict churn when they represent blocked progress, not just "lots of tickets."

Better support inputs:

- Time to first response and time to resolution for high severity issues

- Reopened tickets

- Escalations

- Bug volume tied to core workflows

Be careful with raw ticket count. Power users often file more tickets because they are engaged.

Relationship and sentiment

This is the least "instrumented" category, and that's exactly why it matters for larger accounts.

Examples:

- Executive sponsor identified (yes or no)

- Champion change or stakeholder turnover

- QBR attendance

- CSM sentiment (structured, not free text)

- NPS or CSAT trend, if you collect it (see CSAT (Customer Satisfaction Score) and NPS (Net Promoter Score))

Sentiment should rarely dominate the score, but it can be the tiebreaker when usage is noisy.

How to calculate it

You want three things at the same time:

- Comparable inputs (usage events and billing days cannot be added directly)

- Stable interpretation (a score change should mean something consistent)

- Explainability (teams must know what to do)

A practical structure is: normalize each component into a subscore, weight them, then scale to 0 to 100.

Step 1: Turn raw metrics into subscores

Pick a small set of components, then normalize each into a 0 to 1 subscore (or 0 to 100, but 0 to 1 is easier for weighting). A simple min-max normalization works well early on:

subscore = clamp((value - low) / (high - low), 0, 1)

Where:

- low is the point where you consider the signal meaningfully bad

- high is the point where "more doesn't matter much"

- clamp prevents negative scores or scores above 1

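Example: if fewer than 3 weekly core actions is bad and 12 or more is great, then a customer with 6 weekly core actions gets a usage subscore of (6 - 3) / (12 - 3), or about 0.33: clear of the floor, but still in the lower part of the range.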
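The normalization above can be sketched in a few lines of Python. The function name `subscore` and its parameters are illustrative assumptions, not a prescribed implementation.

```python
def subscore(value: float, low: float, high: float) -> float:
    """Min-max normalize a raw metric into a 0-to-1 subscore.

    low:  point at or below which the signal is considered bad (maps to 0)
    high: point at or above which more doesn't matter much (maps to 1)
    """
    if high <= low:
        raise ValueError("high must be greater than low")
    scaled = (value - low) / (high - low)
    # Clamp so values outside [low, high] cannot go negative or exceed 1.
    return max(0.0, min(1.0, scaled))


# Thresholds from the example: 3 weekly core actions is bad, 12 is great.
usage = subscore(6, low=3, high=12)
print(round(usage, 2))  # 0.33
```

For signals where lower is better, such as days past due, one option is to normalize the same way and then use `1 - subscore(...)` so that 1 still means healthy.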

Step 2: Weight the subscores

Start with weights you can defend operationally, then validate them later. A common first pass is heavier weight on usage and value, lighter on sentiment.

If you sell annual contracts, you may downweight billing (fewer payment events) and upweight relationship and value. If you sell monthly SMB, billing might deserve more weight because it is both fast and predictive.
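As a rough sketch of the weighting step, the snippet below combines subscores into a 0 to 100 score with a weighted average. The two weight sets are hypothetical and only illustrate how a monthly SMB motion and an annual enterprise motion might differ; they are not recommended values.

```python
def health_score(subscores: dict[str, float], weights: dict[str, float]) -> float:
    """Combine 0-to-1 subscores into a 0-to-100 score using a weighted average."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted = sum(weights[name] * subscores[name] for name in weights)
    # Dividing by the total weight keeps the result on 0-100 even if the
    # weights do not sum exactly to 1.
    return 100 * weighted / total_weight


# Hypothetical weights for two sales motions (illustrative only).
monthly_smb = {"usage": 0.30, "value": 0.20, "billing": 0.30, "support": 0.10, "sentiment": 0.10}
annual_ent = {"usage": 0.25, "value": 0.30, "billing": 0.10, "support": 0.15, "sentiment": 0.20}

# Hypothetical account with a weak billing subscore.
account = {"usage": 0.8, "value": 0.6, "billing": 0.2, "support": 0.9, "sentiment": 0.5}
print(round(health_score(account, monthly_smb), 1))  # 56.0
print(round(health_score(account, annual_ent), 1))   # 63.5
```

The same account scores lower under the monthly weights because billing carries more of the total, which is the effect the weighting choice is meant to produce.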

Step 3: Add "hard stops" sparingly

Many teams add rules like:

- If account is 30 days past due, cap score at 20

- If there is an open severity one incident, cap score at 40

Hard stops are useful when a single issue truly overrides everything else. Use them sparingly, because a cap that lingers after the issue is fixed hides recovery: an account that resolves its payment problem should rebound immediately.
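One way to express the two example rules above is as caps applied after the weighted score is computed. This is a minimal sketch; the function and parameter names are assumptions.

```python
def apply_hard_stops(score: float, days_past_due: int, open_sev1: bool) -> float:
    """Cap a 0-to-100 score while an overriding condition is currently true."""
    capped = score
    if days_past_due >= 30:
        capped = min(capped, 20)  # 30+ days past due: cap at 20
    if open_sev1:
        capped = min(capped, 40)  # open severity one incident: cap at 40
    return capped


print(apply_hard_stops(85, days_past_due=45, open_sev1=False))  # 20
print(apply_hard_stops(85, days_past_due=0, open_sev1=True))    # 40
print(apply_hard_stops(85, days_past_due=0, open_sev1=False))   # 85
```

Because the caps are derived from the current state on every recalculation rather than stored, the score returns to its underlying value as soon as the condition clears.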

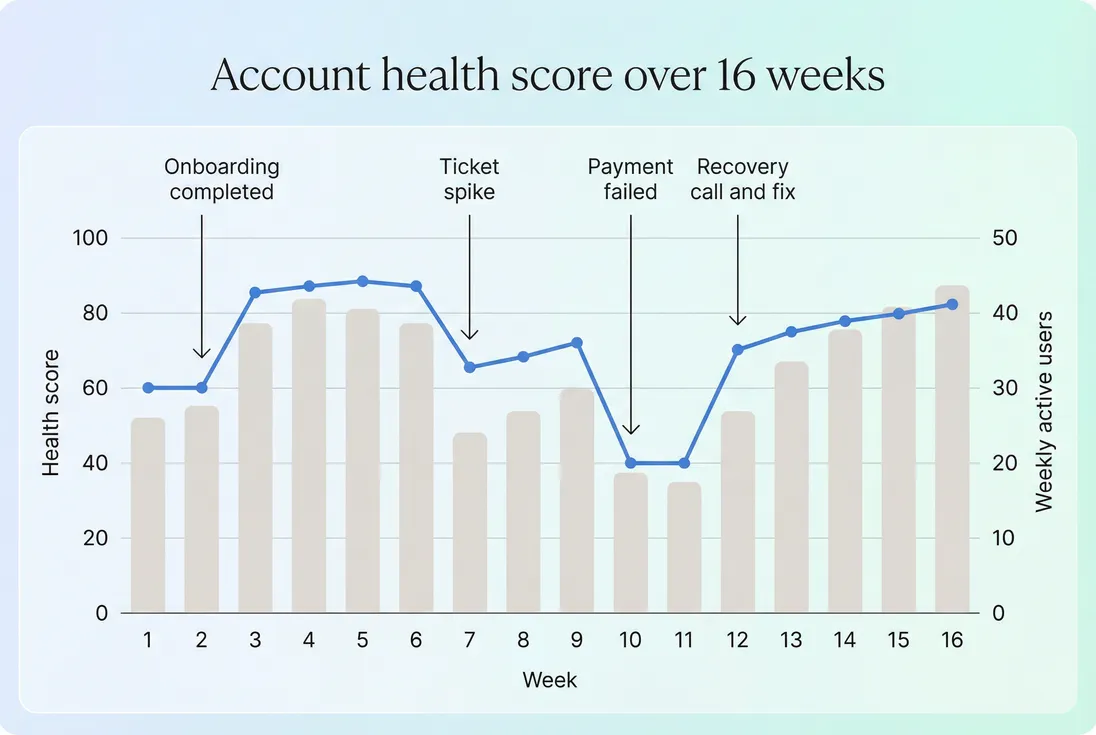

Step 4: Decide the time window

The score depends heavily on the lookback window:

- 7 day windows react fast but are noisy

- 28 day windows are stable but can lag

- Renewal window views (90 to 180 days before renewal) are often necessary for enterprise

You can keep one core score and add a renewal specific view for accounts nearing renewal.
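To see the tradeoff between windows, here is a small sketch that averages the same daily activity over 7 and 28 days. The data is made up and `trailing_mean` is a hypothetical helper.

```python
def trailing_mean(daily_counts: list[float], window_days: int) -> float:
    """Average of the most recent `window_days` entries (list is oldest first)."""
    if window_days <= 0:
        raise ValueError("window_days must be positive")
    recent = daily_counts[-window_days:]
    return sum(recent) / len(recent) if recent else 0.0


# Hypothetical account: 4 core actions per day for three weeks, then a silent week.
daily = [4] * 21 + [0] * 7
print(trailing_mean(daily, 7))   # 0.0
print(trailing_mean(daily, 28))  # 3.0
```

The 7 day view shows the drop to zero immediately, while the 28 day view still looks moderately active. Neither is wrong; they answer different questions.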

The Founder's perspective

The right time window is the one your team can act on. If your CSM cycle time to intervene is two weeks, a daily score that whipsaws is entertainment, not control.

How to interpret changes

A health score is most dangerous when it is treated as absolute truth. Interpretation should be grounded in three comparisons.

Compare to the account's own baseline

A drop from 92 to 76 can be more important than a steady 62, depending on your product. Look at:

- Rate of change (how fast it moves)

- Duration (how long it stays low)

A good operating rule: trend plus duration beats a single point.
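A minimal sketch of "trend plus duration", assuming you keep one score per period for each account. The threshold, lookback, and function name are illustrative choices.

```python
def trend_and_duration(scores: list[float], threshold: float, lookback: int) -> tuple[float, int]:
    """Return (change over the last `lookback` periods, current streak below threshold).

    `scores` is ordered oldest to newest, one entry per scoring period.
    """
    if not scores:
        raise ValueError("scores must not be empty")
    # Compare the latest score with the score `lookback` periods earlier
    # (or the oldest available score if history is shorter).
    start = scores[max(0, len(scores) - 1 - lookback)]
    change = scores[-1] - start
    # Count consecutive most-recent periods spent below the threshold.
    streak = 0
    for s in reversed(scores):
        if s >= threshold:
            break
        streak += 1
    return change, streak


weekly = [92, 90, 85, 76, 68, 66]
print(trend_and_duration(weekly, threshold=70, lookback=4))  # (-24, 2)
```

Here the account has lost 24 points in four weeks and has spent the last two weeks below 70, which is a stronger signal than any single reading.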

Compare within a segment

A score of 55 might be normal for customers in a seasonal segment, or catastrophic for others. Segment by:

- Plan tier or pricing model (see Per-Seat Pricing and Usage-Based Pricing)

- Customer size or ACV (see ACV (Annual Contract Value))

- Lifecycle stage (onboarding vs mature)

If you do not segment, you will end up penalizing the "quiet but satisfied" cohorts and missing the "active but unhappy" ones.
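One simple way to compare within a segment is to look at each account's gap to its segment median rather than the raw score. The sketch below uses invented accounts and segment names.

```python
from collections import defaultdict
from statistics import median


def gap_to_segment_median(accounts: list[dict]) -> dict[str, float]:
    """Map account name to (score minus the median score of its segment)."""
    by_segment = defaultdict(list)
    for a in accounts:
        by_segment[a["segment"]].append(a["score"])
    medians = {seg: median(vals) for seg, vals in by_segment.items()}
    return {a["name"]: a["score"] - medians[a["segment"]] for a in accounts}


# Hypothetical data: the same raw score of 55 in two different segments.
accounts = [
    {"name": "A", "segment": "seasonal", "score": 55},
    {"name": "B", "segment": "seasonal", "score": 50},
    {"name": "C", "segment": "seasonal", "score": 60},
    {"name": "D", "segment": "always_on", "score": 55},
    {"name": "E", "segment": "always_on", "score": 85},
    {"name": "F", "segment": "always_on", "score": 90},
]
gaps = gap_to_segment_median(accounts)
print(gaps["A"], gaps["D"])  # 0 -30
```

Account A sits exactly at its segment's median, while account D is 30 points below its peers despite having the same raw score.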

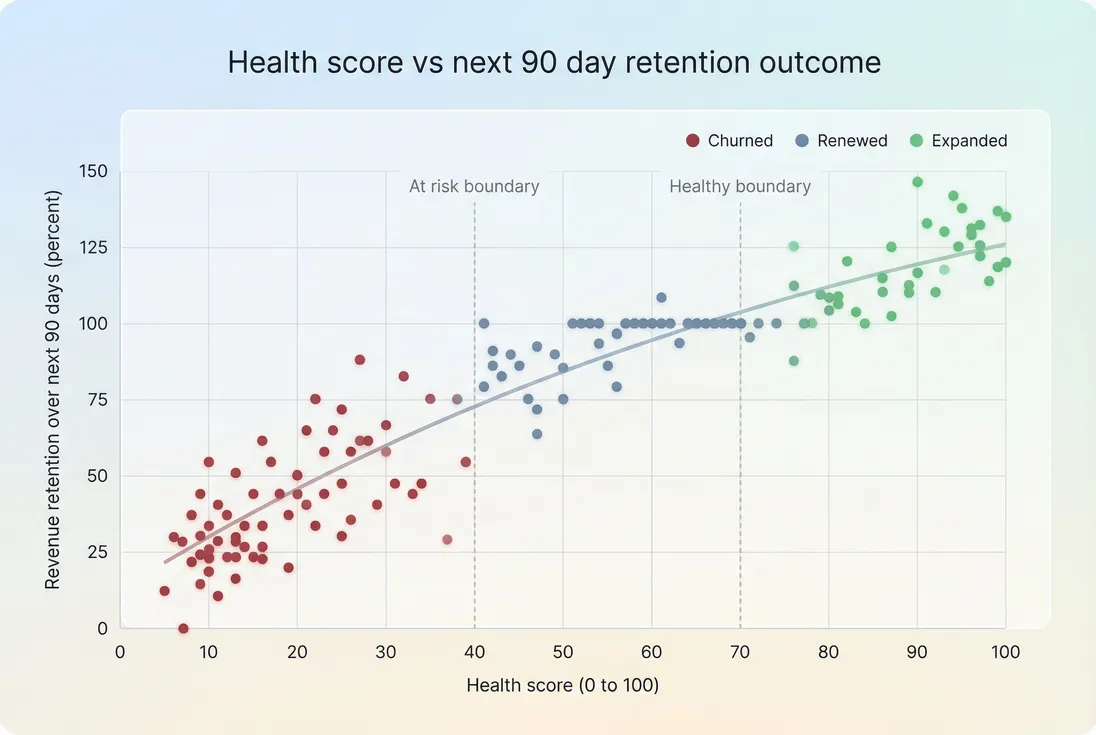

Compare to actual retention outcomes

Your score is only as credible as its connection to outcomes like:

- Renewal rate (see Renewal Rate)

- Revenue retention (see GRR (Gross Revenue Retention) and NRR (Net Revenue Retention))

- Churn events and reasons (see Churn Reason Analysis)

The easiest validation: bucket accounts by score band and measure future churn and expansion. You are looking for separation, not perfection.
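The bucketing step can be sketched as follows. The band cut points (40 and 70) and the sample records are assumptions for illustration; each record pairs a score at a snapshot date with whether the account churned in the window you chose.

```python
from bisect import bisect_right

BAND_EDGES = [40, 70]                      # assumed cut points; adjust to your thresholds
BAND_LABELS = ["0-39", "40-69", "70-100"]


def churn_rate_by_band(records: list[tuple[float, bool]]) -> dict[str, float]:
    """records: (score at snapshot, churned within the chosen window)."""
    totals = [0] * len(BAND_LABELS)
    churned = [0] * len(BAND_LABELS)
    for score, did_churn in records:
        i = bisect_right(BAND_EDGES, score)  # index of the band containing the score
        totals[i] += 1
        churned[i] += int(did_churn)
    # Skip empty bands to avoid dividing by zero.
    return {label: churned[i] / totals[i] for i, label in enumerate(BAND_LABELS) if totals[i]}


# Invented sample, not real results.
records = [(25, True), (30, True), (35, False), (50, False), (55, True),
           (60, False), (65, False), (80, False), (90, False), (95, False)]
for label, rate in churn_rate_by_band(records).items():
    print(label, round(rate, 2))
```

If the churn rates across bands come out close to each other, the score is not separating accounts and the inputs or weights need another look.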

How founders operationalize it

The score is a prioritization engine. It becomes valuable when you connect it to owners, playbooks, and dollars.

Prioritize by risk times value

A simple approach is to treat "at risk ARR" as a queue, not a report: which accounts below your threshold represent the most revenue exposure.

If you already track ARR (Annual Recurring Revenue) or MRR (Monthly Recurring Revenue), combine them with the score to rank work:

- Highest revenue and lowest health first

- Fastest deteriorating accounts next

- Long tail handled via scaled playbooks

This is also where founders should notice customer concentration. A single low health whale can dominate the quarter (see Customer Concentration Risk).
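A sketch of the at risk ARR queue is below. The exposure formula, ARR multiplied by (1 minus score over 100), is one assumed way to express "risk times value"; the account names and numbers are made up.

```python
def at_risk_queue(accounts: list[dict], threshold: float = 40.0) -> list[dict]:
    """Accounts below the threshold, ordered by revenue exposure (largest first)."""
    queue = []
    for a in accounts:
        if a["score"] < threshold:
            # Assumed exposure measure: more ARR and lower health both raise it.
            exposure = a["arr"] * (1 - a["score"] / 100)
            queue.append({**a, "exposure": exposure})
    return sorted(queue, key=lambda a: a["exposure"], reverse=True)


accounts = [
    {"name": "Whale Co", "arr": 240_000, "score": 35},
    {"name": "Mid Co", "arr": 60_000, "score": 20},
    {"name": "Small Co", "arr": 12_000, "score": 10},
    {"name": "Happy Co", "arr": 300_000, "score": 88},
]
for a in at_risk_queue(accounts):
    print(a["name"], round(a["exposure"]))
```

In this example the whale tops the queue even though its score is the highest of the three at risk accounts, because its ARR dominates the exposure.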

Attach component specific playbooks

A single generic "check in" playbook trains your team to do activity, not recovery. Map interventions to the failing component:

- Usage drop: re-onboard, retrain, fix workflow friction, realign success criteria

- Value weak: deliver an ROI artifact, build an internal business case, run a milestone plan

- Billing risk: fix payment method, resolve invoicing, tighten dunning process

- Support friction: fast escalation, root cause fix, confirm resolution with customer

- Relationship risk: rebuild champion map, schedule exec alignment, document mutual plan

Your goal is not "raise the score." Your goal is "remove the driver," and let the score rise as a side effect.
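The mapping from failing component to playbook can be as simple as a lookup keyed by the weakest subscore. This is a hedged sketch: the keys and the "lowest subscore is the driver" rule are assumptions, and the playbook text is condensed from the list above.

```python
PLAYBOOKS = {
    "usage": "Re-onboard, retrain, fix workflow friction, realign success criteria",
    "value": "Deliver an ROI artifact, build a business case, run a milestone plan",
    "billing": "Fix payment method, resolve invoicing, tighten dunning process",
    "support": "Fast escalation, root cause fix, confirm resolution with customer",
    "relationship": "Rebuild champion map, schedule exec alignment, document mutual plan",
}


def pick_playbook(subscores: dict[str, float]) -> tuple[str, str]:
    """Return (driver, playbook) for the component with the lowest subscore."""
    driver = min(subscores, key=subscores.get)
    return driver, PLAYBOOKS[driver]


# Hypothetical account whose billing subscore has collapsed.
account = {"usage": 0.7, "value": 0.6, "billing": 0.1, "support": 0.8, "relationship": 0.5}
driver, playbook = pick_playbook(account)
print(driver)    # billing
print(playbook)  # Fix payment method, resolve invoicing, tighten dunning process
```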

The Founder's perspective

A health score without playbooks becomes a weekly debate about whether a customer is really at risk. A health score with playbooks becomes a production line: diagnose, assign, act, verify recovery.

Use it to improve the product, not just CS

Aggregate drivers across accounts:

- If many accounts go red due to the same workflow, you have a product adoption problem.

- If support friction drives health down repeatedly, you have reliability or usability debt (see Technical Debt and Uptime and SLA).

- If "value" subscores lag for one segment, your positioning or onboarding may be mismatched.

This is where health score connects to cohort learning. Pair it with Cohort Analysis to see whether newer cohorts reach "healthy" faster than older ones.
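To aggregate drivers, you can count which component is weakest across at risk accounts, grouped by segment. The structure of `red_accounts` below is an assumption, and the data is invented.

```python
from collections import Counter


def driver_counts(red_accounts: list[dict]) -> Counter:
    """Count (segment, weakest component) pairs across at-risk accounts."""
    counts = Counter()
    for account in red_accounts:
        subscores = account["subscores"]
        weakest = min(subscores, key=subscores.get)
        counts[(account["segment"], weakest)] += 1
    return counts


red_accounts = [
    {"segment": "smb", "subscores": {"usage": 0.2, "value": 0.5, "support": 0.7}},
    {"segment": "smb", "subscores": {"usage": 0.1, "value": 0.4, "support": 0.6}},
    {"segment": "enterprise", "subscores": {"usage": 0.7, "value": 0.2, "support": 0.5}},
]
print(driver_counts(red_accounts).most_common(1))  # [(('smb', 'usage'), 2)]
```

A driver that keeps appearing for one segment is a candidate for a product or onboarding fix rather than another round of account level outreach.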

Tie it to renewal execution

For annual contracts, health score is most useful when it becomes a renewal timeline tool:

- 180 days out: validate stakeholders and success plan

- 90 days out: confirm value proof and expansion path

- 30 days out: remove blockers and finalize procurement

The score is not the renewal forecast; it is a way to focus the renewal effort where it will change the outcome.
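The timeline above can be turned into a small helper that tells you which step applies to an account today. Treating the three checkpoints as windows (180 to 91, 90 to 31, and 30 to 0 days out) is an assumption made for this sketch.

```python
from datetime import date


def renewal_stage(renewal_date: date, today: date) -> str:
    """Map days until renewal to the matching step of the renewal timeline."""
    days_out = (renewal_date - today).days
    if days_out < 0:
        return "past renewal date"
    if days_out <= 30:
        return "remove blockers and finalize procurement"
    if days_out <= 90:
        return "confirm value proof and expansion path"
    if days_out <= 180:
        return "validate stakeholders and success plan"
    return "not yet in renewal window"


# Hypothetical account renewing on 30 June 2025, checked on 15 April 2025 (76 days out).
print(renewal_stage(date(2025, 6, 30), today=date(2025, 4, 15)))
# confirm value proof and expansion path
```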

When it breaks

Most health scores fail for predictable reasons. Here are the big ones, and how to fix them.

It measures activity, not value

If your score is mostly logins and sessions, you will miss accounts that are "busy but not winning." Fix: promote value realization signals, and make your usage measures reflect the core value path, not generic activity.

It is not calibrated to your churn window

A score that predicts churn "eventually" is not operationally useful. Fix: pick a target prediction window (often 60 to 120 days) and validate against that. If you care about renewals, use a renewal window view.

It is not segmented

A single global model is usually wrong across SMB, mid market, and enterprise. Fix: segment weights and thresholds by motion, or build separate models. Validate against segment level Logo Churn and NRR (Net Revenue Retention).

It gets gamed

If CSM compensation or reviews are tied to score, people will optimize the score rather than the customer outcome (for example, pushing low value actions that boost usage). Fix: tie incentives to retention outcomes and verified milestones, not the composite number.

It becomes stale

Products, pricing, and customers change. Your score will drift. Fix: review separation quarterly. If the low band is no longer meaningfully worse than the high band, revisit inputs, weights, and thresholds.

The Founder's perspective

A health score is a model, not a fact. Treat it like you treat pricing: set a baseline, test it against reality, then revise. The companies that win are not the ones with a perfect score, but the ones that iterate their score as the business evolves.

A simple starting template

If you are starting from zero, the goal is not sophistication. The goal is a score that is explainable and directionally correct.

Starter components and weights

Use 5 components, each normalized to 0 to 1.

| Component | What it captures | Typical weight |
|---|---|---|
| Usage | Core workflow frequency and consistency | 0.30 |
| Value | Key outcome events and milestones | 0.25 |
| Billing | Past due risk, failed payments | 0.20 |
| Support | Blockers, severity, unresolved issues | 0.15 |
| Sentiment | Champion strength, survey trend, CSM judgment | 0.10 |

Then set first pass thresholds:

- 70 to 100 (healthy): scaled engagement, expansion discovery

- 40 to 69 (watch): diagnose component drops, targeted nudges

- 0 to 39 (at risk): owner assigned, explicit recovery plan
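Putting the starter weights and thresholds together might look like the sketch below. The weights and band boundaries come from the template above; the function name, the rounding, and the sample account are assumptions.

```python
import math

STARTER_WEIGHTS = {"usage": 0.30, "value": 0.25, "billing": 0.20, "support": 0.15, "sentiment": 0.10}
# Sanity check: the starter weights should sum to 1.
assert math.isclose(sum(STARTER_WEIGHTS.values()), 1.0)


def starter_band(subscores: dict[str, float]) -> tuple[int, str]:
    """Return (rounded 0-to-100 score, band label) using the starter template."""
    score = 100 * sum(STARTER_WEIGHTS[k] * subscores[k] for k in STARTER_WEIGHTS)
    if score >= 70:
        label = "healthy"
    elif score >= 40:
        label = "watch"
    else:
        label = "at risk"
    return round(score), label


# Hypothetical account: billing is clean, but usage and value are soft.
account = {"usage": 0.5, "value": 0.4, "billing": 1.0, "support": 0.6, "sentiment": 0.5}
print(starter_band(account))  # (59, 'watch')
```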

Make it actionable in one meeting

In your weekly retention meeting, you should be able to answer:

- Which accounts are red that matter financially (by ARR or MRR)?

- What component is driving each red account?

- What is the next action and who owns it?

- Did last week's actions move the driver and the score?

If you cannot answer those four, the score is not yet an operating tool.

Validate with real retention metrics

Within your first month, run a simple backtest:

- Compare score bands vs churn and downgrades

- Compare score bands vs expansion (upsells tend to cluster in healthy accounts)

- Review mismatches and add missing signals

Use retention reporting as the ground truth: Customer Churn Rate, MRR Churn Rate, and GRR (Gross Revenue Retention). The score should help you change those numbers, not replace them.

If you want one guiding principle: a customer health score is only "good" if it changes who your team talks to this week, and what they do in those conversations.

Frequently asked questions

How do I know if my health score is working?

Backtest it. Bucket accounts into score bands (for example 0 to 39, 40 to 69, 70 to 100) and measure future outcomes like churn, downgrade, renewal rate, and expansion within a fixed window (often 60 to 120 days). A useful score creates clear separation: the low band churns far more than the high band.

What is a good customer health score?

There is no universal benchmark because inputs differ by product and segment. A practical starting point is green at 70 to 100, yellow at 40 to 69, red at 0 to 39. Then adjust thresholds until each band maps to a meaningful business action and a measurable difference in renewal and churn outcomes.

Should every segment use the same health score?

Usually not. SMB health tends to be usage and billing driven, while enterprise health is often stakeholder and value realization driven. Create segment specific models or at least segment specific weighting and thresholds by plan, ACV, or sales motion. Validate separately using segment level retention metrics like NRR and logo churn.

How often should the score be recalculated?

Recalculate on a cadence that matches how quickly risk emerges and how quickly you can respond. Many SaaS teams update daily for product usage and billing signals, and weekly for support and sentiment inputs. For enterprise renewals, add a stronger renewal window view that tightens focus inside 90 to 180 days of renewal.

What should I do when an account's score drops?

Don't start with generic outreach. First diagnose which components fell: usage, value, billing, support friction, or relationship risk. Then run a targeted playbook tied to that component, with a clear owner and next step. Track whether the score recovers and whether the account renews or expands afterward.