
Churn reason analysis

Churn is expensive—but "our churn rate is 3%" doesn't tell you what to fix. Founders win by knowing why customers leave, which reasons are growing, and which ones threaten the most revenue. That's what churn reason analysis is for: it turns churn from a lagging metric into a prioritized worklist.

Churn reason analysis is the practice of categorizing cancellations and downgrades into a consistent set of reasons, then measuring the share and impact of each reason over time and across segments (by customer count and by revenue).

This pairs naturally with your core churn and retention metrics like Customer Churn Rate, Logo Churn, MRR Churn Rate, and NRR (Net Revenue Retention). Those tell you how much you're losing; churn reason analysis tells you what to do next.

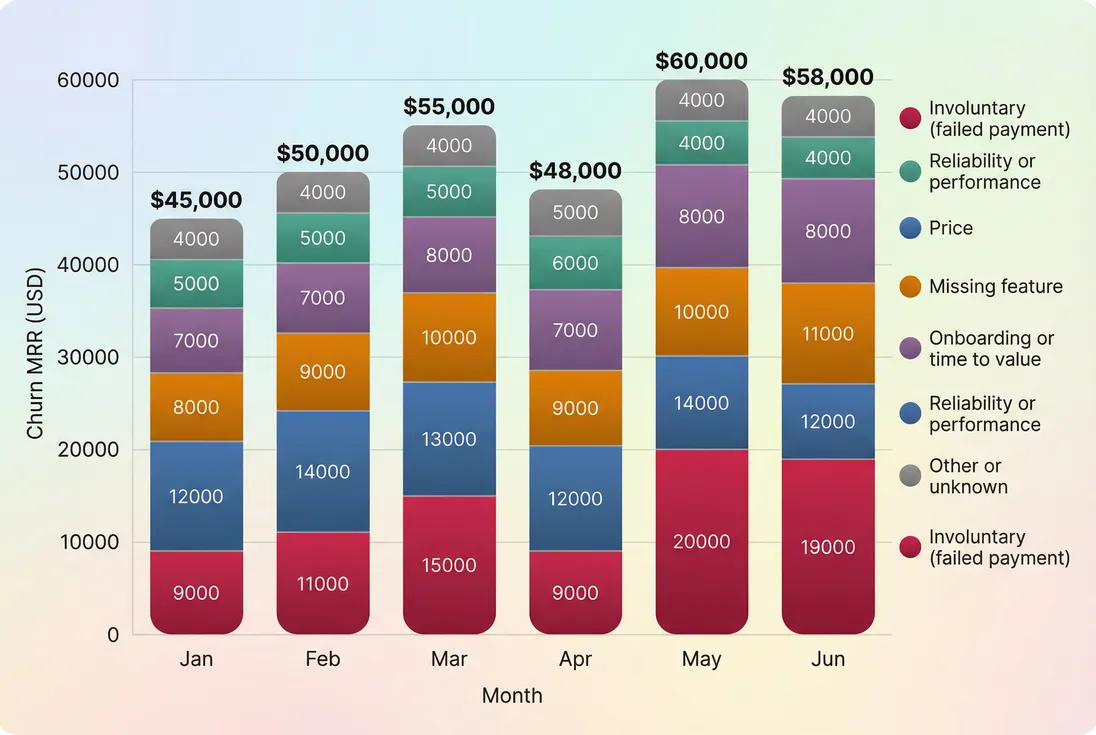

[Monthly churn MRR split by reason shows whether churn is rising because of fixable product and value issues or operational issues like failed payments.]

What churn reason analysis reveals

Most churn dashboards answer "how bad is it?" Churn reason analysis answers:

- What is driving churn right now

- Which reasons are concentrated in specific segments

- Whether churn is controllable (and how fast)

- Where to invest: product, pricing, onboarding, support, or billing ops

A key point: churn reasons are rarely evenly distributed. In many SaaS businesses, two or three reasons drive most churn MRR. Your job is to find those reasons reliably, then reduce them with targeted changes.

The Founder's perspective

If churn reason analysis doesn't change what you ship, how you price, or how you onboard in the next 30 days, you're collecting trivia. The output should be a short list of decisions: what to stop doing, what to fix, and which segment to avoid or reprice.

How to calculate reason impact

There's no universal "churn reason metric" like MRR. Instead, you build a small set of reason impact views that are consistent month to month.

Step 1: define what counts as churn

Be explicit about the events you include:

- Logo churn events: an account fully cancels.

- Revenue churn events: MRR decreases from cancellations and downgrades (often tracked alongside Contraction MRR).

- Involuntary churn events: service stops due to failed payment (see Involuntary Churn).

The reason taxonomy should work for cancellations and downgrades. If downgrades are common, don't ignore them—many "missing feature" or "price" issues show up as contraction before cancellation.
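One way to make these definitions explicit is to classify each MRR decrease in code. The sketch below is a minimal illustration, not a standard: the `MrrChange` record shape and the `failed_payment` flag are assumptions about what a billing export might contain.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ChurnEventType(Enum):
    CANCELLATION = "cancellation"  # account fully cancels (logo churn)
    DOWNGRADE = "downgrade"        # MRR decreases but the account stays
    INVOLUNTARY = "involuntary"    # service stops due to failed payment


@dataclass
class MrrChange:
    # Hypothetical record shape; adapt field names to your billing data.
    account_id: str
    mrr_before: float
    mrr_after: float
    failed_payment: bool = False


def classify_event(change: MrrChange) -> Optional[ChurnEventType]:
    """Return the churn event type, or None if MRR did not decrease."""
    if change.mrr_after >= change.mrr_before:
        return None  # flat or expansion: not a churn event
    if change.mrr_after == 0:
        # Full loss of MRR: the billing flag separates involuntary from voluntary.
        if change.failed_payment:
            return ChurnEventType.INVOLUNTARY
        return ChurnEventType.CANCELLATION
    return ChurnEventType.DOWNGRADE
```

Every event that returns a type here should then receive exactly one primary reason from the taxonomy.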

Step 2: create a reason taxonomy

A practical starter taxonomy (mutually exclusive, one primary reason per event):

- Involuntary (failed payment)

- Price

- Missing feature

- Onboarding or time to value

- Reliability or performance

- Support or service quality

- Switching to competitor

- Customer business changed (shutdown, budget cut)

- Security or compliance gap (B2B)

- Other or unknown

You can tailor this by business model:

- PLG products often need sharper "activation" and "time to value" categories.

- Enterprise products often need "security, compliance, procurement" categories.

Step 3: compute shares by logos and by MRR

At minimum, track reason mix in two ways:

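Reason share by churned customers (logos):

$$\text{Logo share}_r = \frac{\text{churned customers with primary reason } r}{\text{total churned customers}}$$

Reason share by churned MRR (revenue impact):

$$\text{MRR share}_r = \frac{\text{churned MRR with primary reason } r}{\text{total churned MRR}}$$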

Why both matter:

- Logo view highlights onboarding issues and low-end mismatch.

- MRR view highlights enterprise risk and budget-driven churn.
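As a rough sketch, both shares can be computed from the same list of churn events. The event tuples and reason IDs below are made-up sample data, and the function name is only illustrative.

```python
from collections import defaultdict

# Hypothetical churn events for one month: (account_id, primary_reason, churned_mrr)
events = [
    ("a1", "price", 49.0),
    ("a2", "price", 49.0),
    ("a3", "onboarding", 99.0),
    ("a4", "security_compliance", 1200.0),
]


def reason_shares(events):
    """Return {reason: (logo_share, mrr_share)} for a list of churn events."""
    logos = defaultdict(int)
    mrr = defaultdict(float)
    for _account, reason, churned_mrr in events:
        logos[reason] += 1
        mrr[reason] += churned_mrr
    total_logos = sum(logos.values())
    total_mrr = sum(mrr.values())
    if total_logos == 0 or total_mrr == 0:
        return {}  # nothing to report for an empty or zero-MRR month
    return {r: (logos[r] / total_logos, mrr[r] / total_mrr) for r in logos}


for reason, (logo_share, mrr_share) in reason_shares(events).items():
    print(f"{reason:22s} logos {logo_share:6.1%}  MRR {mrr_share:6.1%}")
```

In this sample, "price" is half of the churned logos but a small slice of churned MRR, which is the kind of gap the two views are meant to expose.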

Here's a simple way to interpret mismatches:

| Pattern | What it usually means | What founders do |
|---|---|---|
| High logo share, low MRR share | Many small accounts leaving | Fix onboarding, tighten ICP, adjust low-tier packaging |
| Low logo share, high MRR share | A few big accounts leaving | Executive save plays, roadmap focus, reduce concentration risk |
| High in both | Systemic issue | Treat as top company priority |

To connect this back to financial outcomes, review churn reason mix alongside MRR (Monthly Recurring Revenue) movements and retention metrics like GRR (Gross Revenue Retention) and Net MRR Churn Rate.

Step 4: trend it over time (and annotate)

Churn reason analysis becomes useful when you can answer: "What changed?"

Common drivers of changes in reason mix:

- Pricing changes, packaging, discounts (see Discounts in SaaS)

- A new competitor, or a competitor's pricing change

- Reliability incidents (uptime, performance regressions)

- A shift in acquisition channel or target segment

- Billing failures rising (card updater gaps, dunning flows)

If you can't tie a reason spike to a plausible business event, assume your classification quality is drifting.
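A minimal sketch of a trend view with annotations might look like this. The months, amounts, and the annotation text are invented for illustration; the idea is simply to keep business events next to the reason mix so a spike can be checked against them.

```python
from collections import defaultdict

# Hypothetical events: (month "YYYY-MM", primary_reason, churned_mrr)
events = [
    ("2024-01", "price", 3000.0),
    ("2024-01", "involuntary", 1000.0),
    ("2024-02", "price", 2000.0),
    ("2024-02", "involuntary", 2000.0),
]

# Hypothetical annotations: business events that could explain a mix change.
annotations = {"2024-02": "Card updater outage"}


def monthly_mrr_share(events):
    """Return {month: {reason: share of that month's churned MRR}}, months sorted."""
    by_month = defaultdict(lambda: defaultdict(float))
    for month, reason, churned_mrr in events:
        by_month[month][reason] += churned_mrr
    trend = {}
    for month in sorted(by_month):
        total = sum(by_month[month].values())
        trend[month] = (
            {r: v / total for r, v in by_month[month].items()} if total else {}
        )
    return trend


for month, shares in monthly_mrr_share(events).items():
    note = annotations.get(month, "")
    line = ", ".join(f"{r} {s:.0%}" for r, s in sorted(shares.items()))
    print(month, line, f"[{note}]" if note else "")
```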

What patterns founders should look for

The goal isn't perfect truth. The goal is decision-grade signal.

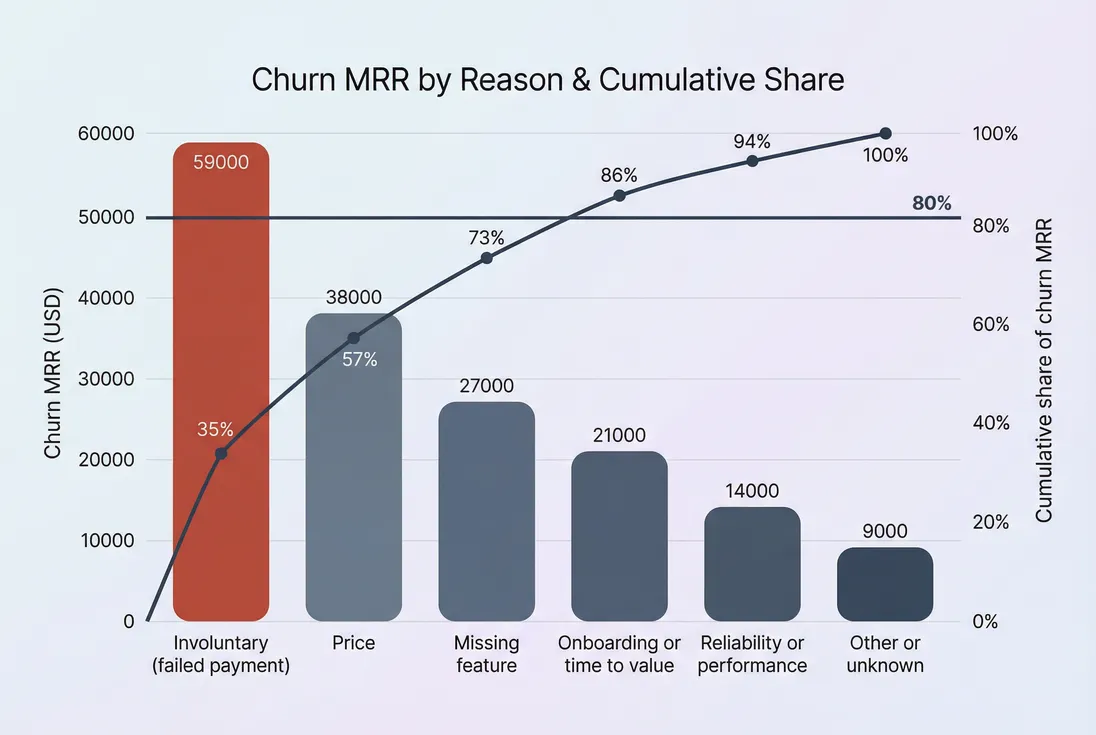

Pareto: which reasons drive most loss

Most teams benefit from a monthly Pareto view: reasons sorted by churn MRR, with a cumulative percentage line. It forces prioritization.

[A Pareto view makes churn reasons actionable by showing the few categories that drive most churn MRR and should get prioritized attention.]
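The Pareto table itself is a sort plus a running total. Here is a small sketch, with hypothetical churn MRR figures per reason:

```python
def pareto(churn_mrr_by_reason):
    """Return rows of (reason, churn_mrr, cumulative_share), largest reason first."""
    total = sum(churn_mrr_by_reason.values())
    rows, running = [], 0.0
    ordered = sorted(churn_mrr_by_reason.items(), key=lambda kv: kv[1], reverse=True)
    for reason, mrr in ordered:
        running += mrr
        rows.append((reason, mrr, running / total if total else 0.0))
    return rows


# Hypothetical churn MRR for one month, keyed by reason ID.
example = {
    "price": 6000.0,
    "security_compliance": 17500.0,
    "involuntary": 12500.0,
    "onboarding": 9000.0,
    "other": 5000.0,
}

for reason, mrr, cumulative in pareto(example):
    print(f"{reason:22s} {mrr:>10,.0f}  cumulative {cumulative:.0%}")
```

Reading down the cumulative column shows how many reasons you need to address to cover most of the loss.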

Mix shifts: reason share moving, not just totals

A trap: churn MRR can stay flat while the reason mix deteriorates.

Example:

- Total churn MRR is steady at $50k/month.

- "Involuntary" grows from 15% to 35%.

- That is often a fixable ops problem (dunning, payment retries), not product-market fit.

Similarly:

- "Missing feature" growing inside one segment usually points to a packaging gap or positioning mismatch in that segment, not a mandate to "build everything."
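To catch mix shifts when totals are flat, compare reason shares between two periods and flag the ones that moved. This is a sketch only: the 5-point threshold is an arbitrary assumption, and every share below except the "involuntary" move from 15% to 35% is invented.

```python
def mix_shift(prev_shares, curr_shares, min_points=0.05):
    """Return {reason: change in share} for reasons that moved by at least min_points."""
    shifts = {}
    for reason in set(prev_shares) | set(curr_shares):
        delta = curr_shares.get(reason, 0.0) - prev_shares.get(reason, 0.0)
        if abs(delta) >= min_points:
            shifts[reason] = delta
    return shifts


# Total churn MRR is the same in both periods; only the mix changes.
previous = {"involuntary": 0.15, "price": 0.40, "missing_feature": 0.45}
current = {"involuntary": 0.35, "price": 0.38, "missing_feature": 0.27}

for reason, delta in sorted(mix_shift(previous, current).items()):
    print(f"{reason}: {delta:+.0%}")
```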

Segment concentration: where reasons cluster

Churn reasons are most valuable when segmented. Common cuts:

- Plan / tier (ties to ASP (Average Selling Price) and ARPA (Average Revenue Per Account))

- Customer age or tenure (early churn vs late churn)

- Cohort (see Cohort Analysis)

- Industry (especially B2B)

- Seats or usage band (for per-seat or usage-based models)

- Acquisition channel (self-serve vs sales-led)

A practical pattern many founders see:

- First 30 to 90 days churn: onboarding, time to value, wrong ICP.

- Later churn: price-to-value, missing enterprise features, security, competitive displacement.
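A simple segment cut can be sketched as "top reason per segment by churned MRR". The segment names and amounts are hypothetical; any of the cuts listed above could be used as the segment key.

```python
from collections import defaultdict

# Hypothetical events: (segment, primary_reason, churned_mrr)
events = [
    ("tenure_0_90d", "onboarding", 400.0),
    ("tenure_0_90d", "price", 150.0),
    ("tenure_90d_plus", "security_compliance", 2400.0),
    ("tenure_90d_plus", "price", 600.0),
]


def top_reason_by_segment(events):
    """Return {segment: (top_reason, share of the segment's churned MRR)}."""
    totals = defaultdict(lambda: defaultdict(float))
    for segment, reason, churned_mrr in events:
        totals[segment][reason] += churned_mrr
    result = {}
    for segment, by_reason in totals.items():
        segment_total = sum(by_reason.values())
        reason, mrr = max(by_reason.items(), key=lambda kv: kv[1])
        result[segment] = (reason, mrr / segment_total if segment_total else 0.0)
    return result


for segment, (reason, share) in sorted(top_reason_by_segment(events).items()):
    print(f"{segment}: {reason} ({share:.0%} of segment churn MRR)")
```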

The Founder's perspective

I care less about the global churn reason chart and more about "what is the top churn reason for the segment we're trying to grow next quarter." If the answer is different, our roadmap and CS motions should be different.

MRR-weighted vs logo-weighted disagreements

When the two views disagree, don't average them—investigate.

Concrete scenario:

- 40 churned customers last month.

- 18 selected "Price" (45% logo share).

- But those 18 were mostly $49/month plans, so only 12% of churn MRR.

- Meanwhile, "Security or compliance" is only 2 customers (5% logos) but 35% of churn MRR.

Founder takeaway: price work might reduce noise churn and support load, but security work protects growth and enterprise credibility.
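To make this kind of disagreement easy to spot, you could label each reason with the matching pattern from the mismatch table in Step 3. In this sketch, the 25% cutoff for "high" is an assumption to tune, and the "low in both" label is an addition that the table does not define.

```python
def classify_mismatch(logo_share, mrr_share, high=0.25):
    """Map a reason's logo share and MRR share to a mismatch pattern."""
    logo_high = logo_share >= high
    mrr_high = mrr_share >= high
    if logo_high and mrr_high:
        return "High in both: systemic issue"
    if logo_high:
        return "High logo share, low MRR share: many small accounts leaving"
    if mrr_high:
        return "Low logo share, high MRR share: a few big accounts leaving"
    return "Low in both: monitor"  # not part of the original table


# Shares from the scenario above.
print("Price:", classify_mismatch(0.45, 0.12))
print("Security or compliance:", classify_mismatch(0.05, 0.35))
```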

Turning reasons into decisions

Churn reason analysis only matters if each top reason maps to an owner, a hypothesis, and an experiment.

Decision playbooks by common reason

Involuntary (failed payment)

- What it usually means: dunning gaps, card updater missing, invoice friction, payment method mismatch.

- What to do: improve retries, add payment methods, tighten dunning cadence, alert CS on high-MRR failures.

- Metric to watch: Involuntary Churn share of churn MRR.

Price

- What it usually means: price-to-value mismatch for a segment, weak differentiation, or customers on the wrong tier.

- What to do:

  - Audit discounting (see Discounts in SaaS)

  - Repackage (limit features that create support load)

  - Add annual options or longer commitments (see Average Contract Length (ACL))

- Watch: churn reasons split by tier and customer age (price objections right after renewal vs right after signup mean different things).

Missing feature

- What it usually means: a narrow but important workflow gap; sometimes a positioning issue.

- What to do:

  - Validate with a "top lost deals and churned accounts" review

  - Add workaround education if a feature already exists but isn't discovered

  - Ship selectively based on churn MRR at risk, not loudness

- Watch: churn reason mix by segment and ACV (see ACV (Annual Contract Value)).

Onboarding or time to value

- What it usually means: activation is too hard, setup is unclear, or customers don't hit the "aha" moment quickly.

- What to do:

  - Redesign onboarding around one success milestone

  - Reduce time-to-first-value (see Time to Value (TTV))

  - Add lifecycle messaging and success check-ins

- Watch: churn within first 30 to 60 days, plus product adoption leading indicators.

Reliability or performance

- What it usually means: real downtime, slow performance, or trust erosion.

- What to do:

  - Tie incidents to churned MRR at risk

  - Invest in stability work and communicate it clearly

  - Improve monitoring and incident response

- Watch: churn reason spikes after incidents; retention of high-usage accounts.

A simple prioritization score

To keep prioritization honest, use a basic impact framing:

- Churn MRR impacted (how much revenue this reason drives)

- Confidence (how reliable the classification is)

- Control (how quickly you can reduce it)

You don't need complex math—just avoid treating "10 customers said price" the same as "$80k churn MRR due to security gaps."
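If you want a number to sort by, one possible framing is to multiply the three factors. This is a hypothetical scoring rule, not a standard formula: the 0 to 1 scales for confidence and control are judgment calls, and the figures below are invented.

```python
from dataclasses import dataclass


@dataclass
class ReasonCandidate:
    reason: str
    churn_mrr: float   # monthly churn MRR attributed to this reason
    confidence: float  # 0..1, how reliable the classification is
    control: float     # 0..1, how quickly you can reduce it


def priority(candidate: ReasonCandidate) -> float:
    """Hypothetical score: churn MRR discounted by confidence and control."""
    return candidate.churn_mrr * candidate.confidence * candidate.control


candidates = [
    ReasonCandidate("price", 5_000.0, 0.5, 0.6),
    ReasonCandidate("security_compliance", 80_000.0, 0.8, 0.4),
    ReasonCandidate("customer_business_changed", 20_000.0, 0.9, 0.1),
]
ranked = sorted(candidates, key=priority, reverse=True)

for c in ranked:
    print(f"{c.reason:28s} score {priority(c):>10,.0f}")
```

The ranking matters more than the absolute score; treat it as a conversation starter for the retention review, not a verdict.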

The Founder's perspective

If a churn reason is painful but uncontrollable (customer shutdown), I don't ignore it—I use it to refine segmentation, contract terms, and diversification. The decision is still real, just different.

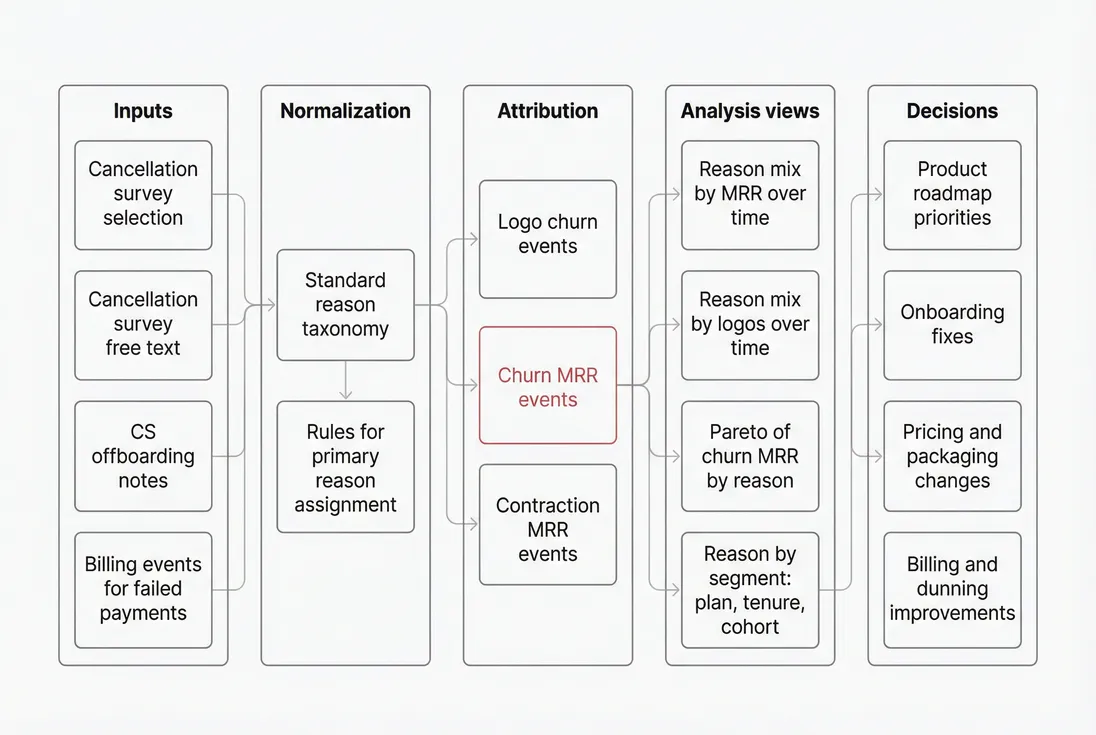

Building a reliable churn reason system

Most churn reason analysis fails because inputs are messy. Here's how to make it trustworthy enough to drive roadmap and go-to-market choices.

Capture: get the reason at the right moment

Best sources, in order of consistency:

- Cancellation flow (self-serve) with required structured selection + optional free text

- CS offboarding notes with a forced primary reason

- Support ticket tagging for cancellations initiated via email

- Sales notes for non-renewals (enterprise)

- Billing events for involuntary churn classification

Practical tips:

- Always allow "Other" but never allow it to be the default.

- Keep reason labels stable; store internal IDs behind the scenes.

- Store both "customer stated reason" and "internal final reason."
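These tips translate into a small data model. The sketch below assumes string reason IDs and a separate label map; all field names and IDs are illustrative, and the fallback to "other" when nothing was captured is one possible policy.

```python
from dataclasses import dataclass
from typing import Optional

# Stable internal IDs map to display labels. Labels can be reworded
# without touching historical records. IDs and labels are illustrative.
REASON_LABELS = {
    "involuntary": "Involuntary (failed payment)",
    "price": "Price",
    "missing_feature": "Missing feature",
    "other": "Other or unknown",
}


@dataclass
class ChurnReasonRecord:
    account_id: str
    stated_reason_id: Optional[str]        # what the customer selected, if anything
    final_reason_id: Optional[str] = None  # set after internal review
    free_text: str = ""

    def reporting_reason(self) -> str:
        """Reason used in reports: final if set, else stated, else 'other'."""
        reason = self.final_reason_id or self.stated_reason_id or "other"
        if reason not in REASON_LABELS:
            raise ValueError(f"unknown reason id: {reason}")
        return reason


record = ChurnReasonRecord("acct_1", stated_reason_id="price",
                           final_reason_id="missing_feature")
print(REASON_LABELS[record.reporting_reason()])
```

Keeping both fields means you can later measure how often the stated reason and the final reason disagree.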

Normalize: prevent taxonomy drift

Taxonomy drift is when the team slowly changes meaning without noticing:

- "Missing feature" becomes a catch-all.

- "Price" is used when the customer actually didn't adopt.

A lightweight fix is a monthly 30-minute audit:

- Review the top 10 churned accounts by MRR.

- Confirm their reason assignment with notes and usage context.

- Update guidelines, not the historical data (unless it's clearly wrong).

Attribute: cancellations vs downgrades

If you sell usage-based or seat-based pricing, contraction can be a leading indicator of churn. Track reasons for:

- Full cancellation

- Downgrade (plan change)

- Seat reduction (if it materially reduces MRR)

This connects churn reason work to expansion and contraction dynamics (see Expansion MRR and Contraction MRR).

Analyze: build the few views you'll actually use

A founder-grade churn reason dashboard typically includes:

- Reason share (MRR) trend by month

- Reason share (logos) trend by month

- Pareto of churn MRR by reason (last month, last quarter)

- Top reasons by segment (plan, cohort, tenure, industry)

If you're using GrowPanel, this is where features like MRR movements, customer list, and filters help you move from a chart to the actual accounts behind the numbers (see MRR movements and Filters). The workflow that matters is: spot a reason spike → open the customer list → read patterns → decide an intervention.

Operationalize: close the loop to action

Your churn reason analysis should feed a recurring operating cadence:

- Monthly retention review (founder, CS lead, product lead)

- Top two churn reasons by MRR: assign owners and a 30-day plan

- One "save" motion update (what to do when this reason appears mid-cycle)

- One product or onboarding change tied to the biggest driver

[A consistent churn reason system connects raw cancellation inputs to normalized reporting and then to specific decisions across product, success, pricing, and billing ops.]

When churn reason analysis breaks

A few failure modes show up repeatedly.

Unknown dominates

If "Other or unknown" is your largest category, you don't have churn reason analysis—you have a suggestion box.

Fixes that work:

- Make a structured reason required in the cancel flow

- Add "involuntary" as an automatic classification from billing events

- Force CS to pick a primary reason in the offboarding template

- Audit the top churn MRR accounts monthly until unknown drops
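Two of these fixes are easy to sketch: overriding the reason from billing data, and tracking the unknown share so you can see it drop. The event tuples, account IDs, and reason IDs below are assumptions for illustration.

```python
def apply_involuntary(events, failed_payment_accounts):
    """Set the reason to 'involuntary' when billing shows a failed payment."""
    return [
        (account, "involuntary" if account in failed_payment_accounts else reason, mrr)
        for account, reason, mrr in events
    ]


def unknown_share(events, unknown_ids=("other", "unknown")):
    """Share of churned MRR whose reason is other or unknown."""
    total = sum(mrr for _, _, mrr in events)
    unknown = sum(mrr for _, reason, mrr in events if reason in unknown_ids)
    return unknown / total if total else 0.0


# Hypothetical events: (account_id, reason, churned_mrr)
events = [("a1", "other", 100.0), ("a2", "unknown", 300.0), ("a3", "price", 600.0)]
failed_payment_accounts = {"a2"}  # hypothetical, derived from billing events

print(f"before: {unknown_share(events):.0%}")
print(f"after:  {unknown_share(apply_involuntary(events, failed_payment_accounts)):.0%}")
```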

Customers lie (or simplify)

Customers often choose socially acceptable reasons:

- "Too expensive" instead of "we didn't adopt"

- "Missing feature" instead of "we bought the wrong tool"

That's normal. Treat "customer stated reason" as a lead, then validate with:

- Tenure (early vs late)

- Usage and adoption

- Support history

- Plan fit
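A validation pass can start as a simple rule that flags stated reasons for human review. The sketch below uses only tenure and usage; the 90-day and 4-active-day thresholds are invented for illustration, and the suggestion should be confirmed by a person, not applied automatically.

```python
def review_stated_reason(stated_reason, tenure_days, active_days_last_30):
    """Suggest a reason for human review; thresholds are illustrative only."""
    low_usage = active_days_last_30 < 4
    early = tenure_days <= 90
    if stated_reason in ("price", "missing_feature") and low_usage and early:
        # Early churn with very little usage often points to an adoption problem.
        return "onboarding"
    return stated_reason


print(review_stated_reason("price", tenure_days=45, active_days_last_30=1))
print(review_stated_reason("price", tenure_days=400, active_days_last_30=20))
```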

Reasons are not mutually exclusive

Real churn is multi-causal. But analysis needs a primary label.

Rule of thumb for primary reason:

- Pick the reason that, if solved, most likely would have prevented churn.

- If none, pick "Customer business changed" or "Other," and capture details in notes.

You optimize the wrong thing

If you only optimize for logo-based reasons, you can end up improving low-tier retention while enterprise churn MRR worsens.

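Always review reason mix against revenue-based metrics such as GRR (Gross Revenue Retention) and Net MRR Churn Rate, not only against logo counts.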

The minimum viable churn reason cadence

If you want this to be lightweight and effective, run it like this:

- Weekly: tag every churn and downgrade with a primary reason (even if provisional).

- Monthly: review top churn reasons by churn MRR and the top 10 churned accounts by MRR.

- Quarterly: refine taxonomy (sparingly), validate top reasons with calls, and update save plays.

The win condition is simple: your top churn reasons become predictable, measurable, and actionable—and your roadmap and go-to-market choices reflect that.

The Founder's perspective

I don't need perfect truth. I need a stable, repeatable signal that tells me where churn is coming from, which segment it's concentrated in, and what intervention has the highest expected return this quarter. Churn reason analysis is the system that makes that possible.

Frequently asked questions

How many churn reasons should we track?

Start with 6 to 10 reasons plus unknown. Fewer than 6 becomes vague; more than 12 becomes inconsistent. Make reasons mutually exclusive, define each in one sentence, and review monthly. You can always add a reason later, but changing definitions breaks trends.

Should we rely on customer-stated reasons or observed signals?

Use both. Customer-stated reasons are directional but often incomplete. Observed signals like low usage, failed payments, or lack of key feature adoption help validate and reclassify. A practical rule is to store the customer answer and a final internal reason used for reporting.

How large can the unknown category be?

Early on, unknown is often 30 to 60 percent because teams do not capture data consistently. A solid target is under 20 percent within two quarters, achieved by a better cancel flow, required fields for success teams, and periodic audits of the top churned accounts.

Should we weight reasons by logos or by MRR?

You need both. Logo-weighted reasons tell you what is breaking at the low end of the market and in onboarding. MRR-weighted reasons tell you what threatens growth and runway. Many teams mistakenly optimize for logo churn while enterprise MRR churn is the real risk.

When is a churn reason worth acting on?

Look for repeatability and concentration. If a reason is above 15 percent of churn MRR for two or three months, or spikes within a specific segment, treat it as actionable. One-off reasons from a few tiny accounts are usually noise unless they predict a larger segment issue.
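The repeatability rule can be written as a small check. This sketch covers only the "above 15 percent for consecutive months" part, not segment spikes; the function name and defaults are illustrative.

```python
def is_actionable(monthly_shares, threshold=0.15, min_consecutive_months=2):
    """True if the most recent months are all above the churn MRR share threshold."""
    if len(monthly_shares) < min_consecutive_months:
        return False
    recent = monthly_shares[-min_consecutive_months:]
    return all(share > threshold for share in recent)


# Hypothetical churn MRR shares for one reason, oldest month first.
print(is_actionable([0.08, 0.17, 0.21]))
print(is_actionable([0.20, 0.10]))
```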