
CES (Customer Effort Score)

Founders care about CES because effort is a hidden tax on growth: it increases support load, slows onboarding, and quietly raises churn risk even when customers say they're "satisfied."

Customer Effort Score (CES) is a survey metric that measures how easy or hard it was for a customer to complete a specific task or interaction—like setting up the product, resolving a support issue, or updating billing.

What CES actually measures

CES is not "how much customers like you." It's "how much work customers had to do."

The key is scope: CES should be tied to a specific moment, such as:

- Completing onboarding

- Getting a support issue resolved

- Finding an answer in docs

- Upgrading or changing plans

- Fixing a billing problem

- Canceling (yes, measure this too: high-effort cancellation creates chargebacks, bad reviews, and support tickets)

If you ask CES as a vague, general survey ("How easy is our product?"), you'll get noisy data that's hard to improve.

Common CES question formats

Two patterns dominate:

Agreement scale (higher is easier)

- "The company made it easy for me to resolve my issue."

- Often 1 to 7: strongly disagree → strongly agree

Difficulty scale (lower is easier)

- "How easy was it to complete X?"

- Often 1 to 5: very easy → very difficult (or the reverse, in which case higher is easier)

Pick one format and stick to it. Changing the wording or direction breaks trend comparisons.

How to calculate CES (without overcomplicating it)

At its simplest, CES is just an average of numeric responses for a defined touchpoint and time window.
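
As a minimal sketch of that definition, the snippet below averages scores for one touchpoint inside one time window. The `responses` records, the touchpoint names, and the `ces_average` helper are illustrative assumptions, not a prescribed schema.

```python
from datetime import date
from statistics import mean

# Hypothetical survey responses: (touchpoint, response date, score on a 1-7 scale).
responses = [
    ("onboarding", date(2024, 5, 6), 6),
    ("onboarding", date(2024, 5, 7), 4),
    ("support", date(2024, 5, 8), 7),
    ("support", date(2024, 5, 20), 2),  # falls outside the window used below
]

def ces_average(responses, touchpoint, start, end):
    """Mean score for one touchpoint, for responses dated start..end inclusive."""
    scores = [
        score
        for tp, day, score in responses
        if tp == touchpoint and start <= day <= end
    ]
    # No responses means no score; return None rather than a misleading 0.
    return mean(scores) if scores else None

week_start, week_end = date(2024, 5, 6), date(2024, 5, 12)
onboarding_ces = ces_average(responses, "onboarding", week_start, week_end)
support_ces = ces_average(responses, "support", week_start, week_end)
```

Returning `None` for an empty window keeps a week with no responses from showing up as a score of zero.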

That's enough for weekly monitoring, but founders usually need two additions:

1) Reverse scoring (if your scale runs "easy → difficult")

If your scale is coded so that higher numbers mean more difficult, reverse it so "higher is better" (or vice versa). What matters is consistency.
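
One common way to reverse a score is to subtract it from the sum of the scale's endpoints. The sketch below assumes integer scales; the `reverse_score` name and its defaults are illustrative.

```python
def reverse_score(score, scale_min=1, scale_max=7):
    """Flip a score so the scale runs in the opposite direction.

    On a 1-7 scale, 1 becomes 7, 2 becomes 6, and the midpoint 4 stays 4.
    """
    if not scale_min <= score <= scale_max:
        raise ValueError(f"score {score} is outside {scale_min}-{scale_max}")
    return scale_min + scale_max - score

# A "2" on a 1-5 difficulty scale becomes a "4" on a higher-is-easier scale.
flipped = reverse_score(2, scale_min=1, scale_max=5)
```

Apply the flip once, at ingestion, so every downstream report reads the same direction.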

2) Top-box rate (often more operationally useful than averages)

Averages hide whether you have a "meh" experience or a polarized one. Track the share of customers reporting very low effort.

For a 1 to 7 agreement CES, "top box" often means 6 or 7. For a 1 to 5 ease CES, it might mean 4 or 5 (depending on your labeling).
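
A top-box rate is just a share, so it can be sketched in a few lines. This assumes a higher-is-easier scale; the sample scores and the `top_box_rate` helper are made up for illustration.

```python
def top_box_rate(scores, threshold):
    """Share of responses at or above the threshold (higher-is-easier scale)."""
    if not scores:
        return None  # no responses, no rate
    return sum(1 for s in scores if s >= threshold) / len(scores)

# Illustrative 1-7 agreement responses; "top box" here means 6 or 7.
scores = [7, 6, 6, 5, 3, 2, 7, 4]
rate = top_box_rate(scores, threshold=6)  # 4 of 8 responses
```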

Where founders get real value from CES

CES is most useful when it answers one of these operational questions:

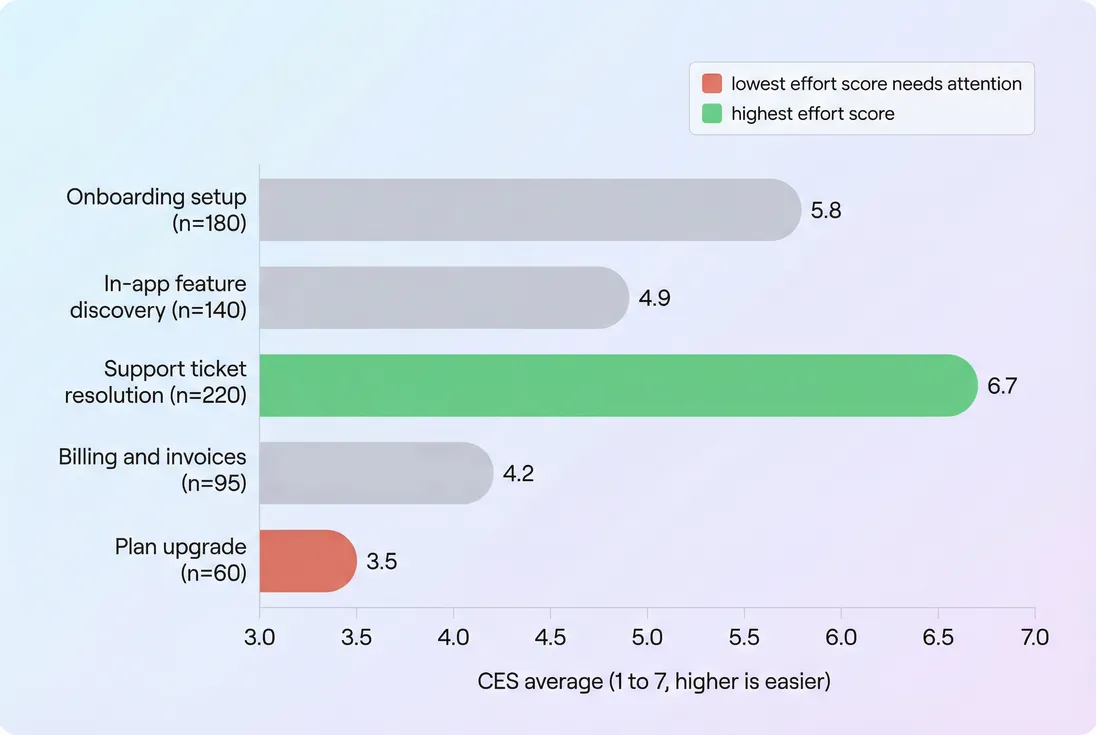

1) Which journey step is costing us the most?

A single global CES number is rarely actionable. You want CES by touchpoint, because each touchpoint has different owners and fixes.
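
A rough sketch of that per-touchpoint view: group responses by touchpoint, average each group, and sort so the highest-effort step comes first. The data and the `rank_touchpoints` helper are hypothetical, and the scale is assumed to be higher-is-easier.

```python
from collections import defaultdict
from statistics import mean

def rank_touchpoints(responses):
    """Return (touchpoint, mean score, response count), worst score first."""
    by_touchpoint = defaultdict(list)
    for touchpoint, score in responses:
        by_touchpoint[touchpoint].append(score)
    summary = [(tp, mean(s), len(s)) for tp, s in by_touchpoint.items()]
    # Lowest mean first, because a low score means high effort on this scale.
    return sorted(summary, key=lambda row: row[1])

# Made-up 1-7 responses tagged with the touchpoint that triggered the survey.
responses = [
    ("onboarding", 6), ("onboarding", 5), ("onboarding", 7),
    ("billing", 3), ("billing", 4), ("billing", 2),
    ("support", 5), ("support", 4),
]
ranking = rank_touchpoints(responses)
```

Keeping the response count next to each mean makes it easier to avoid over-reading a touchpoint that only has a handful of answers.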

Once you see the ranking, you can assign the right work:

- Low onboarding CES → activation friction, missing templates, confusing setup, weak guided paths. Pair with Onboarding Completion Rate and Time to Value (TTV).

- Low billing CES → invoice confusion, failed payments, tax/VAT surprises, self-serve gaps. Pair with Involuntary Churn, Refunds in SaaS, and VAT handling for SaaS.

- Low support CES → long time-to-resolution, too many back-and-forths, unclear escalation paths. Pair with Customer Health Score and Churn Reason Analysis.

The Founder's perspective

Treat CES like a routing signal for company attention. If billing CES drops, it's not a "CX issue"—it's a revenue risk (failed renewals, refunds, disputes). If onboarding CES drops, your CAC payback worsens because customers take longer to reach value. CES helps you decide what to fix this sprint, not what to admire on a dashboard.

2) Is effort driving churn (or just annoying people)?

CES becomes dramatically more useful when you connect it to retention outcomes. The goal isn't "high CES." The goal is higher retention and expansion at the same acquisition spend.

Practical ways to connect CES to outcomes:

- Segment accounts by CES band (for a consistent touchpoint): for example 1.0–4.4, 4.5–5.4, 5.5–7.0

- Compare each band's:

  - Logo churn (see Logo Churn)

  - Revenue retention (see GRR (Gross Revenue Retention) and NRR (Net Revenue Retention))

  - Net churn (see Net MRR Churn Rate)
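
The banding step can be sketched as follows, using logo churn as the outcome. The account records are invented for illustration, and the band edges simply reuse the example bands above; real data would come from your survey tool and billing system.

```python
from collections import defaultdict

def ces_band(avg_ces):
    """Map an account's average CES (1-7, higher is easier) to a band label."""
    if avg_ces < 4.5:
        return "1.0-4.4"
    if avg_ces < 5.5:
        return "4.5-5.4"
    return "5.5-7.0"

def churn_by_band(accounts):
    """Logo churn rate per CES band: churned accounts / all accounts in the band."""
    totals = defaultdict(int)
    churned = defaultdict(int)
    for _account_id, avg_ces, did_churn in accounts:
        band = ces_band(avg_ces)
        totals[band] += 1
        churned[band] += int(did_churn)
    return {band: churned[band] / totals[band] for band in totals}

# Hypothetical accounts: (id, average CES for one touchpoint, churned in period?).
accounts = [
    ("a1", 3.8, True), ("a2", 4.2, True), ("a3", 4.0, False),
    ("a4", 5.0, False), ("a5", 5.2, True),
    ("a6", 6.1, False), ("a7", 6.5, False), ("a8", 5.9, False),
]
churn = churn_by_band(accounts)
```

With real volumes, check the account count in each band before comparing rates; a band with a handful of accounts will swing wildly.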

A typical pattern in SaaS: CES is a leading indicator, especially for early lifecycle churn (first 30–90 days) and for "silent churn" segments that don't complain—they just cancel.

If you want one simple analysis that often works: run CES for onboarding and support, then view retention by cohort. CES issues usually show up as weaker early cohorts in Cohort Analysis.

3) Are we fixing the right thing—or changing who answers?

CES is sensitive to case mix and sampling, so you need to interpret changes carefully.

A CES drop can come from:

- Real friction increase

  - confusing UX change

  - breaking change or bugs

  - slower support response

  - more steps to complete a task

- Customer mix shift

  - more new customers (who struggle more)

  - more enterprise customers (with more complex requirements)

  - more "stuck" customers reaching support

- Channel shift

  - more tickets coming from a hard segment (API, SSO, integrations)

  - fewer "easy wins" responding

Before you declare victory or panic, check:

- Response count and response rate (did volume fall?)

- Touchpoint mix (did you measure the same interaction?)

- Segment mix (plan, industry, lifecycle stage)

- Distribution (are you getting more 1–2 scores, or is everything sliding?)

An average can hold steady even while the distribution gets worse in a specific band, for example when a rise in 1–2 scores is offset by more top scores elsewhere.
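
To see why the distribution check matters, the sketch below compares two made-up weeks of 1 to 7 scores that share the same average but differ in how many responses land at 1 or 2. The `low_score_share` helper and its cutoff are assumptions for illustration.

```python
from statistics import mean

def low_score_share(scores, cutoff=2):
    """Share of responses at or below the cutoff (the high-effort tail)."""
    return sum(1 for s in scores if s <= cutoff) / len(scores)

# Two illustrative weeks on a 1-7 higher-is-easier scale.
week_a = [5] * 10                   # everyone reports a middling experience
week_b = [7] * 6 + [1, 1, 3, 3]     # mostly great, with a painful tail

same_average = mean(week_a) == mean(week_b)   # both weeks average 5
tail_a = low_score_share(week_a)              # no 1-2 scores
tail_b = low_score_share(week_b)              # 2 of 10 responses are 1-2 scores
```

The two weeks look identical on an averages-only dashboard, yet the second one contains customers who had a very hard time.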

Benchmarks founders can actually use

CES benchmarks are messy because scales differ. Still, you can use pragmatic targets.

Recommended interpretation bands (keep your scale consistent)

| CES scale | "Good" | "Watch" | "Problem" |

|---|---|---|---|

| 1–7 agree (higher is easier) | 5.5 to 7.0 | 4.8 to 5.4 | below 4.8 |

| 1–5 ease (higher is easier) | 4.2 to 5.0 | 3.6 to 4.1 | below 3.6 |

| 1–5 difficulty (lower is easier) | 1.0 to 2.0 | 2.1 to 2.6 | above 2.6 |
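
If you want these bands in a report or alert, they can be encoded directly. The sketch below is one possible encoding of the table above; the scale keys and the `classify` helper are hypothetical names, and averages are rounded to one decimal so values between bands (such as 5.45) land consistently.

```python
# Thresholds copied from the interpretation table above.
THRESHOLDS = {
    "agree_1_7": {"good": 5.5, "watch": 4.8, "higher_is_easier": True},
    "ease_1_5": {"good": 4.2, "watch": 3.6, "higher_is_easier": True},
    "difficulty_1_5": {"good": 2.0, "watch": 2.6, "higher_is_easier": False},
}

def classify(scale, average):
    """Return "good", "watch", or "problem" for an average on the given scale."""
    t = THRESHOLDS[scale]
    value = round(average, 1)  # the table is expressed to one decimal place
    if t["higher_is_easier"]:
        if value >= t["good"]:
            return "good"
        return "watch" if value >= t["watch"] else "problem"
    # Lower-is-easier scales flip the comparisons.
    if value <= t["good"]:
        return "good"
    return "watch" if value <= t["watch"] else "problem"

onboarding_status = classify("agree_1_7", 5.0)
```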

Two rules that matter more than the "industry average":

- Benchmark against yourself by touchpoint. Onboarding CES and billing CES don't share the same ceiling.

- Track top-box rate alongside the average. Most SaaS improvements show up as "fewer terrible experiences," not just a slightly higher mean.

The Founder's perspective

Don't spend cycles debating whether 5.3 is "good." Decide what a 0.3 change is worth. If raising onboarding CES by 0.3 reduces early churn enough to lift LTV (Customer Lifetime Value) by 10 percent, that's a product priority. If it doesn't move retention, treat it as support efficiency or brand hygiene.

What moves CES (and how to improve it)

CES is driven by steps, clarity, and recovery—not by "delight."

The biggest drivers in SaaS

- Number of steps

  - too many screens, fields, approvals, confirmations

- Time to complete

  - waiting for data imports, provisioning, human approvals

- Cognitive load

  - unclear terminology, too many choices, poor defaults

- Error handling

  - cryptic errors, dead ends, no next step

- Handoffs

  - "Talk to sales," "email support," "we'll get back to you"

- Expectation management

  - customers thought it would be self-serve; it isn't

- Rework

  - having to repeat info, re-upload files, restate the issue

Practical fixes that reliably lift CES

- Kill steps: remove fields, auto-detect data, progressive setup

- Add "next best action": after any failure, show the fastest recovery path

- Reduce back-and-forth: pre-fill support forms with account context and logs

- Create predictable paths: templates for common use cases

- Set honest expectations: during onboarding, state the time and requirements clearly

CES is especially useful for prioritizing "paper cut" work: changes that aren't big features but reduce friction across many customers.

How to operationalize CES in a founder-friendly way

You want a CES system that produces decisions weekly, not a quarterly report.

Step 1: Pick 3–5 mission-critical touchpoints

Start with:

- onboarding completion

- first value moment (activation)

- support resolution

- billing issue resolution

- upgrade or plan change

If you're early stage, focus on onboarding and support. If you're scaling, billing and upgrades become equally important.

Step 2: Trigger the survey in the moment

Good CES timing is "right after the work," not days later.

Examples:

- after ticket is marked solved

- after onboarding checklist completion

- after successful payment retry

- after plan upgrade confirmation

Keep it to one question plus an optional comment. If you want context, add one multiple-choice follow-up like "What made this hard?" but don't turn CES into a long form.
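
One way to wire this up is a small map from product events to touchpoints, with a single-question survey attached. Everything named below (the event names, the `CesSurvey` shape, the question wording) is a hypothetical sketch, not the API of any particular survey tool.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical event names mapped to the touchpoint they represent.
TRIGGERS = {
    "ticket.solved": "support_resolution",
    "onboarding.checklist_completed": "onboarding_completion",
    "payment.retry_succeeded": "billing_issue_resolution",
    "plan.upgraded": "plan_change",
}

@dataclass
class CesSurvey:
    touchpoint: str
    # One scored question plus one optional comment, nothing more.
    question: str = "The company made it easy for me to complete this task."
    comment_prompt: str = "What made this hard? (optional)"

def survey_for_event(event_name: str) -> Optional[CesSurvey]:
    """Return the survey to send right after an event, or None if it isn't tracked."""
    touchpoint = TRIGGERS.get(event_name)
    if touchpoint is None:
        return None
    return CesSurvey(touchpoint=touchpoint)

survey = survey_for_event("ticket.solved")
```

Storing the touchpoint on every survey is what makes per-touchpoint reporting possible later.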

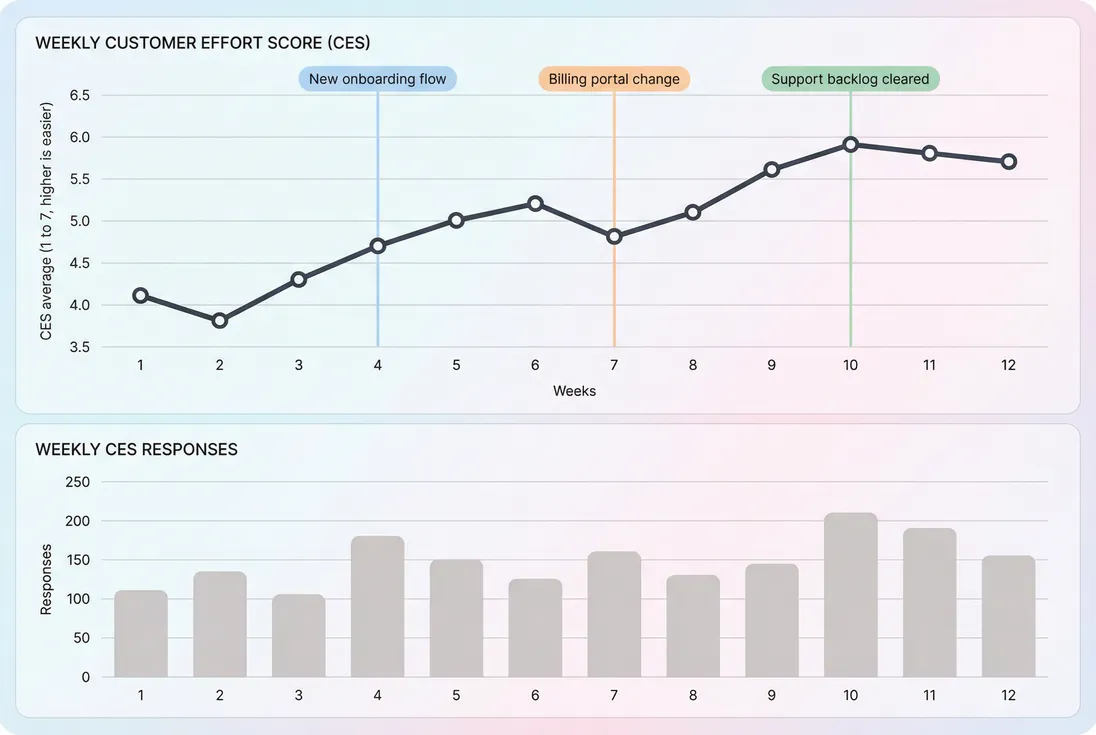

Step 3: Review weekly, fix monthly

A simple operating cadence:

- Weekly: watch for spikes/drops by touchpoint, scan comments, spot regressions

- Monthly: prioritize fixes, ship changes, then watch the next 2–4 weeks for movement

CES works best as a "closed loop": measure → decide → fix → re-measure.
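
The weekly "spot regressions" check can be sketched as a week-over-week comparison per touchpoint. The 0.3 drop threshold and the minimum of 20 responses are illustrative assumptions to tune for your own volume, and the scale is assumed to be higher-is-easier.

```python
def flag_regressions(weekly, min_responses=20, drop_threshold=0.3):
    """Return touchpoints whose average fell by at least drop_threshold.

    weekly maps touchpoint -> chronological list of (week, average, responses).
    Weeks with too few responses are skipped rather than trusted.
    """
    flagged = []
    for touchpoint, weeks in weekly.items():
        if len(weeks) < 2:
            continue  # nothing to compare against yet
        (_, prev_avg, prev_n), (_, curr_avg, curr_n) = weeks[-2], weeks[-1]
        if min(prev_n, curr_n) < min_responses:
            continue  # too little data to call it a regression
        if prev_avg - curr_avg >= drop_threshold:
            flagged.append(touchpoint)
    return flagged

# Made-up weekly summaries on a 1-7 scale.
weekly = {
    "onboarding": [("W18", 5.6, 42), ("W19", 5.1, 40)],
    "support": [("W18", 5.2, 55), ("W19", 5.3, 60)],
    "billing": [("W18", 5.0, 30), ("W19", 4.2, 6)],  # big drop, tiny sample
}
flagged = flag_regressions(weekly)
```

In this sample only onboarding is flagged. Billing also dropped, but on six responses, so it is worth a manual look rather than an alert.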

Step 4: Tie CES to the metrics you run the business on

CES is not a revenue metric, but it should influence revenue decisions.

Connect it to:

- retention metrics like Retention, Customer Churn Rate, and MRR Churn Rate

- expansion behavior like Expansion MRR

- pricing and packaging changes (customers often report higher effort when packaging is confusing; see Discounts in SaaS and Per-Seat Pricing)

If CES improves but churn doesn't, you may be measuring the wrong touchpoint—or your churn is driven by value/pricing rather than friction.

When CES breaks (and how to avoid bad decisions)

CES is easy to misuse. Common failure modes:

You measure "effort" too late

If you ask after the memory fades, responses become emotional summaries, not effort measurement. That starts to overlap with CSAT (Customer Satisfaction Score) or NPS (Net Promoter Score).

You average across unlike experiences

Mixing "reset password" with "debug API integration" will always produce confusing trends. Segment by touchpoint and complexity.

You chase the number instead of the comments

CES is a signal; the "why" is in the verbatims and categorical reasons. Treat comments as a backlog source:

- unclear UI labels

- missing docs

- confusing pricing boundary

- slow response

- bug or downtime (pair with Uptime and SLA)

You change the scale midstream

If you change the question or scale direction, you lose trend integrity. If you must change it, run the old and new versions in parallel for 2–4 weeks and build a mapping between their scores.

The Founder's perspective

CES is a leverage metric. It tells you where small improvements reduce churn, reduce support cost, and speed adoption at the same time. But only if you treat it like instrumentation: stable definitions, consistent triggers, segmented reporting, and a clear owner for each low-scoring touchpoint.

CES vs CSAT vs NPS (quick guidance)

Use each where it's strongest:

- CES: friction and process diagnosis (best for onboarding, support, billing)

- CSAT: satisfaction with an interaction (best right after support or training)

- NPS: relationship and brand loyalty (best quarterly, paired with churn analysis)

If you're choosing just one to start: pick CES when you already know where the customer gets stuck (or you suspect they do), and you want a metric that points to specific fixes.

If you implement CES thoughtfully—by touchpoint, consistently triggered, and tied back to retention—you'll stop arguing about "customer experience" in the abstract and start making concrete tradeoffs that protect growth.

Frequently asked questions

What is a good CES score?

It depends on the scale and wording. On a 1 to 7 agree scale with a statement like "made it easy," strong SaaS teams often see averages above 5.2 with a top-box rate above 60 percent. On a 1 to 5 difficulty scale, averages below 2.0 are typically healthy. Compare by touchpoint, not globally.

How is CES different from CSAT and NPS?

CES is best for diagnosing friction in specific journeys like onboarding, support, or billing. CSAT captures how people feel about an interaction, while NPS reflects broader brand loyalty. Many SaaS teams run CES after key tasks, CSAT after support, and NPS quarterly. Tie them back to retention and churn outcomes.

How often should we measure CES?

Measure CES continuously on high-impact touchpoints, not as a one-time survey. Trigger it after ticket resolution, after onboarding completion, after a billing change, and after a failed attempt like an error or payment decline. Review weekly for operations and monthly for trends. Keep questions and scales stable over time.

Why did our CES suddenly drop?

Sudden drops are often caused by product changes, pricing or packaging confusion, billing friction, or support process slowdowns. First rule out sampling changes, such as more complex tickets or a different customer mix. Then segment by touchpoint, plan, and channel to isolate the driver. Treat CES drops like incident response, with clear owners and fixes.

How do we connect CES to retention and revenue?

Link CES to leading indicators and outcomes. Segment CES by customer cohort, plan, and lifecycle stage, then compare with logo churn, gross retention, and expansion behavior. Look for thresholds where churn risk spikes, like CES below 4.5 on a 1 to 7 scale. Use CES to prioritize fixes that protect retention and reduce support cost.