
Average session duration

Founders care about average session duration because it's one of the fastest "smoke signals" for whether users are getting ongoing value—or bouncing before they reach it. But it's also easy to misread: a longer session can mean love, or it can mean confusion.

Average session duration is the average amount of time a user spends in your product during a single visit (a "session"), measured over a defined period (day, week, month).

What average session duration reveals

Average session duration is an engagement intensity metric. It helps you answer questions like:

- Are users spending enough time to reach value in the product?

- Are new users getting lost (long sessions, low progress)?

- Are experienced users developing a habit (consistent time patterns)?

- Did a release change how people work (up or down)?

The catch: session duration is only meaningful when you anchor it to what users are trying to do.

- In workflow SaaS (invoicing, ticketing, scheduling), shorter sessions can be better if users complete tasks quickly.

- In exploratory tools (analytics, design, research), longer sessions often indicate deeper adoption.

- In communication/collaboration, duration can rise simply because the app is left open all day.

That's why founders get the most value from session duration when they segment it and tie it to outcomes like activation, conversion, and retention. Pair it with metrics such as Active Users (DAU/WAU/MAU) and DAU/MAU Ratio (Stickiness) to separate "time per visit" from "how often they return."

The Founder's perspective

Session duration is rarely a north-star metric. It's a diagnostic. When it shifts, you're looking for the operational reason: did we change onboarding, performance, pricing, or the customer mix—and did outcomes move with it?

How sessions are defined

Before you interpret the number, be clear about what your analytics tool considers a "session." Most sessionization comes down to three decisions:

Session start

Common approaches:

- Web pageview based: a session starts when a user loads the app.

- Event based: a session starts on the first tracked event (login, view, click).

- App foreground based (mobile/desktop): a session starts when the app becomes active.

Session end

A session ends when one of these happens:

- The user closes the app or navigates away (not always reliably detectable).

- The last tracked event is followed by an inactivity gap.

- A fixed cutoff is hit (less common, but sometimes used in call-center-style apps).

Inactivity timeout (the most important part)

Most products use an inactivity timeout (often 15–30 minutes). Without it, "tab left open" becomes "user engaged," and your averages become fiction.

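A simple calculation looks like this:

$$\text{average session duration} = \frac{\sum \text{session duration}}{\text{number of sessions}}$$

Where:

- $\text{session duration}$ is computed per session based on your start/end rules

- $\text{number of sessions}$ is the count of sessions in the time window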
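As a rough illustration of how the start/end rules and the inactivity timeout feed into the average, here is a minimal Python sketch. It assumes event-based sessionization, a 30-minute timeout (one choice within the 15–30 minute range above), and sessions measured from first to last tracked event. The function names and sample timestamps are hypothetical, not taken from any particular analytics tool.

```python
from datetime import datetime, timedelta

# Assumption: a 30-minute inactivity timeout; tune to your product.
INACTIVITY_TIMEOUT = timedelta(minutes=30)


def sessionize(timestamps, timeout=INACTIVITY_TIMEOUT):
    """Group one user's event timestamps into (start, end) sessions.

    A new session starts whenever the gap since the previous event
    exceeds the inactivity timeout.
    """
    sessions = []
    for ts in sorted(timestamps):
        if sessions and ts - sessions[-1][1] <= timeout:
            sessions[-1][1] = ts  # extend the current session
        else:
            sessions.append([ts, ts])  # start a new session
    return [(start, end) for start, end in sessions]


def average_session_duration(sessions):
    """Mean duration in seconds: total duration / number of sessions."""
    if not sessions:
        return 0.0
    total = sum((end - start).total_seconds() for start, end in sessions)
    return total / len(sessions)


# Hypothetical events for a single user.
events = [
    datetime(2024, 1, 1, 9, 0),
    datetime(2024, 1, 1, 9, 5),
    datetime(2024, 1, 1, 9, 12),
    datetime(2024, 1, 1, 14, 0),  # gap > 30 minutes: new session
    datetime(2024, 1, 1, 14, 4),
]
user_sessions = sessionize(events)
avg_seconds = average_session_duration(user_sessions)
print(len(user_sessions), avg_seconds)
```

Under these rules a session with a single event has a duration of zero, which is one reason different tools can report different averages for the same traffic.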

Mean vs median (don't skip this)

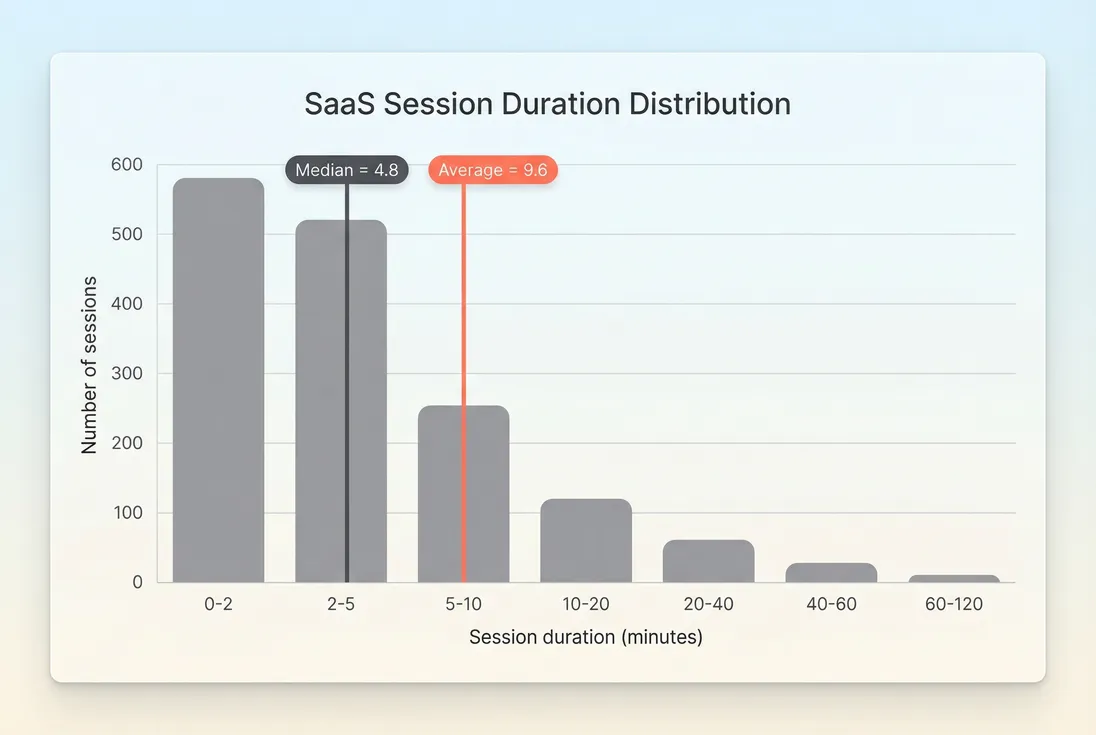

Session duration usually has a long tail: many short sessions, and a few extremely long ones (often caused by idle tabs or background processes). That makes the average (mean) volatile.

In practice, founders should track:

- Median session duration (typical user experience)

- Average session duration (sensitive to outliers; good for catching instrumentation issues)

- Percentiles like p75/p90 (how power users behave)
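To see how these statistics diverge on a long-tailed sample, here is a small sketch using Python's standard `statistics` module. The durations are made-up values in minutes, with one idle-tab outlier added on purpose.

```python
import statistics


def summarize(durations_min):
    """Return mean, median, p75, and p90 for a list of session durations."""
    # quantiles(n=100) returns 99 cut points; index 74 is p75, index 89 is p90.
    cuts = statistics.quantiles(durations_min, n=100, method="inclusive")
    return {
        "mean": statistics.mean(durations_min),
        "median": statistics.median(durations_min),
        "p75": cuts[74],
        "p90": cuts[89],
    }


# Hypothetical sample: nine ordinary sessions and one 240-minute idle tab.
sample_durations = [2, 3, 3, 4, 4, 5, 5, 6, 7, 240]
summary = summarize(sample_durations)
print(summary)
```

In this sample the single outlier pulls the mean to 27.9 minutes while the median stays at 4.5, which is the gap the list above is meant to expose.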

The Founder's perspective

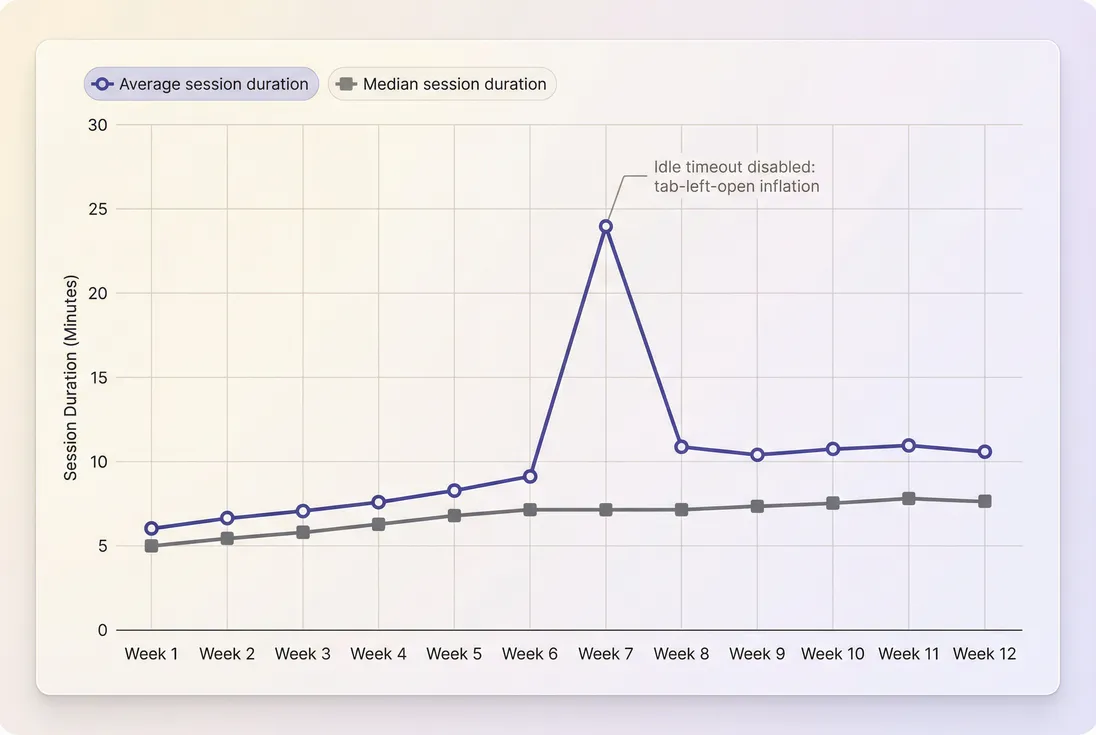

If your average jumps but your median doesn't, assume measurement error or idle inflation before you assume users suddenly got more engaged.

Benchmarks and segmentation

Benchmarks are only useful when you compare like-for-like: same persona, same lifecycle stage, same platform, similar workflow.

Here are ranges that are directionally useful if your tracking is clean (timeouts set, bots excluded, single-page app events firing):

| Product type | Typical healthy median session duration | Notes on interpretation |
|---|---|---|
| Fast workflow (billing, scheduling, lightweight CRM) | 2–8 minutes | Shorter can be better if task success and retention improve. Watch for "login-and-leave" sessions. |
| Deep workflow (project management, support, ERP-lite) | 8–20 minutes | Longer sessions often correlate with adoption, but can also signal complexity and training burden. |
| Analytics/BI, monitoring, security dashboards | 10–30 minutes | Time can rise with reporting needs; pair with saved reports, alerts, and renewals. |
| Collaboration/communication | 5–25 minutes | Inflates easily due to "always open" behavior; engaged time is more reliable than raw time. |
| Consumer-style PLG utilities | 1–6 minutes | Frequency matters more; pair with stickiness and retention. |

Segment it like a founder

If you only look at one number, you'll miss the story. The segments that most often change what "good" means:

- Lifecycle: trial, week 1, month 2+ (tie to Time to Value (TTV) and Onboarding Completion Rate)

- Persona / role: admin vs end-user, creator vs viewer

- Account size / plan: small teams vs enterprise behaviors

- Acquisition channel: high-intent organic vs broad paid (often very different session patterns)

- Platform: mobile sessions are structurally shorter than desktop

A practical rule: build your baseline using the segment you're currently optimizing (for many founders, that's "new users in first 7 days").
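A segmented baseline can be as simple as a median per group. The sketch below assumes each session record already carries segment labels; the field names (`lifecycle`, `platform`, `duration_min`) and the sample rows are hypothetical.

```python
from collections import defaultdict
from statistics import median


def median_by_segment(sessions, key):
    """Return the median session duration (minutes) for each value of `key`."""
    groups = defaultdict(list)
    for session in sessions:
        groups[session[key]].append(session["duration_min"])
    return {segment: median(values) for segment, values in groups.items()}


# Hypothetical session records.
segment_sessions = [
    {"lifecycle": "first_7_days", "platform": "desktop", "duration_min": 12},
    {"lifecycle": "first_7_days", "platform": "mobile", "duration_min": 4},
    {"lifecycle": "first_7_days", "platform": "desktop", "duration_min": 9},
    {"lifecycle": "month_2_plus", "platform": "desktop", "duration_min": 6},
    {"lifecycle": "month_2_plus", "platform": "mobile", "duration_min": 2},
]
by_lifecycle = median_by_segment(segment_sessions, "lifecycle")
by_platform = median_by_segment(segment_sessions, "platform")
print(by_lifecycle, by_platform)
```

Running the same function with a different key makes it easy to check whether a shift in the overall number comes from one lifecycle stage or one platform.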

Diagnosing changes in duration

When session duration changes, founders usually want to know: "Is this product progress or a hidden problem?"

Use this decision-oriented lens:

Duration up: the three common causes

More value captured (good)

Users spend longer because they're doing more: creating, inviting teammates, running reports, shipping work.

More friction (bad)

Users spend longer because it's harder to finish: confusing navigation, slower performance, broken flows.

Measurement inflation (not real)

Idle timeout disabled, background pings keep sessions alive, or a tracking regression changed start/end logic.

Duration down: the three common causes

Faster task completion (good)

You removed steps, improved defaults, or added automation.

Lower engagement (bad)

Users stop exploring, don't reach key features, or churn risk rises.

Instrumentation truncation (not real)

Missing events, broken client tracking, or SPA route changes not captured.

A founder's diagnostic table

| Observation | Likely meaning | What to check next |
|---|---|---|
| Average up, median flat | Outliers or idle inflation | Timeout settings, distribution tail, p90, "active time" logic |
| Duration up, activation down | Confusion in onboarding | Funnel steps, rage clicks, support tickets, onboarding completion |
| Duration down, retention up | Efficiency improvement | Task completion rate, automation usage, support volume |
| Duration down, churn up | Disengagement | Feature adoption by cohort, product usage drop, Churn Reason Analysis |
| Duration changes only on one platform | Platform-specific issue | Web vs mobile performance, crashes, tracking parity |
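The first four rows of the table can be encoded as a small rule set for a dashboard or weekly report. This is only a sketch: inputs are relative period-over-period changes, the 5% threshold for calling something "up" or "down" is an assumption, and the platform-specific row is left out because it needs per-platform data.

```python
# Assumption: changes within +/-5% are treated as "flat".
THRESHOLD = 0.05


def direction(change, threshold=THRESHOLD):
    """Map a relative change (e.g. 0.12 for +12%) to up / down / flat."""
    if change > threshold:
        return "up"
    if change < -threshold:
        return "down"
    return "flat"


def diagnose(mean, median, activation=0.0, retention=0.0, churn=0.0):
    """Return the likely meaning from the diagnostic table, or 'inconclusive'."""
    mean_dir, median_dir = direction(mean), direction(median)
    if mean_dir == "up" and median_dir == "flat":
        return "outliers or idle inflation"
    if median_dir == "up" and direction(activation) == "down":
        return "confusion in onboarding"
    if median_dir == "down" and direction(retention) == "up":
        return "efficiency improvement"
    if median_dir == "down" and direction(churn) == "up":
        return "disengagement"
    return "inconclusive: segment further"


print(diagnose(mean=0.30, median=0.01))
print(diagnose(mean=0.20, median=0.20, activation=-0.10))
print(diagnose(mean=-0.15, median=-0.15, retention=0.08))
```

The output is a prompt for what to check next, not a verdict; the "What to check next" column still applies.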

This is where segmentation plus retention analysis becomes powerful. A clean approach is to examine session duration by signup cohort and compare against downstream retention in Cohort Analysis and Retention.

The Founder's perspective

Don't ask "Did session duration improve?" Ask "Did session duration change for the users we care about, in the phase we're fixing, and did the business outcome move too?"

Turning duration into decisions

Session duration is most valuable when you treat it as an early indicator—then validate with outcomes.

1) Improve onboarding without guessing

If you're iterating onboarding, you want users to reach first value quickly, not "hang out."

A practical pattern:

- Track median session duration for new users in their first 1–3 sessions

- Compare to onboarding outcomes like Onboarding Completion Rate and early retention

- Investigate mismatches:

  - Long sessions + low completion = confusion

  - Short sessions + low completion = bounce / no motivation

  - Short sessions + high completion = efficient onboarding (often best-case)

Concrete example:

You add an interactive setup wizard. Median session duration for new users rises from 6 to 11 minutes. If onboarding completion rises and week-1 retention improves, that's likely healthy. If completion is flat and support tickets increase, you probably added steps without clarity.
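The duration/completion patterns above could be sketched as a simple classifier for a new-user cohort. The cutoffs (10 minutes for a "long" session, 60% for "high" completion) are hypothetical placeholders; you would set them from your own baseline.

```python
def classify_onboarding(median_min, completion_rate,
                        long_session_min=10.0, high_completion=0.6):
    """Label a cohort by median session duration and onboarding completion.

    Both thresholds are illustrative assumptions, not benchmarks.
    """
    is_long = median_min >= long_session_min
    is_high = completion_rate >= high_completion
    if is_long and not is_high:
        return "confusion"
    if not is_long and not is_high:
        return "bounce / no motivation"
    if not is_long and is_high:
        return "efficient onboarding"
    # Long sessions with high completion, as in the wizard example:
    # likely healthy, but confirm with week-1 retention.
    return "long but completing: check retention"


print(classify_onboarding(median_min=11, completion_rate=0.35))
print(classify_onboarding(median_min=6, completion_rate=0.75))
```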

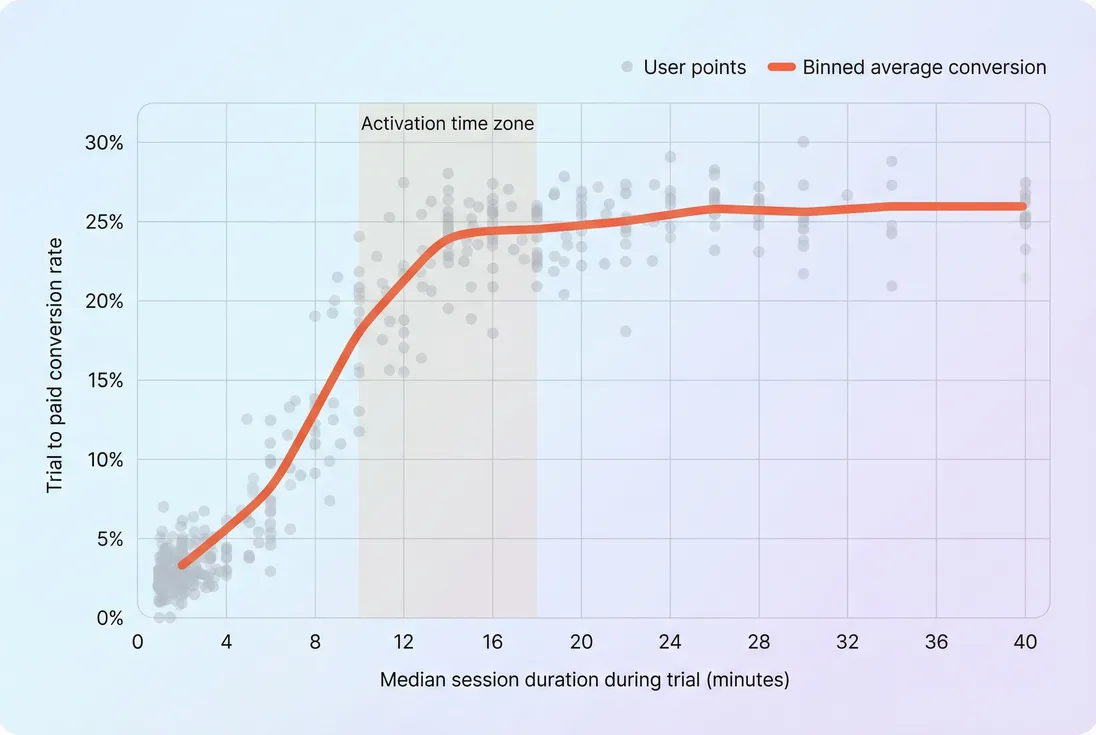

2) Find your "activation time zone"

In many SaaS products, there's a range of early engagement that correlates with conversion and retention—but only up to a point. Past that, extra time often adds less value (or signals struggle).

How to use this operationally:

- Identify the duration range where conversion lifts meaningfully.

- Then design onboarding, checklists, templates, and nudges to get users into that zone faster.

- Validate that these users also retain better (use cohorts and retention curves).

This pairs naturally with Feature Adoption Rate: time is only valuable if it includes the features that create stickiness.
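One way to look for that zone is to bucket users by early session duration and compare conversion rates across buckets. The sketch below assumes each user is represented as a `(duration_min, converted)` pair; the bucket edges and sample data are hypothetical.

```python
from collections import defaultdict

# Assumption: bucket edges in minutes, lower bound inclusive, upper exclusive.
BUCKETS = [(0, 5), (5, 10), (10, 20), (20, float("inf"))]


def conversion_by_duration(users, buckets=BUCKETS):
    """Return the conversion rate per duration bucket."""
    counts = defaultdict(lambda: [0, 0])  # bucket -> [converted, total]
    for duration_min, converted in users:
        for low, high in buckets:
            if low <= duration_min < high:
                counts[(low, high)][0] += int(converted)
                counts[(low, high)][1] += 1
                break
    return {bucket: conv / total for bucket, (conv, total) in counts.items()}


# Hypothetical trial users: (early session duration in minutes, converted?)
trial_users = [
    (2, False), (3, False), (4, True),
    (6, True), (8, False), (9, True),
    (12, True), (15, True), (18, False),
    (25, True), (40, False),
]
rates = conversion_by_duration(trial_users)
for bucket in BUCKETS:
    print(bucket, round(rates[bucket], 2))
```

With real data you would want enough users per bucket for the rates to be stable, and you would repeat the comparison against retention rather than conversion alone.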

3) Detect product friction after releases

Session duration is a great regression alarm when used with guardrails:

- Monitor median and p90 (not just average)

- Alert on sudden week-over-week changes

- Split by platform and key flows (onboarding, core workflow, billing)

If duration rises and activation drops right after a release, treat it like a "soft outage." Even without a full incident, slower performance and added steps show up quickly in session time.
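A week-over-week check on median and p90 might look like the following sketch. The 20% alert threshold and the sample durations are assumptions; in practice you would run this per platform and per key flow.

```python
import statistics


def p90(values):
    """90th percentile using the standard library's inclusive method."""
    return statistics.quantiles(values, n=10, method="inclusive")[8]


def week_over_week_alerts(last_week, this_week, threshold=0.20):
    """Return (metric, relative_change) pairs that moved more than threshold."""
    alerts = []
    for name, stat in (("median", statistics.median), ("p90", p90)):
        before, after = stat(last_week), stat(this_week)
        if before == 0:
            continue  # relative change is undefined for a zero baseline
        change = (after - before) / before
        if abs(change) > threshold:
            alerts.append((name, round(change, 2)))
    return alerts


# Hypothetical session durations in minutes for two consecutive weeks.
last_week = [3, 4, 5, 5, 6, 7, 8, 9, 10, 12]
this_week = [5, 6, 7, 8, 8, 9, 10, 12, 14, 20]
alerts = week_over_week_alerts(last_week, this_week)
print(alerts)
```

Here both the median and the p90 rise by more than 20%, so both would be flagged for a closer look alongside activation.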

Related metrics to sanity-check at the same time:

- Conversion Rate (trial to paid, signup to activation)

- Time to Value (TTV)

- Customer Churn Rate (lagging, but confirms impact)

- Active Users (DAU/WAU/MAU) (did usage frequency change too?)

4) Avoid using it as a vanity KPI

Some teams accidentally optimize for "time in app." That's risky:

- Users don't pay for time; they pay for outcomes.

- For many categories, the best product reduces time spent while increasing success.

Better framing:

- Optimize for task success and repeat usage, and use session duration as supporting evidence.

- If you must set a target, set it for a specific segment and job-to-be-done (e.g., "new admins in first week reach 8–12 minutes median in session 1–2 and complete setup").

The Founder's perspective

If your customer says, "Your product saves me an hour a day," and your dashboard celebrates longer sessions, you're rewarding the wrong thing.

Practical measurement checklist

If you want session duration you can trust, implement these basics:

- Set an inactivity timeout (commonly 15–30 minutes) and document it.

- Track median, average, and p90 (not just one number).

- Exclude internal users, QA, and obvious bots.

- For single-page apps, ensure route changes and key interactions emit events.

- Review the distribution monthly to catch instrumentation drift.

- Always interpret alongside activation and retention cohorts.

When session duration moves, it's telling you something. Your job is to quickly decide whether it's value, friction, or measurement—and then tie it back to outcomes that actually grow the business.

Frequently asked questions

There is no universal benchmark because "good" depends on the job your product does. Workflow SaaS can be healthy at 2–8 minutes per session if users complete tasks fast. Collaboration, analytics, and creative tools often trend 10–30 minutes. Compare against your own cohorts, segments, and outcomes, not the internet.

Not always. Longer sessions can mean deeper engagement, but they can also mean confusion, slow performance, or users getting stuck. Treat increases as a prompt to check outcomes: activation, onboarding completion, feature adoption, and retention. If duration rises while conversion or retention falls, you likely introduced friction.

Use an inactivity timeout and define "engaged time." For example, end a session after 30 minutes with no events, or measure only time between meaningful events (clicks, saves, API calls). Also review the distribution (median and percentiles). A rising average with flat median usually signals long-idle outliers.
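As one possible definition of "engaged time", the sketch below sums only the gaps between consecutive meaningful events that fall under an idle cutoff. The 5-minute cutoff and the sample timestamps are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta

# Assumption: gaps longer than 5 minutes count as idle and are ignored.
IDLE_GAP = timedelta(minutes=5)


def engaged_seconds(timestamps, idle_gap=IDLE_GAP):
    """Sum the time between consecutive events, skipping idle gaps."""
    ordered = sorted(timestamps)
    total = 0.0
    for previous, current in zip(ordered, ordered[1:]):
        gap = current - previous
        if gap <= idle_gap:
            total += gap.total_seconds()
    return total


# Hypothetical meaningful events (clicks, saves) within one raw session.
activity = [
    datetime(2024, 1, 1, 9, 0),
    datetime(2024, 1, 1, 9, 2),
    datetime(2024, 1, 1, 9, 3),
    datetime(2024, 1, 1, 9, 40),  # 37-minute idle gap, not counted
    datetime(2024, 1, 1, 9, 41),
]
engaged = engaged_seconds(activity)
raw = (max(activity) - min(activity)).total_seconds()
print(engaged, raw)
```

In this example the raw span is 41 minutes, but only 4 minutes count as engaged time.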

Track both. Average (mean) is sensitive to outliers and is useful for capacity planning and "total time spent" trends. Median is more stable and better for product decisions because it reflects the typical session. Add percentiles (p75, p90) to see whether changes come from core users or a long tail.

Use it as an input to retention analysis, not a standalone KPI. Segment duration by persona, plan, and lifecycle stage (trial, first week, steady-state) and compare against retention cohorts. Look for a "good zone" where duration correlates with activation and renewal, then design onboarding and product nudges to get users there faster.