Onboarding completion rate

Most SaaS teams don't lose customers because the product is "bad." They lose them because customers never get far enough to experience the value they already paid for (or evaluated in a trial). Onboarding Completion Rate is the fastest, most operational way to see that problem early—before it becomes low trial conversion, weak expansion, and higher churn.

Onboarding Completion Rate is the percentage of new accounts (or users) that reach your defined onboarding "done" milestone within a set time window after signup or purchase.

What counts as "completed" onboarding

If you define completion as "clicked through a tour," you'll get a flattering number that won't predict revenue. If you define it as "fully configured enterprise SSO + first dashboard built," you'll get a number so low it's hard to act on. The goal is a definition that is observable, repeatable, and tightly linked to first value.

A practical way to define onboarding completion is:

- One setup action that removes initial friction (import data, connect integration, create first project).

- One value action that proves the product works (run first report, send first campaign, publish first page).

- Optional: One collaboration action (invite teammate, assign role) for multi-user products.

Here are example completion definitions that usually correlate with real outcomes:

| Product type | Bad completion definition | Better completion definition |
|---|---|---|
| Self-serve PLG | "Finished onboarding checklist" | "Connected data source AND created first output users return to" |
| B2B workflow tool | "Created a workspace" | "Created workspace AND completed first workflow run successfully" |
| Dev tool | "Installed SDK" | "Sent first event successfully AND queried it" |
| Sales-led enterprise | "Kickoff call held" | "First department live with weekly active usage or first business report delivered" |

If you're unsure, tie it explicitly to first value and validate it against downstream metrics like Conversion Rate, Customer Churn Rate, and Time to Value (TTV).
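As a minimal sketch, the setup / value / collaboration pattern above can be written as a predicate over the events an account has succeeded at. The event names and the `is_onboarding_complete` helper are hypothetical; substitute whatever your own tracking emits.

```python
from dataclasses import dataclass, field

# Hypothetical event names; replace with the events your product actually tracks.
SETUP_EVENTS = {"data_source_connected", "data_imported", "first_project_created"}
VALUE_EVENTS = {"first_report_run", "first_campaign_sent", "first_page_published"}
COLLAB_EVENTS = {"teammate_invited", "role_assigned"}


@dataclass
class Account:
    account_id: str
    # Names of events that *succeeded* for this account (not page views).
    succeeded_events: set = field(default_factory=set)


def is_onboarding_complete(account: Account, require_collaboration: bool = False) -> bool:
    """True when the account has one setup action AND one value action.

    Pass require_collaboration=True for multi-user products.
    """
    has_setup = bool(account.succeeded_events & SETUP_EVENTS)
    has_value = bool(account.succeeded_events & VALUE_EVENTS)
    has_collab = bool(account.succeeded_events & COLLAB_EVENTS)
    if require_collaboration:
        return has_setup and has_value and has_collab
    return has_setup and has_value


solo = Account("acct_1", {"data_imported", "first_report_run"})
tour_only = Account("acct_2", {"tour_finished"})

print(is_onboarding_complete(solo))       # setup + value present
print(is_onboarding_complete(tour_only))  # a tour alone does not count
```

Keeping the definition in one function makes it easier to notice when someone loosens it, which matters when you compare completion rates across time.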

The Founder's perspective: Your completion definition is a strategic decision. It determines what the org optimizes: "Did they click around?" versus "Did they get value fast enough to keep paying?" Make the definition hard enough that it predicts retention, but not so hard that it becomes a services KPI.

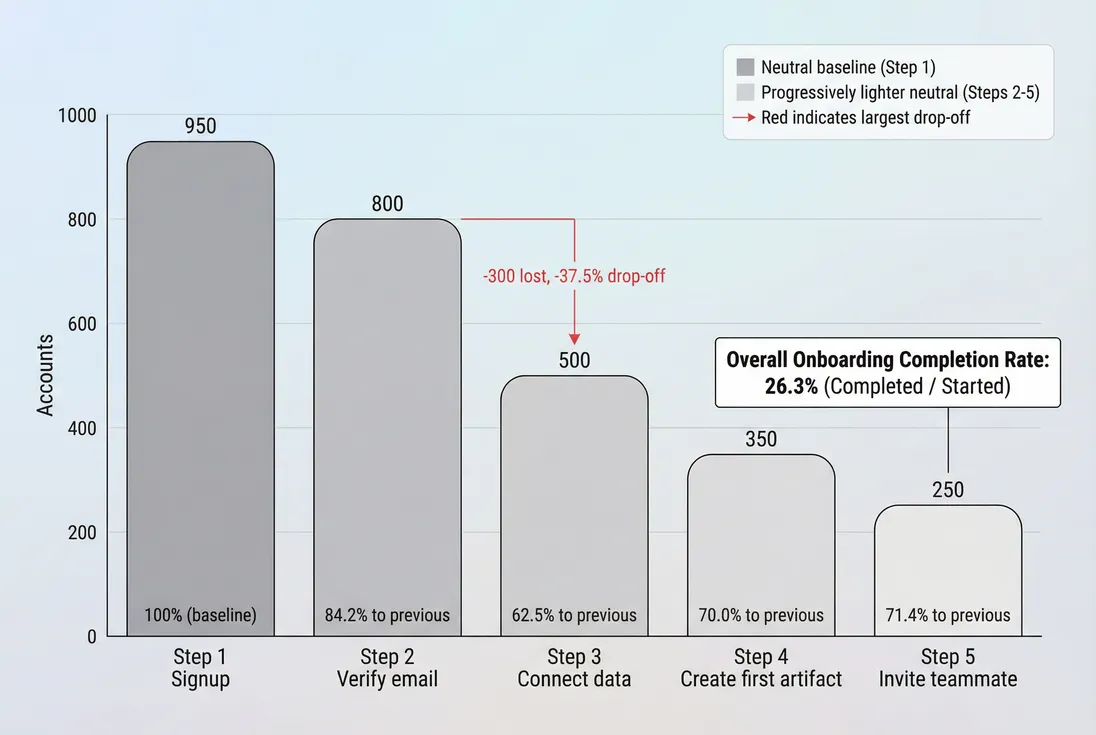

A step-by-step onboarding funnel shows where accounts drop and which step drives the biggest completion-rate gains if improved.

How to calculate it (without fooling yourself)

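At its simplest:

Onboarding Completion Rate = (accounts that completed onboarding within N days of starting) ÷ (accounts that started onboarding) × 100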

The part founders often miss is the time window and the unit of analysis.

Choose the unit: account vs user

- Account-level completion (recommended for B2B): one completion per company/workspace. This aligns to revenue and retention.

- User-level completion: useful for diagnosing friction inside the first session, but it can misrepresent adoption in multi-seat accounts.

A common compromise:

- Primary metric: account completion rate

- Supporting metrics: first user completion rate and additional user activation rate

Add a time window

Without a window, a cohort's completion rate keeps creeping upward as stragglers eventually finish, so older cohorts always look better than recent ones. That hides urgency and makes experiments look better than they are.

Practical windows:

- Free trial: N = trial length, and also track N = 1 day / 3 days to see early momentum.

- Self-serve paid: N = 7 or 14 days.

- Sales-led onboarding: N = 30 or 60 days, plus an operational milestone window (for example, "within 7 days of kickoff").
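A rough sketch of a windowed calculation is shown here. It assumes each account is represented as a `(started_at, completed_at)` pair, with `completed_at` set to `None` when the milestone was never reached. The `completion_rate` function and the sample cohort are illustrative, not a prescribed schema.

```python
from datetime import datetime, timedelta


def completion_rate(accounts, window_days: int) -> float:
    """Percent of accounts that completed within window_days of starting.

    accounts: iterable of (started_at, completed_at) pairs; completed_at is
    None when the account never reached the milestone.
    """
    accounts = list(accounts)
    if not accounts:
        return 0.0
    window = timedelta(days=window_days)
    completed = sum(
        1
        for started_at, completed_at in accounts
        if completed_at is not None and completed_at - started_at <= window
    )
    return 100.0 * completed / len(accounts)


start = datetime(2024, 3, 1)
cohort = [
    (start, start + timedelta(hours=5)),  # finished on day 1
    (start, start + timedelta(days=2)),   # finished within 3 days
    (start, start + timedelta(days=20)),  # finished, but after a 14-day trial
    (start, None),                        # never finished
]

for n in (1, 3, 14):
    print(f"within {n:>2} days: {completion_rate(cohort, n):.0f}%")
```

When running this on real data, include only accounts that started at least N days ago. Otherwise recent signups that have not had their full window yet pull the rate down.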

Define "started onboarding"

Be explicit. Denominator options change the story:

- All signups (broadest): exposes top-of-funnel quality and early friction.

- Activated signups (narrower): focuses on product onboarding after initial verification.

- Paid customers only: useful for CS-led onboarding, but hides acquisition/on-site issues.

If you're running a Free Trial, you usually want all trial starts in the denominator—because the business impact is trial-to-paid conversion.
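To see how much the denominator changes the story, here is a small worked example with made-up counts for one week of trial starts. All numbers are hypothetical, and it assumes every account that completed onboarding also counts as activated.

```python
# Hypothetical counts for one week of trial starts.
signups = 1000            # everyone who started a trial
activated = 620           # verified email and logged in at least once
paid = 150                # converted to a paid plan
completed = 310           # reached the completion milestone within the window
completed_and_paid = 135  # completed accounts that are also paying


def pct(numerator: int, denominator: int) -> float:
    return round(100.0 * numerator / denominator, 1)


rates = {
    "all signups": pct(completed, signups),
    "activated signups": pct(completed, activated),
    "paid customers only": pct(completed_and_paid, paid),
}
for denominator, rate in rates.items():
    print(f"{denominator:<20} {rate}%")
```

The same underlying behavior reads as 31%, 50%, or 90% depending on the denominator, which is why the choice needs to be stated next to the number.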

What this metric reveals (and what it doesn't)

Onboarding Completion Rate is a friction and clarity meter. It answers: "Do new customers reliably reach the first moment where they say, 'I get it'?"

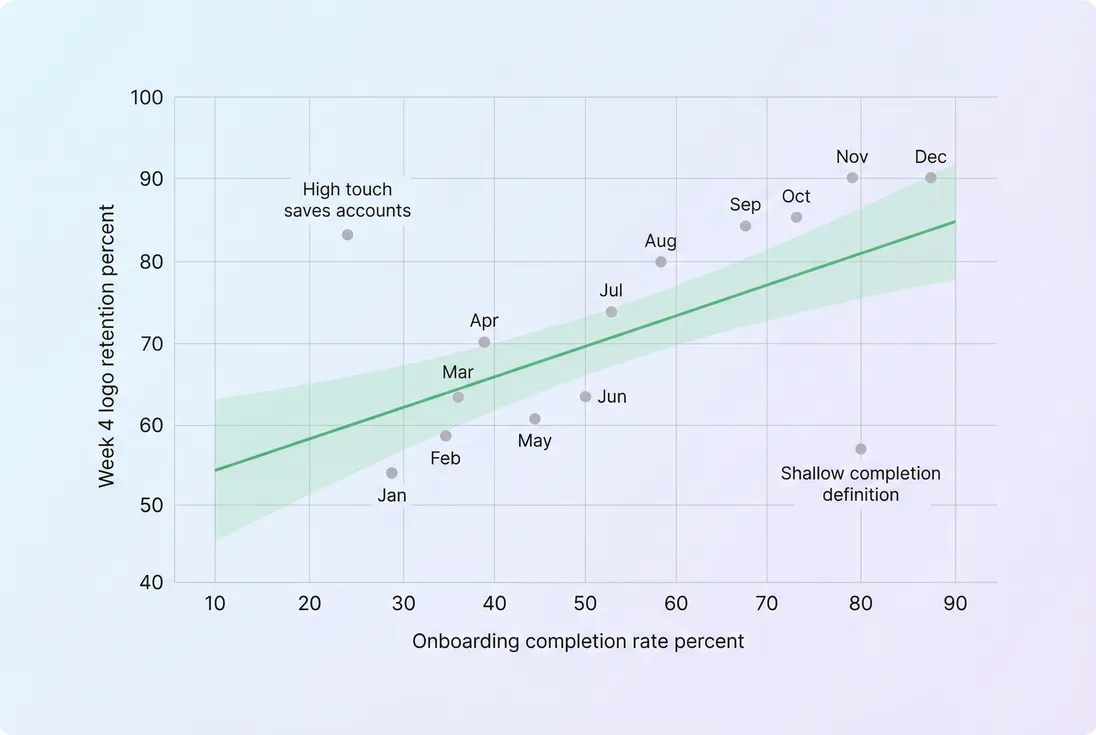

When it's healthy, you tend to see:

- Higher trial-to-paid and lead-to-customer conversion (connect to Conversion Rate)

- Faster payback (connect to CAC Payback Period)

- Better early retention and expansion later (connect to NRR (Net Revenue Retention) and GRR (Gross Revenue Retention))

What it does not guarantee:

- Long-term product-market fit

- Depth of adoption

- Expansion readiness

That's why you pair it with:

- Time to completion (median and distribution)

- Early retention (week 1 / week 4)

- Feature Adoption Rate for core features

- Cohorts to see if onboarding improvements stick (Cohort Analysis)

The Founder's perspective: If onboarding completion drops, assume revenue impact is coming—even if MRR looks fine this month. You're looking at the leading edge of next month's conversion and next quarter's churn.

Benchmarks founders can actually use

Benchmarks only matter if you compare apples to apples: same definition, same time window, same customer segment. Instead of one universal target, use ranges by onboarding complexity.

| Motion / complexity | Typical completion window | "Healthy" completion rate (rough range) |
|---|---|---|
| Self-serve, low setup (no integrations) | 1–3 days | 60–85% |
| Self-serve, moderate setup (import/integration) | 7–14 days | 40–70% |
| B2B mid-market, multi-user | 14–30 days | 30–60% |
| Enterprise, heavy security + services | 30–90 days | 20–50% |

How to use these:

- If you're below the range, you likely have blocking friction or low-intent acquisition.

- If you're above the range but retention is weak, your definition is likely too easy or value is not sustained.
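If it helps to make these two rules mechanical, a sketch like the following encodes the ranges from the table above and returns a first read. The dictionary keys and the `benchmark_read` helper are hypothetical names. The ranges themselves are rough, so treat the result as a prompt for investigation rather than a verdict.

```python
# Ranges copied from the benchmark table above: (low %, high %).
HEALTHY_RANGES = {
    "self_serve_low_setup": (60, 85),
    "self_serve_moderate_setup": (40, 70),
    "b2b_mid_market_multi_user": (30, 60),
    "enterprise_heavy_security": (20, 50),
}


def benchmark_read(motion: str, completion_pct: float, retention_is_weak: bool = False) -> str:
    """Compare a completion rate to the rough range for its motion."""
    low, high = HEALTHY_RANGES[motion]
    if completion_pct < low:
        return "below range: look for blocking friction or low-intent acquisition"
    if completion_pct > high and retention_is_weak:
        return "above range with weak retention: definition may be too easy"
    if completion_pct > high:
        return "above range"
    return "within range"


print(benchmark_read("self_serve_low_setup", 45))
print(benchmark_read("enterprise_heavy_security", 70, retention_is_weak=True))
```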

Where completion rate usually breaks

Most onboarding problems cluster into a few predictable buckets. Completion rate is useful because it tells you which bucket to investigate first.

1) Acquisition mismatch (wrong customers)

If you push volume through low-intent channels, onboarding completion falls even if the product is fine.

Signals:

- Completion rate drops while traffic and signups rise.

- Big differences by channel or campaign.

- High "start" volume but low engagement after first session.

What to do:

- Segment completion by channel, persona, and plan.

- Re-check positioning and qualification (especially in Product-Led Growth motions).

2) Time-to-value is too long

If value arrives late, customers abandon halfway—even if they could finish.

Signals:

- Median time to completion rising.

- Customers complete, but only after multiple days and multiple sessions.

- Strong intent segments still struggle.

What to do:

- Redesign the path to "first win" (tie to Time to Value (TTV)).

- Defer optional setup until after the first value moment.

3) A single step is a cliff

The funnel view matters because many onboarding flows fail at one step: data import, permissions, billing, integration, or teammate invites.

Signals:

- One step has an outsized drop-off.

- Support tickets cluster around one setup task.

- A "technical" step correlates with low completion for non-technical personas.

What to do:

- Instrument step-level conversion, not just completion.

- Make that step skippable, add a fast fallback, or provide templates/sample data.
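Step-level instrumentation can be as simple as counting how many accounts succeeded at each step and comparing neighbors. The sketch below uses hypothetical step names and counts; `step_conversions` is an illustrative helper, not part of any analytics library.

```python
# Hypothetical counts of accounts that *succeeded* at each step, in order.
funnel = [
    ("signed_up", 1000),
    ("created_workspace", 820),
    ("connected_data_source", 410),
    ("created_first_report", 350),
    ("invited_teammate", 210),
]


def step_conversions(steps):
    """Return (from_step, to_step, conversion_pct, accounts_lost) per transition."""
    rows = []
    for (prev_name, prev_count), (name, count) in zip(steps, steps[1:]):
        conversion = 100.0 * count / prev_count if prev_count else 0.0
        rows.append((prev_name, name, round(conversion, 1), prev_count - count))
    return rows


rows = step_conversions(funnel)
cliff = min(rows, key=lambda row: row[2])  # lowest step-to-step conversion

for prev_name, name, conversion, lost in rows:
    print(f"{prev_name} -> {name}: {conversion}% ({lost} accounts lost)")
print("Biggest cliff:", cliff[1])
```

In this made-up funnel, the data-source connection step converts only half of the accounts that reach it, so that is where to look first.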

4) Multi-user adoption stalls

In team products, one user can complete onboarding, but the account never truly launches.

Signals:

- First user completes, but no invites sent.

- Usage stays single-threaded.

- Renewal risk later despite "completed onboarding."

What to do:

- Add a collaboration milestone to the completion definition (invite, role assignment).

- Track invites as a separate step in the funnel.

5) Measurement is lying

Analytics errors routinely inflate or deflate the metric.

Common pitfalls:

- Counting users instead of accounts (or vice versa) without realizing it.

- Duplicated events (retries, multiple devices).

- Backfilled completions from old accounts.

- Missing events on mobile or certain browsers.

- "Completion" triggered by viewing a page rather than succeeding at an action.
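Several of these pitfalls can be guarded against when you derive completions from raw events. The sketch below assumes events arrive as `(account_id, event_name, succeeded, timestamp)` tuples and that `first_report_run` is the completion event; both are hypothetical. It keeps one completion per account, ignores page views, and ignores failed attempts.

```python
from datetime import datetime

# Hypothetical raw events: (account_id, event_name, succeeded, timestamp).
raw_events = [
    ("acct_1", "first_report_run", True, datetime(2024, 3, 2, 9, 0)),
    ("acct_1", "first_report_run", True, datetime(2024, 3, 2, 9, 1)),     # client retry
    ("acct_1", "first_report_run", True, datetime(2024, 3, 5, 14, 0)),    # second device
    ("acct_2", "report_page_viewed", True, datetime(2024, 3, 3, 11, 0)),  # view only
    ("acct_3", "first_report_run", False, datetime(2024, 3, 4, 8, 0)),    # failed run
]

COMPLETION_EVENT = "first_report_run"


def first_completion_per_account(events):
    """Map account_id -> earliest timestamp of a successful completion event."""
    first_seen = {}
    for account_id, name, succeeded, ts in events:
        if name != COMPLETION_EVENT or not succeeded:
            continue  # ignore page views and failed attempts
        if account_id not in first_seen or ts < first_seen[account_id]:
            first_seen[account_id] = ts
    return first_seen


completions = first_completion_per_account(raw_events)
print(completions)
```

Five raw events collapse to a single completed account here, which is the kind of gap that manual inspection tends to surface.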

A quick validation:

- Manually inspect 20–50 "completed" accounts in your CRM/data to confirm they truly did the milestone.

- Compare cohorts: if a product change supposedly improved completion overnight by 30 points, assume instrumentation first.

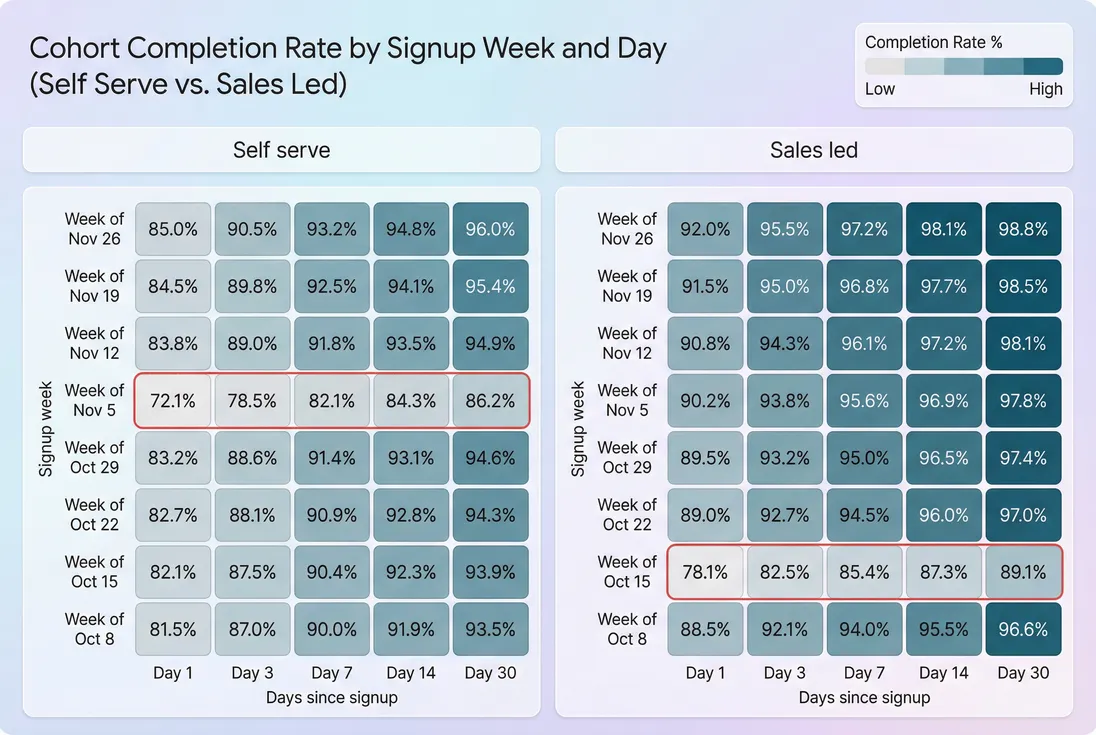

Cohort heatmaps show whether onboarding improvements persist and whether different go-to-market motions complete on different timelines.

How to interpret changes week to week

Treat this metric like a leading indicator with a short memory: it reacts quickly to product and funnel changes.

When completion rate increases

Ask "why did more accounts finish?"

Common causes with different implications:

- Real friction reduction (good): fewer steps, faster import, clearer next action.

- Better targeting (good): higher-intent customers entering onboarding.

- Looser definition (dangerous): you changed completion to something easier, inflating the metric.

- More hand-holding (mixed): CS or sales is pulling customers across the line; might not scale.

Sanity checks:

- Did time to completion also drop?

- Did trial-to-paid or early retention improve in the same cohorts?

- Did the improvement happen across segments or only one?

When completion rate decreases

Assume impact until proven otherwise. Completion rate declines often precede:

- Lower paid conversion in self-serve

- Lower activation and higher early churn (connect to Customer Churn Rate)

- Longer payback (connect to CAC Payback Period)

Triage in this order:

- Instrumentation (did events change?)

- Traffic mix (did acquisition shift?)

- Step-level funnel (where is the new drop?)

- Performance/bugs (is onboarding slower or failing?)

- Messaging (are expectations mismatched?)

The Founder's perspective: A 5-point drop in completion rate can be a bigger revenue warning than a 5-point drop in NRR—because it hits the newest customers first. Fix onboarding while the problem is still "just" an activation issue, not a churn narrative.

How founders use it to make decisions

Onboarding Completion Rate becomes powerful when it's tied to specific decisions, not just a dashboard.

Decide where product should invest

Use a step funnel + segment cuts to choose the highest-leverage work:

- If data connection is the cliff, prioritize reliability, OAuth edge cases, and clearer error states.

- If first artifact creation is the cliff, invest in templates, defaults, and better empty states.

- If invite teammate is the cliff, add role-based prompts and make solo value clearer.

A good rule: prioritize the step with the largest volume-weighted drop-off among your best-fit segments.

Decide when to use human onboarding

If completion is low for high-value segments but high for self-serve, that's a sign to:

- Add concierge onboarding for specific plans

- Standardize the kickoff path

- Measure completion with a longer window for enterprise

This is especially relevant in Sales-Led Growth where onboarding may be part product, part process.

Decide if trials are the right motion

In trial-led motions, completion rate is often the strongest predictor of conversion. If you can't get customers to complete the "first value" milestone inside the trial window, you'll fight uphill on conversion no matter how good the product is.

Use this with:

- Free Trial strategy (length, gating, nurture)

- Trial conversion analysis (a specific case of Conversion Rate)

Decide how to segment onboarding

Completion rate almost always varies by:

- Persona (ops vs developer)

- Industry (compliance-heavy vs light)

- Company size (solo vs team)

- Plan (free vs paid tiers)

- Acquisition channel

Segmentation prevents the classic mistake: "We improved onboarding" when you actually just acquired more easy-mode customers that week.
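A small worked example makes the mix-shift trap concrete. The segment names and counts below are invented. The point is that the blended rate can move while every segment's own rate stays flat.

```python
# Hypothetical weekly data: segment -> (accounts started, accounts completed).
last_week = {"solo": (200, 140), "team": (800, 320)}
this_week = {"solo": (600, 420), "team": (400, 160)}


def overall_rate(week):
    started = sum(s for s, _ in week.values())
    completed = sum(c for _, c in week.values())
    return round(100.0 * completed / started, 1)


def segment_rates(week):
    return {seg: round(100.0 * c / s, 1) for seg, (s, c) in week.items()}


print("overall:", overall_rate(last_week), "->", overall_rate(this_week))
print("by segment:", segment_rates(last_week), "->", segment_rates(this_week))
```

The blended rate rises from 46% to 58%, yet solo accounts complete at 70% and team accounts at 40% in both weeks. Nothing about onboarding improved; the mix of signups changed.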

Improving onboarding completion rate (practical playbook)

You usually improve completion by removing uncertainty, removing work, or removing failure states.

Reduce uncertainty: make the next step obvious

- Replace generic checklists with a single recommended path based on persona.

- Show progress, but keep it honest (don't mark steps complete on page views).

- Put the "why" next to the "what" (what value they'll get after this step).

Reduce work: shrink the path to first value

- Start with sample data when real data connection is hard.

- Offer templates that produce an output in minutes.

- Defer non-essential settings until after the first win.

Tie improvements to time-to-value so you don't just shift work later:

- Track median time to completion alongside completion rate.

- Use Time to Value (TTV) to keep the goal customer-centered.
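Reporting the two numbers together might look like the sketch below. It assumes you already have days-to-completion per account, with `None` for accounts that never finished. The sample values are invented.

```python
from statistics import median, quantiles

# Hypothetical days-to-completion per account; None = never finished.
days_to_complete = [0.2, 0.5, 1.0, 1.5, 2.0, 3.0, 6.0, 9.0, None, None]

finished = [d for d in days_to_complete if d is not None]
completion_pct = 100.0 * len(finished) / len(days_to_complete)
median_days = median(finished)
# quantiles(..., n=4) returns the three quartile cut points; the last is the 75th percentile.
p75_days = quantiles(finished, n=4)[2]

print(f"completion rate: {completion_pct:.0f}%")
print(f"median time to completion: {median_days} days")
print(f"75th percentile: {p75_days} days")
```

Looking at an upper percentile as well as the median helps show whether a long tail of slow completers is hiding behind a healthy-looking middle.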

Reduce failure states: make hard steps robust

- Improve error messaging for imports/integrations (actionable, not cryptic).

- Add retries and resilience (especially for flaky third-party APIs).

- Provide a "skip for now" path with reminders and a clear downside.

Add human help where it multiplies

For high-ACV segments, completion rate often improves most with:

- A live kickoff to unblock integration and permissions

- A 15-minute implementation review

- Office hours for the first two weeks

But measure whether it scales:

- Does completion increase and does early retention improve?

- Or are you just pushing more accounts across a shallow milestone?

Completion rate should predict early retention; when it doesn't, your completion milestone is likely too shallow or the onboarding motion is compensating with high-touch support.
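One simple way to check this is to compare early retention for accounts that completed onboarding against those that did not. The sketch below uses invented data and a hypothetical "still active in week 4" flag. It shows an association only and does not prove that completion causes retention.

```python
# Hypothetical accounts: (completed_onboarding, still_active_in_week_4).
accounts = (
    [(True, True)] * 45 + [(True, False)] * 15
    + [(False, True)] * 8 + [(False, False)] * 32
)


def retention_pct(rows, completed: bool) -> float:
    """Week-4 retention among accounts whose completion flag equals `completed`."""
    group = [active for done, active in rows if done == completed]
    return round(100.0 * sum(group) / len(group), 1) if group else 0.0


completers = retention_pct(accounts, completed=True)       # 45 of 60
non_completers = retention_pct(accounts, completed=False)  # 8 of 40
gap = round(completers - non_completers, 1)

print(f"week-4 retention, completers: {completers}%")
print(f"week-4 retention, non-completers: {non_completers}%")
print(f"gap: {gap} points")
```

A small gap between the two groups is a hint that the milestone is too shallow to separate accounts that found value from those that did not.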

A simple operating cadence (so it drives action)

If you want this metric to change behavior, run it like an operating metric:

- Weekly: completion rate and step funnel for the last 1–2 weeks of signups (fast detection).

- Monthly: cohort view of completion within 7/14/30 days to confirm improvements persist (Cohort Analysis).

- Quarterly: validate that completion predicts retention and revenue outcomes (connect to NRR (Net Revenue Retention), GRR (Gross Revenue Retention), and CAC Payback Period).
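The monthly cohort view can be sketched as a grid of signup month by completion window. The data shape (`signup_date`, `days_to_complete`) and the `cohort_grid` helper are assumptions for illustration. A real version would also exclude accounts too new to have had the full window.

```python
from collections import defaultdict
from datetime import date

# Hypothetical accounts: (signup_date, days_to_complete or None).
accounts = [
    (date(2024, 1, 3), 2), (date(2024, 1, 9), 12), (date(2024, 1, 20), None),
    (date(2024, 1, 28), 25), (date(2024, 2, 2), 5), (date(2024, 2, 11), 6),
    (date(2024, 2, 15), 20), (date(2024, 2, 27), None),
]
WINDOWS = (7, 14, 30)


def cohort_grid(rows):
    """Return {signup month: {window_days: completion %}}."""
    cohorts = defaultdict(list)
    for signup, days in rows:
        cohorts[signup.strftime("%Y-%m")].append(days)
    grid = {}
    for month, values in sorted(cohorts.items()):
        grid[month] = {
            w: round(100.0 * sum(1 for d in values if d is not None and d <= w) / len(values), 1)
            for w in WINDOWS
        }
    return grid


for month, row in cohort_grid(accounts).items():
    print(month, row)
```

In this toy data, 7-day completion doubles from January to February while 30-day completion is unchanged, which would suggest accounts are finishing sooner rather than more accounts finishing overall.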

The goal is not "make the number go up." The goal is: more customers reach value fast enough that they convert, stay, and expand.

Frequently asked questions

What is a good onboarding completion rate?

Good depends on complexity and your definition of completion. For simple self-serve products, 60 to 85 percent within 24 to 72 hours is common. For complex B2B onboarding with integrations and multiple users, 25 to 60 percent within 14 to 30 days can be healthy. Trend and segmentation matter more than one target.

Should we measure completion by user or by account?

Default to account-level for B2B, because revenue, churn, and renewals happen at the account. Track user-level as a diagnostic layer to see if the first user succeeds but the team never adopts. If you only measure user completion, you can overestimate true activation in multi-seat accounts.

What time window should we use?

Pick a window that matches how quickly customers should reach first value. For a free trial, align it to the trial length and to Time to Value. For paid onboarding, pick something operationally meaningful like 7, 14, or 30 days. Always report both completion rate and median time to completion.

What if completion rate is high but retention is still weak?

Then your completion definition is probably too shallow or your product value is not sustained. Check whether completed accounts actually adopt the core feature set using Feature Adoption Rate and usage frequency. Also segment by plan and acquisition channel. Many teams mistake finishing a checklist for reaching real value.

How do we use this metric to prioritize product work?

Use it to rank onboarding bottlenecks by business impact. If drop-off happens before data import, prioritize import reliability and time. If it happens at invites, invest in team activation flows. Validate improvements by running cohorts and comparing completion rate and downstream conversion, churn, and CAC payback for those cohorts.