
NPS (net promoter score)

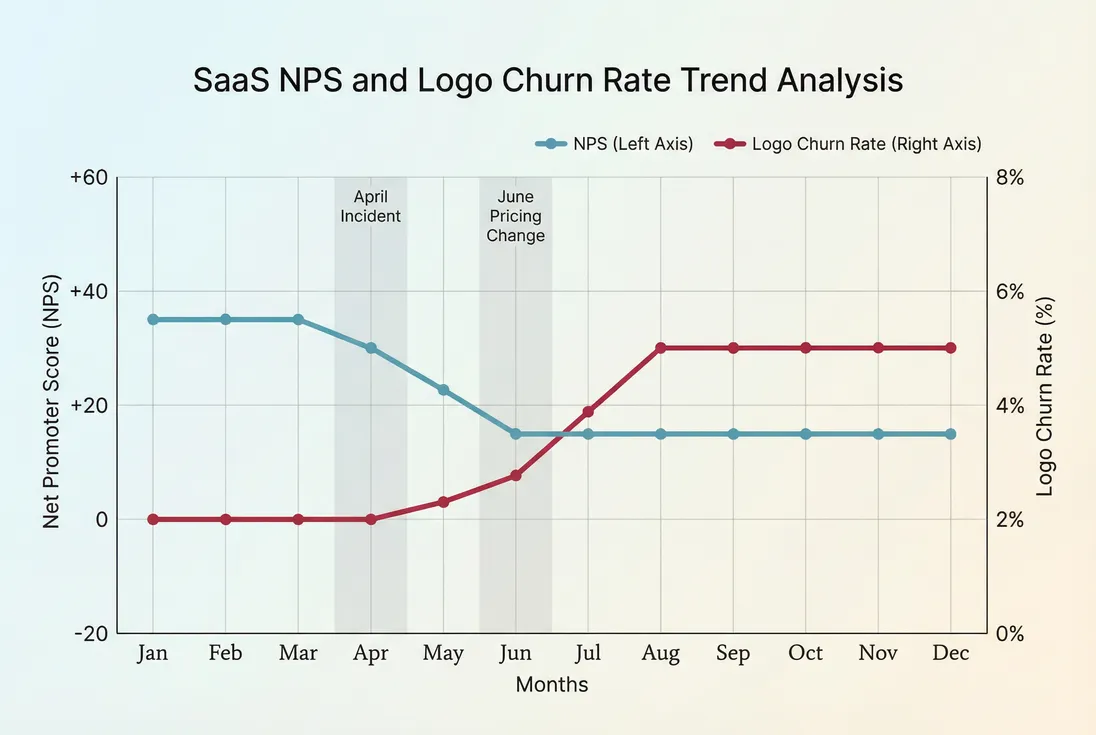

Founders care about NPS because it often signals future churn and stalled expansion before revenue dashboards do. A small rise in detractors can quietly turn into renewal resistance, pricing pushback, and negative word-of-mouth, especially in categories where trust and reliability matter.

Net Promoter Score (NPS) is a simple loyalty metric based on one question: "How likely are you to recommend our product to a friend or colleague?" Respondents answer on a 0–10 scale, and you convert those answers into a single score from -100 to +100.

What NPS reveals (and what it doesn't)

NPS is not a revenue metric. It's a signal—useful when you treat it like an early-warning system and pair it with retention metrics.

In practice, NPS is most valuable for:

- Churn risk detection: Rising detractors often precede increases in Logo Churn and Customer Churn Rate.

- Expansion readiness: Promoters are more likely to adopt more seats, upgrade, and champion you internally—conditions that show up later in NRR (Net Revenue Retention).

- Positioning reality check: If messaging is strong but NPS is weak, you may be overpromising or attracting the wrong customers.

NPS is weak when you use it as:

- A vanity KPI with no follow-up

- A universal benchmark across very different segments (SMB vs enterprise, self-serve vs sales-led)

- A substitute for behavioral metrics like activation and usage

The Founder's perspective

If NPS doesn't change what you do in the next two weeks—what you fix, who you call, or what you stop selling—it's not a metric. It's a scoreboard.

How NPS is calculated

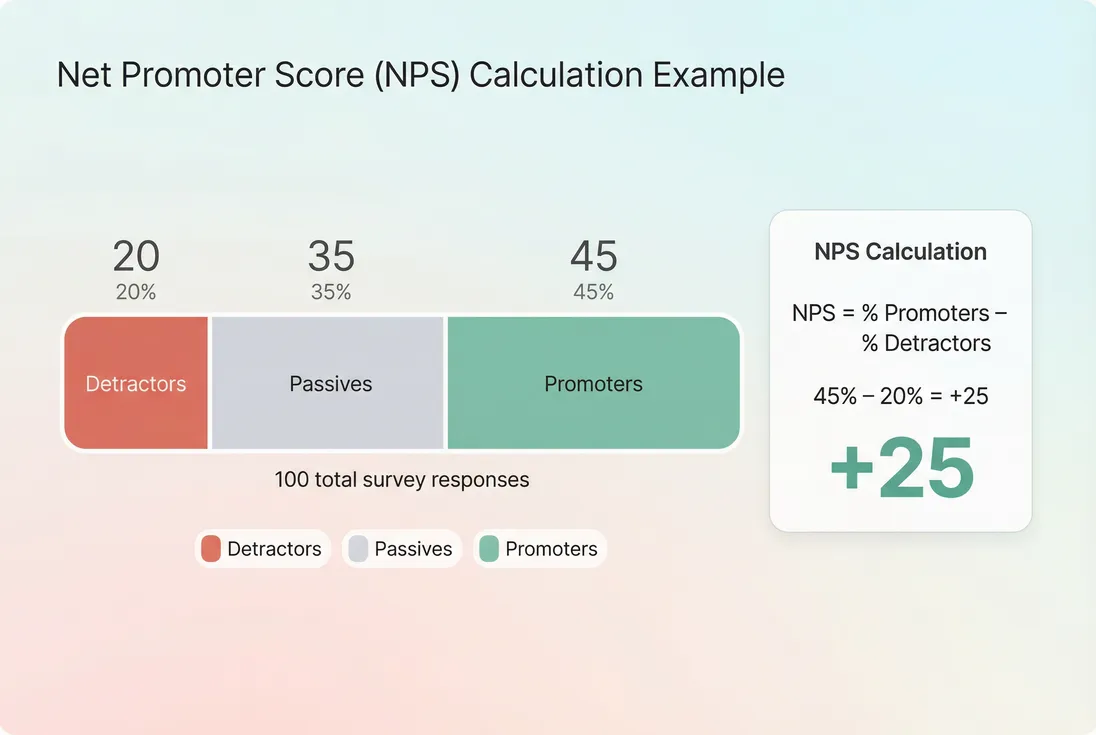

NPS is built from three groups:

- Promoters: 9–10

- Passives: 7–8

- Detractors: 0–6

Passives count toward the total number of responses but are neither added nor subtracted, so they affect the score only by diluting the promoter and detractor percentages.

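The formula (equivalently, % promoters minus % detractors):

$$\text{NPS} = 100 \times \frac{\text{promoters} - \text{detractors}}{\text{total responses}}$$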

Example: 100 total responses

- 45 promoters

- 35 passives

- 20 detractors

NPS = (45% - 20%) = +25

How NPS is derived: passives don't move the score directly, so your score is mainly a tug-of-war between promoters and detractors.
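The calculation is easy to reproduce from raw answers. The Python sketch below is a minimal illustration. It assumes the 0–10 answers are available as a plain list of integers, and `classify` and `nps` are hypothetical helper names, not part of any survey tool's API. It reproduces the 45/35/20 worked example.

```python
from collections import Counter


def classify(score: int) -> str:
    """Map a 0-10 answer to its NPS group."""
    if not 0 <= score <= 10:
        raise ValueError(f"score must be between 0 and 10, got {score}")
    if score >= 9:
        return "promoter"
    if score >= 7:
        return "passive"
    return "detractor"


def nps(scores: list[int]) -> float:
    """Return NPS on the -100..+100 scale: % promoters minus % detractors."""
    if not scores:
        raise ValueError("need at least one response")
    groups = Counter(classify(s) for s in scores)
    total = len(scores)  # passives are part of this denominator
    return 100 * (groups["promoter"] - groups["detractor"]) / total


# Worked example: 45 promoters, 35 passives, 20 detractors (100 responses).
example = [10] * 45 + [8] * 35 + [3] * 20
print(nps(example))  # 25.0
```

Because passives sit only in the denominator, adding passive responses pulls the score toward zero without ever changing its sign.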

A key nuance: NPS is a percentage difference

Because NPS is a difference of two percentages, it can swing quickly when:

- Response volume is low

- A single unhappy cohort suddenly responds (e.g., after an outage)

- Your sampling changes (e.g., you shift to surveying only admins)

This is why founders should look at (1) score, (2) response count, and (3) segment mix together.
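To see how much response volume matters, you can attach an approximate margin of error to the score. One way to do this, used here as an assumption rather than a standard, is to code each response as +1 (promoter), 0 (passive), or -1 (detractor). NPS is then 100 times the mean code, and a normal approximation gives a rough 95% interval. The function name is hypothetical, and the approximation is itself rough at small sample sizes.

```python
import math


def nps_with_margin(promoters: int, passives: int, detractors: int,
                    z: float = 1.96) -> tuple[float, float]:
    """Return (NPS, approximate margin of error), both in NPS points.

    Each response is coded +1 (promoter), 0 (passive) or -1 (detractor).
    NPS is 100 x the mean code; the margin uses a normal approximation.
    """
    n = promoters + passives + detractors
    if n == 0:
        raise ValueError("need at least one response")
    p, d = promoters / n, detractors / n
    mean = p - d
    variance = (p + d) - mean ** 2  # E[x^2] - E[x]^2 for the +1/0/-1 codes
    margin = z * math.sqrt(variance / n)
    return 100 * mean, 100 * margin


# Same 45/35/20 mix at three response volumes.
for counts in [(9, 7, 4), (45, 35, 20), (450, 350, 200)]:
    score, margin = nps_with_margin(*counts)
    print(f"n={sum(counts):>4}: NPS {score:+.0f} +/- {margin:.1f}")
```

With 20 responses the interval is roughly ±34 points, so an 8-point move is well inside the noise. At 100 responses it is about ±15, and at 1,000 responses about ±5.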

When to measure NPS

There are two common NPS motions. Mixing them without labeling is a classic way to confuse your team.

Relationship NPS (rNPS)

This is the "overall" survey about the product relationship. Typical cadence:

- Quarterly for fast-changing products

- Twice per year for stable products or enterprise accounts

Best for: strategy, positioning, long-term sentiment trends.

Transactional NPS (tNPS)

This is triggered after a specific event:

- Onboarding complete

- Ticket resolved

- Renewal QBR

- Major feature shipped

Best for: diagnosing a workflow, team, or lifecycle stage.

If you're early-stage, the most actionable setup is often:

- rNPS quarterly for everyone active

- tNPS after onboarding and after high-severity support cases
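One way to keep the two motions apart is to store a survey-type label with every response and compute scores per label instead of pooling them. This is a sketch. The `Response` record and the label strings (`"rNPS"`, `"tNPS:onboarding"`) are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Response:
    score: int        # 0-10 answer
    survey_type: str  # hypothetical labels, e.g. "rNPS" or "tNPS:onboarding"


def nps(scores: list[int]) -> float:
    promoters = sum(s >= 9 for s in scores)
    detractors = sum(s <= 6 for s in scores)
    return 100 * (promoters - detractors) / len(scores)


def nps_by_survey_type(responses: list[Response]) -> dict[str, tuple[float, int]]:
    """Return {survey_type: (NPS, response count)} without mixing motions."""
    groups: dict[str, list[int]] = defaultdict(list)
    for r in responses:
        groups[r.survey_type].append(r.score)
    return {label: (nps(scores), len(scores)) for label, scores in groups.items()}


responses = [
    Response(9, "rNPS"), Response(10, "rNPS"), Response(7, "rNPS"), Response(3, "rNPS"),
    Response(10, "tNPS:onboarding"), Response(6, "tNPS:onboarding"),
    Response(5, "tNPS:onboarding"),
]
for label, (score, n) in nps_by_survey_type(responses).items():
    print(f"{label}: NPS {score:+.0f} (n={n})")
```

Pooled together, these seven responses would score 0. That number describes neither the relationship survey (+25) nor the onboarding survey (about -33).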

The Founder's perspective

If you have to pick one, start with onboarding-triggered NPS. Founders usually learn faster from "Why did a new customer become a detractor in week two?" than from a quarterly average that hides the cause.

What moves NPS in SaaS

NPS is a summary of many small product and service experiences. In SaaS, the most common drivers are predictable—and tied to whether the customer is getting value with low friction.

1) Time-to-value and activation

If customers don't reach value quickly, you'll see:

- More 0–6 scores (confusion, regret)

- More 7–8 scores (uncertain value)

- Fewer 9–10 scores (no "aha")

This is where linking NPS to onboarding metrics helps, like Onboarding Completion Rate and Time to Value (TTV).

Founder takeaway: Improvements in activation often lift NPS and reduce early churn—but the NPS lift may appear first.

2) Reliability, support quality, and trust

Even strong products lose promoters when:

- Uptime is inconsistent (see Uptime and SLA)

- Support is slow or repetitive

- Bugs break core workflows

A single incident can temporarily increase detractors. The important part is whether detractors stay elevated after the incident is resolved.

3) Pricing and packaging surprises

Pricing changes can hurt NPS even when churn doesn't move immediately. Watch for:

- NPS drop concentrated in specific plans

- NPS drop among older cohorts (grandfathered pricing removed)

- More "value" complaints in verbatims

To interpret this well, pair sentiment with retention and revenue metrics like ARPA (Average Revenue Per Account) and ASP (Average Selling Price).

4) Wrong customers (ICP mismatch)

A common pattern: growth looks fine, but NPS deteriorates as you broaden targeting. That's often an ICP problem, not a product problem.

Signs:

- NPS falls primarily in a new acquisition channel

- Detractors cite missing features that your roadmap shouldn't chase

- Passives dominate because outcomes aren't aligned with your product's strengths

This is one reason NPS should be segmented by acquisition source, plan, and industry whenever possible.

How to interpret changes (without overreacting)

Founders usually ask: "NPS moved by 8 points—should I panic?" The answer depends on where the move came from.

Decompose the change

An NPS drop can happen in three ways:

- More detractors (worst; churn risk rises)

- Fewer promoters (growth/referral potential weakens)

- Both (often a major product or reliability issue)

In operational terms:

- Detractors rising → prioritize retention work, proactive outreach, and fixing the root cause.

- Promoters falling → value may be plateauing; invest in "power user" outcomes, not just bug fixes.
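Decomposition is simple arithmetic: the change in NPS equals the change in promoter share minus the change in detractor share. The sketch below assumes you have promoter, passive, and detractor counts for two periods. The helper names and the example counts are hypothetical.

```python
def shares(promoters: int, passives: int, detractors: int) -> tuple[float, float]:
    """Return (% promoters, % detractors) of all responses."""
    n = promoters + passives + detractors
    return 100 * promoters / n, 100 * detractors / n


def decompose_change(before: tuple[int, int, int],
                     after: tuple[int, int, int]) -> dict[str, float]:
    """Split an NPS change into its promoter and detractor components."""
    p0, d0 = shares(*before)
    p1, d1 = shares(*after)
    return {
        "nps_change": (p1 - d1) - (p0 - d0),
        "promoter_change": p1 - p0,   # negative: referral potential weakening
        "detractor_change": d1 - d0,  # positive: churn risk rising
    }


# Hypothetical 8-point drop: 45/35/20 last quarter, 44/29/27 this quarter.
print(decompose_change((45, 35, 20), (44, 29, 27)))
# {'nps_change': -8.0, 'promoter_change': -1.0, 'detractor_change': 7.0}
```

Here 7 of the 8 points come from new detractors, which points to retention work rather than power-user investment.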

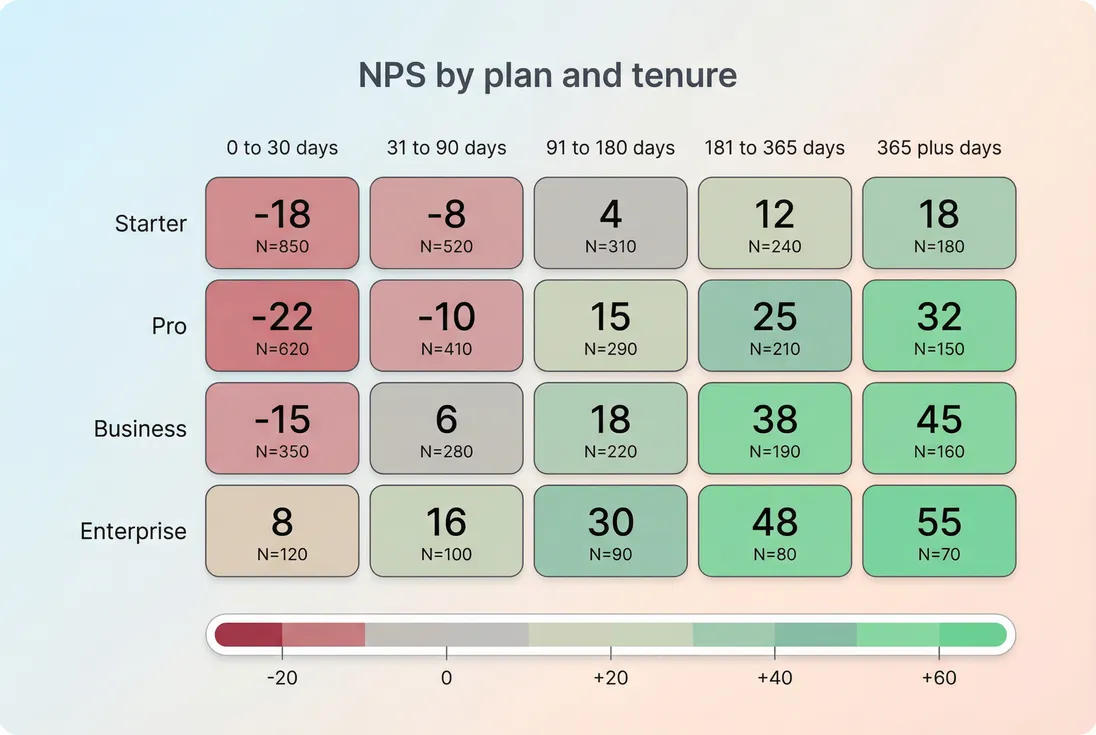

Segment before you decide

Overall NPS can be stable while one segment is collapsing. At a minimum, most founders should review NPS by:

- Plan or tier

- Tenure (0–30 days, 31–180 days, 181+ days)

- Company size (SMB vs mid-market vs enterprise)

- Region (if support coverage varies)

- Primary use case (if you serve multiple)

Segmented NPS prevents false alarms: a flat overall score can hide a serious onboarding problem in one plan or cohort.
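Segment reporting is a group-by over responses. The sketch below assumes each response is a (segment, score) pair, where the segment is whichever dimension you are reviewing (plan, tenure band, company size). It reports the response count and mix next to each score, so a shift in who responded stays visible. The function name and data are hypothetical.

```python
from collections import defaultdict


def nps(scores: list[int]) -> float:
    promoters = sum(s >= 9 for s in scores)
    detractors = sum(s <= 6 for s in scores)
    return 100 * (promoters - detractors) / len(scores)


def segment_report(rows: list[tuple[str, int]]) -> dict[str, dict[str, float]]:
    """Return {segment: {"n", "mix_pct", "nps"}} plus an "overall" row."""
    by_segment: dict[str, list[int]] = defaultdict(list)
    for segment, score in rows:
        by_segment[segment].append(score)
    total = len(rows)
    report = {
        seg: {"n": len(s), "mix_pct": 100 * len(s) / total, "nps": nps(s)}
        for seg, s in sorted(by_segment.items())
    }
    report["overall"] = {"n": total, "mix_pct": 100.0,
                         "nps": nps([score for _, score in rows])}
    return report


rows = [("Starter", 10), ("Starter", 9), ("Starter", 3), ("Starter", 4),
        ("Pro", 10), ("Pro", 9), ("Pro", 10), ("Pro", 8)]
for segment, stats in segment_report(rows).items():
    print(f"{segment:>8}: NPS {stats['nps']:+.1f}  n={stats['n']}  mix={stats['mix_pct']:.0f}%")
```

An overall +37.5 looks healthy while the Starter plan sits at 0.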

Tie NPS movement to retention reality

NPS should not be managed in isolation. Use it to form a hypothesis, then validate against:

- Cohort Analysis (are newer cohorts retaining worse?)

- GRR (Gross Revenue Retention) (are existing customers shrinking/churning?)

- Net MRR Churn Rate (are expansion gains being offset?)

If NPS drops but retention is stable, you may be seeing:

- Temporary noise (incident week)

- A vocal segment with low revenue weight

- Early sentiment that hasn't yet hit renewals (common in annual plans)

If NPS drops and retention also worsens, treat NPS as confirmation—move fast.
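The readings above can be written down as a simple rule so every review applies them the same way. This is only a sketch. The thresholds are hypothetical placeholders to tune against your own noise level and renewal cycle, and the GRR change is assumed to be measured in percentage points over a comparable window.

```python
def read_signal(nps_change: float, grr_change_pts: float,
                nps_drop: float = -5.0, grr_drop: float = -1.0) -> str:
    """Combine an NPS move with a GRR move into a next step.

    nps_drop and grr_drop are hypothetical thresholds, in points.
    """
    nps_down = nps_change <= nps_drop
    retention_down = grr_change_pts <= grr_drop
    if nps_down and retention_down:
        return "confirmed: move fast on the segments driving the drop"
    if nps_down:
        return "hypothesis: rule out incident noise, low-revenue segments, renewals not yet due"
    if retention_down:
        return "retention worsening without an NPS signal: check who the survey samples"
    return "no action from this check"


print(read_signal(-8, -2.5))
print(read_signal(-8, 0.0))
```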

What is a "good" NPS in SaaS?

Benchmarks are widely published, widely misused, and often not comparable (different survey timing, different sampling, different definitions). Treat external benchmarks as context, not targets.

A practical way to think about it:

| NPS range | Typical interpretation | What founders do next |
|---|---|---|
| < 0 | More detractors than promoters | Stop scaling acquisition; fix core experience and support; review ICP |
| 0 to 20 | Neutral to mildly positive | Improve onboarding and reliability; invest in reducing friction |
| 20 to 50 | Strong for many B2B SaaS | Systematize advocacy, case studies, referrals; protect reliability |
| 50+ | Exceptional in many contexts | Don't assume you're "done"; validate by segment and track sustainability |

Two important founder rules:

- Trend beats level. A steady climb from +5 to +25 is often more meaningful than being stuck at +40 for a year.

- Distribution beats average. 20% detractors is a different world than 5% detractors, even if the final score looks "fine."
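Two response mixes can produce the same score. The sketch below uses a hypothetical helper that reports the full split alongside NPS, and compares the earlier worked example with a hypothetical lukewarm mix.

```python
def summarize(promoters: int, passives: int, detractors: int) -> dict[str, float]:
    """Return NPS together with the full promoter/passive/detractor split (%)."""
    n = promoters + passives + detractors
    return {
        "nps": 100 * (promoters - detractors) / n,
        "promoter_pct": 100 * promoters / n,
        "passive_pct": 100 * passives / n,
        "detractor_pct": 100 * detractors / n,
    }


polarized = summarize(45, 35, 20)  # the worked example from the calculation section
lukewarm = summarize(30, 65, 5)
print(polarized["nps"], polarized["detractor_pct"])  # 25.0 20.0
print(lukewarm["nps"], lukewarm["detractor_pct"])    # 25.0 5.0
```

Both mixes read +25. One has four times as many detractors, and the other has few advocates and a large passive middle.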

The Founder's perspective

Don't celebrate "high NPS" if you're still seeing rising Logo Churn in the cohorts that matter. NPS is a story you tell yourself; churn is the story customers tell with their wallets.

How founders use NPS to drive decisions

NPS becomes powerful when you treat it like an operating loop:

Step 1: Collect the score and the why

Always pair the 0–10 question with a follow-up:

- "What's the primary reason for your score?"

- Optional: "What would you change to make this a 9 or 10?"

The score tells you who to look at; the verbatims tell you what to fix.

Step 2: Create a simple taxonomy

You don't need an academic model. Founders move faster with 8–12 consistent tags, such as:

- Onboarding confusion

- Missing integration

- Performance or reliability

- Reporting gaps

- Billing or pricing

- Support responsiveness

- Permissions or admin controls

- UX friction

Then track tag frequency by segment over time. This is where NPS connects naturally to Churn Reason Analysis: the tags you see in detractor feedback often match the reasons you'll later hear in cancellation flows.
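Tag tracking can start as a counter keyed by period and segment. The sketch below assumes detractor verbatims have already been tagged by hand. It rejects any tag outside the fixed taxonomy listed above, so the tags stay consistent over time. The function name and the example rows are hypothetical.

```python
from collections import Counter, defaultdict

# Fixed taxonomy from the list above; rejecting unknown tags keeps tagging consistent.
TAXONOMY = {
    "Onboarding confusion", "Missing integration", "Performance or reliability",
    "Reporting gaps", "Billing or pricing", "Support responsiveness",
    "Permissions or admin controls", "UX friction",
}


def tag_frequency(rows: list[tuple[str, str, str]]) -> dict[tuple[str, str], Counter]:
    """Count detractor tags per (period, segment); rows are (period, segment, tag)."""
    counts: dict[tuple[str, str], Counter] = defaultdict(Counter)
    for period, segment, tag in rows:
        if tag not in TAXONOMY:
            raise ValueError(f"unknown tag {tag!r}; add it to the taxonomy first")
        counts[(period, segment)][tag] += 1
    return counts


# Hypothetical tagged detractor verbatims.
rows = [
    ("Q1", "SMB", "Onboarding confusion"), ("Q1", "SMB", "Onboarding confusion"),
    ("Q1", "SMB", "Billing or pricing"),
    ("Q2", "SMB", "Onboarding confusion"), ("Q2", "SMB", "Billing or pricing"),
    ("Q2", "SMB", "Billing or pricing"), ("Q2", "SMB", "Billing or pricing"),
]
counts = tag_frequency(rows)
print(counts[("Q1", "SMB")].most_common(1))  # [('Onboarding confusion', 2)]
print(counts[("Q2", "SMB")].most_common(1))  # [('Billing or pricing', 3)]
```

A theme that jumps between periods within one segment (billing, in this example) is the kind of signal worth comparing against cancellation reasons.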

Step 3: Close the loop (especially with detractors)

Operationally, treat detractors as a queue:

- Respond quickly (same week)

- Confirm the issue and expected timeline

- Offer a workaround if possible

- Document outcomes (resolved, pending, churned anyway)

This is less about "saving NPS" and more about reducing preventable churn.
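A detractor queue needs little more than a record per response and a due date. The sketch below is an illustration under stated assumptions. "Same week" is read as "within 7 days of the response." The outcome values come from the list above, plus a hypothetical `"open"` state for cases nobody has touched yet. The `DetractorCase` record and the account IDs are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Assumption: "same week" is read as "within 7 days of the response".
RESPONSE_WINDOW = timedelta(days=7)
OUTCOMES = {"open", "pending", "resolved", "churned anyway"}


@dataclass
class DetractorCase:
    account: str
    score: int
    received: date
    contacted: Optional[date] = None
    outcome: str = "open"

    def __post_init__(self) -> None:
        if not 0 <= self.score <= 6:
            raise ValueError("only detractor scores (0-6) belong in this queue")
        if self.outcome not in OUTCOMES:
            raise ValueError(f"unknown outcome {self.outcome!r}")

    @property
    def due(self) -> date:
        return self.received + RESPONSE_WINDOW


def overdue(queue: list[DetractorCase], today: date) -> list[DetractorCase]:
    """Cases not yet contacted whose response window has passed, oldest first."""
    late = [c for c in queue if c.contacted is None and today > c.due]
    return sorted(late, key=lambda c: c.received)


queue = [
    DetractorCase("acct-101", 3, date(2024, 3, 1)),
    DetractorCase("acct-102", 5, date(2024, 3, 6)),
    DetractorCase("acct-103", 2, date(2024, 3, 1),
                  contacted=date(2024, 3, 2), outcome="pending"),
]
print([c.account for c in overdue(queue, today=date(2024, 3, 9))])  # ['acct-101']
```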

Step 4: Validate impact with retention metrics

After you ship fixes aimed at top NPS drivers, look for:

- Reduced detractor rate in the impacted segment

- Better retention in the next cohort window (use Cohort Analysis)

- Improvement in Customer Health Score if you use it operationally

- Downstream lift in NRR (Net Revenue Retention) for accounts where expansion depends on advocacy

NPS often moves before churn: a sustained rise in detractors can foreshadow renewal problems a month or two later.

Common ways NPS breaks

These are the failure modes that make NPS misleading—and how to prevent them.

Sampling drift

If you change who receives the survey (admins vs end users, power users vs all users), your NPS can move even if customer sentiment didn't.

Fix: Lock the sampling rule and report response counts and segment mix alongside the score.

Incentivized responses

Discounts or gifts can inflate promoter rates temporarily and reduce honest feedback.

Fix: If you must incentivize, use neutral incentives (e.g., donation regardless of score) and keep it consistent.

Over-indexing on passives

Passives don't affect NPS directly, but they matter strategically: passives often renew but don't advocate, and they can convert to detractors after a couple of bad experiences.

Fix: Track passive share as a secondary KPI. A stable NPS with rising passives can still be a warning sign.
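A passive-share check can sit next to the headline score. The sketch below flags a period where NPS barely moves but the passive share climbs. Both thresholds are hypothetical and measured in points, and the function name is illustrative.

```python
def passive_warning(prev: tuple[int, int, int], curr: tuple[int, int, int],
                    nps_tolerance: float = 3.0, passive_rise: float = 5.0) -> bool:
    """Flag a period where NPS looks stable but the passive share is climbing.

    prev and curr are (promoters, passives, detractors) counts; both
    thresholds are hypothetical and measured in points.
    """
    def nps_and_passive_pct(p: int, pa: int, d: int) -> tuple[float, float]:
        n = p + pa + d
        return 100 * (p - d) / n, 100 * pa / n

    nps0, passive0 = nps_and_passive_pct(*prev)
    nps1, passive1 = nps_and_passive_pct(*curr)
    nps_stable = abs(nps1 - nps0) <= nps_tolerance
    return nps_stable and (passive1 - passive0) >= passive_rise


print(passive_warning((45, 35, 20), (40, 45, 15)))  # True: NPS +25 both times, passives 35% -> 45%
print(passive_warning((45, 35, 20), (46, 35, 19)))  # False: passive share unchanged
```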

Treating NPS like a performance review

If teams fear consequences, they will optimize the score (timing, selection) rather than improve the product.

Fix: Use NPS as an improvement tool: tie it to learning and follow-up, not quotas.

A practical NPS operating cadence

If you want a simple founder-friendly cadence that doesn't create survey fatigue:

- Weekly: Review new detractors; assign follow-ups; tag root causes.

- Monthly: Review NPS by plan, tenure, and use case; compare to churn and support drivers.

- Quarterly: Run relationship NPS; pick the top two detractor themes to address; publish "what we heard / what we're doing" internally.

Pair NPS reviews with retention reviews—especially GRR (Gross Revenue Retention) and NRR (Net Revenue Retention)—so sentiment and financial reality stay connected.

Quick decision guide

Use NPS to decide where to focus, not to declare success.

- If detractors rise in a high-ARR segment: prioritize retention work immediately.

- If promoters fall after a pricing change: revisit packaging, value communication, and rollout sequencing.

- If NPS is high but churn is rising: your survey may be sampling the happiest users, not the at-risk buyers.

- If NPS is low but improving: validate with cohorts; you may be fixing the right things.

When NPS becomes a consistent feedback-to-action loop, it stops being a vanity number and starts functioning like a lightweight early-warning system for retention.

Frequently asked questions

What is a good NPS for SaaS?

For B2B SaaS, many healthy products land in the +20 to +50 range, but the "good" bar depends on category, price point, and buyer type. Use comparable competitors as context and your own trend as the main benchmark. A steadily rising NPS that aligns with improving retention is more meaningful than a single score.

How many responses do you need?

Treat small samples as directional. If you only get a few dozen responses, a handful of detractors can swing the score. Aim for consistent monthly volume and stable sampling across segments. More important than a strict minimum is measuring the same way each time and tracking segment-level movement, not just the overall score.

How does NPS compare to CSAT and CES?

NPS is best for relationship-level sentiment and referral intent. CSAT (Customer Satisfaction Score) is better for specific interactions (onboarding, support tickets). CES (Customer Effort Score) is strongest when you suspect friction in a workflow. Many SaaS teams use all three: NPS quarterly for strategy, CSAT after key events, and CES to diagnose product and support effort drivers.

How often should you run NPS surveys?

Many SaaS companies run relationship NPS quarterly or twice a year and avoid over-surveying. If your product is changing rapidly, quarterly can be justified, but only if you close the loop on feedback. Also consider event-triggered NPS at key milestones, such as after onboarding or after a renewal conversation, for targeted insights.

What should you do when NPS drops suddenly?

First, verify it's not a sampling or timing shift (different segments responded, fewer responses, or an outage week). Then break the change down by segment and by verbatim themes. Treat detractors as a retention-risk queue and run fast remediation. Finally, validate impact by tracking downstream changes in churn and expansion, not just a recovered NPS.