Feature adoption rate

Shipping features does not create revenue. Customers using features does. Feature adoption rate is the fastest way to tell whether a launch is actually changing behavior—or just adding complexity to your product.

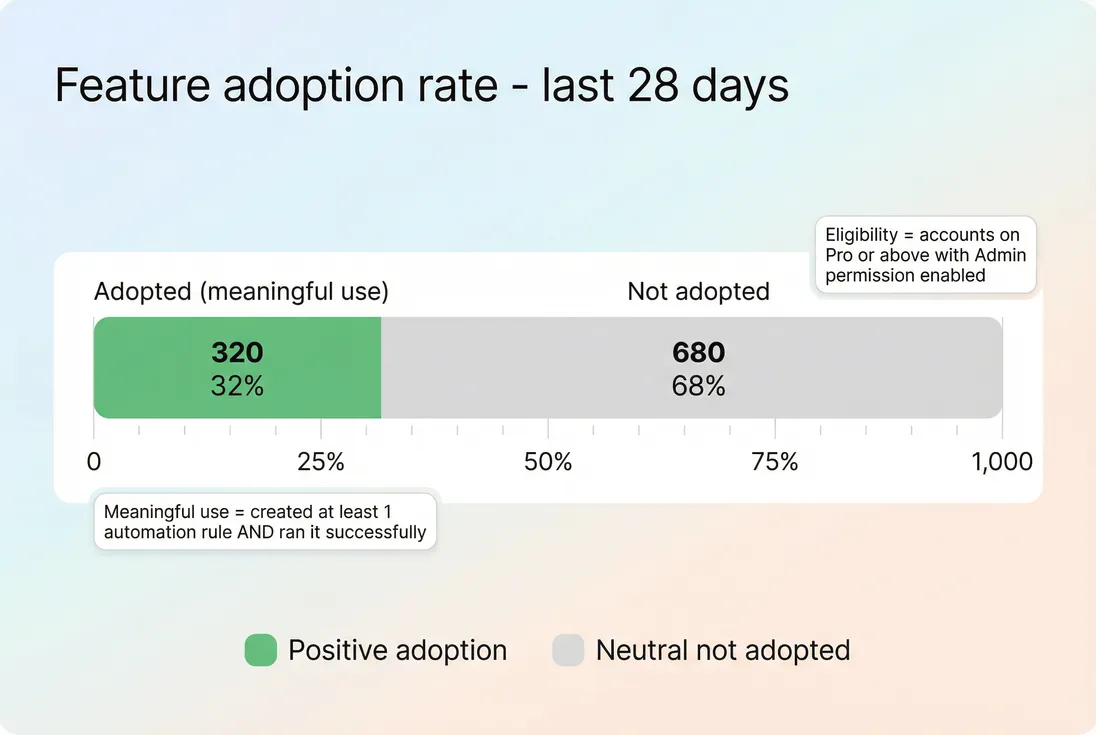

Feature adoption rate is the percentage of eligible customers (accounts or users) who meaningfully use a specific feature during a defined time period.

Adoption only becomes actionable when you define both eligibility and meaningful use—otherwise the percentage can look "good" while customers still fail to get value.

What this metric reveals

Founders use feature adoption rate to answer one core question: Is this feature becoming part of the customer's workflow?

That turns into practical decisions:

- Roadmap: Double down, iterate, or sunset.

- Onboarding: What to teach first, and to whom.

- Packaging: Which features belong in which plans, and what is actually "premium."

- Retention work: Which behaviors predict renewal risk or expansion potential.

Adoption is also a sanity check on your internal narratives. If the team believes a feature is "the differentiator," adoption rate tells you whether customers agree.

The Founder's perspective

If a feature is strategically important but adoption is flat, the problem is almost never "we need more customers." It is usually one of: wrong audience, unclear value, too much friction, or the feature is not where the workflow actually happens.

Who should adopt it

Before you calculate anything, decide the population who should reasonably use the feature. This is the most common source of bad adoption metrics.

Define the eligible population

Eligibility typically depends on:

- Plan / packaging (Free vs Pro vs Enterprise)

- Permissions (Admin-only, role-gated features)

- Use case fit (Only relevant to teams running integrations, only relevant to multi-seat accounts)

- Lifecycle stage (Trial, onboarding, active customer, renewal window)

If you skip this and use all customers as the denominator, adoption will look artificially low and you will chase the wrong fixes.

A practical eligibility statement looks like:

- "Active paying accounts on Pro+ with at least 3 seats and integrations enabled."

- "Active users who have permission to create reports."
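Writing the eligibility statement as an explicit predicate makes it easy to review and to reuse as the denominator filter. The sketch below encodes the first statement. The `Account` record, its field names, and the assumption that "Pro+" means Pro and Enterprise are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical account record: field names are illustrative, not a real schema.
@dataclass
class Account:
    account_id: str
    plan: str                  # e.g. "Free", "Pro", "Enterprise"
    is_active: bool            # currently paying, not churned
    seats: int
    integrations_enabled: bool

# Assumption: "Pro+" means Pro and every plan above it.
PRO_PLUS = {"Pro", "Enterprise"}

def is_eligible(acct: Account) -> bool:
    """Active paying accounts on Pro+ with at least 3 seats and integrations enabled."""
    return (
        acct.is_active
        and acct.plan in PRO_PLUS
        and acct.seats >= 3
        and acct.integrations_enabled
    )

accounts = [
    Account("a1", "Pro", True, 5, True),
    Account("a2", "Free", True, 10, True),        # excluded: wrong plan
    Account("a3", "Enterprise", True, 2, True),   # excluded: fewer than 3 seats
    Account("a4", "Pro", False, 8, True),         # excluded: churned
    Account("a5", "Pro", True, 4, False),         # excluded: integrations off
]
eligible_ids = [a.account_id for a in accounts if is_eligible(a)]
print(eligible_ids)  # ['a1']
```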

Decide the unit: account vs user

The right default unit depends on where your product delivers value.

| Measurement unit | Best when | Example adoption definition |
|---|---|---|
| Account-level adoption | Value is realized at the company level | "Account has at least one user who ran the workflow successfully in the last 28 days." |
| User-level adoption | Usage is individual and seat-based | "User created at least 3 items with the feature in the last 14 days." |

If you sell per-seat, you often care about both: account adoption predicts retention; user adoption predicts expansion via seat growth and stickiness (see DAU/MAU Ratio (Stickiness)).
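The same usage data can give very different account-level and user-level numbers. The sketch below is a minimal illustration with made-up accounts and users. It assumes an account counts as adopted when at least one of its eligible users met the user-level definition, matching the account-level example in the table.

```python
# Hypothetical inputs: eligible users grouped by account, plus the users who met
# the user-level meaningful-use definition in the window.
eligible_users = {
    "acme": {"u1", "u2", "u3", "u4"},
    "globex": {"u5", "u6"},
    "initech": {"u7", "u8", "u9"},
}
adopted_users = {"u1", "u5", "u6"}

def user_level_rate(eligible_users: dict[str, set[str]], adopted_users: set[str]) -> float:
    """Adopted eligible users divided by all eligible users."""
    all_users = set().union(*eligible_users.values())
    return len(all_users & adopted_users) / len(all_users)

def account_level_rate(eligible_users: dict[str, set[str]], adopted_users: set[str]) -> float:
    """An account counts as adopted if at least one of its eligible users adopted."""
    adopted_accounts = [acct for acct, users in eligible_users.items() if users & adopted_users]
    return len(adopted_accounts) / len(eligible_users)

print(f"user-level:    {user_level_rate(eligible_users, adopted_users):.1%}")     # 33.3%
print(f"account-level: {account_level_rate(eligible_users, adopted_users):.1%}")  # 66.7%
```

In this data, one user adopting is enough to make "acme" an adopted account, even though three of its four users have not adopted.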

How to calculate it

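The simplest calculation is:

$$
\text{Feature adoption rate} = \frac{\text{eligible customers who meaningfully used the feature in the time window}}{\text{eligible customers}} \times 100\%
$$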

That seems straightforward until you define "used," "eligible," and "active."
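As a minimal sketch, the formula reduces to the function below. The function name and the example counts are hypothetical. The guard reflects the fact that adopters must be a subset of the eligible population.

```python
def feature_adoption_rate(adopted: int, eligible: int) -> float:
    """Percent of eligible customers who meaningfully used the feature in the window."""
    if eligible <= 0:
        raise ValueError("eligible population must be non-empty")
    if not 0 <= adopted <= eligible:
        # Adopters must be a subset of the eligible population.
        raise ValueError("adopted must be between 0 and eligible")
    return 100 * adopted / eligible

print(feature_adoption_rate(adopted=120, eligible=400))  # 30.0
```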

Choose a time window that matches behavior

Use a window aligned to the feature's natural cadence:

- Daily/weekly workflow features: 7 or 28 days

- Monthly planning features: 30, 60, or 90 days

- Quarterly compliance features: 90 days (sometimes longer)

Avoid "ever used" for operational decisions. It usually overstates adoption and hides regressions.
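To see how "ever used" overstates adoption, compare it with a rolling window on the same accounts. This is a sketch using made-up data. It assumes you store the most recent successful use per eligible account, current as of the reporting date.

```python
from datetime import date, timedelta

# Hypothetical data: most recent successful use per eligible account (None = never used).
# Assumes these dates reflect data up to the reporting date `as_of`.
last_success = {
    "a1": date(2024, 6, 20),
    "a2": date(2024, 1, 5),   # tried once, months ago
    "a3": None,
    "a4": date(2024, 6, 2),
}

def active_adoption_rate(last_success: dict, as_of: date, window_days: int) -> float:
    """Share of eligible accounts with a successful use in the window ending on `as_of`."""
    cutoff = as_of - timedelta(days=window_days)
    adopted = [a for a, d in last_success.items() if d is not None and cutoff < d <= as_of]
    return len(adopted) / len(last_success)

def ever_used_rate(last_success: dict) -> float:
    """Share of eligible accounts that have ever used the feature."""
    return sum(d is not None for d in last_success.values()) / len(last_success)

as_of = date(2024, 6, 28)
print(f"28-day active adoption: {active_adoption_rate(last_success, as_of, 28):.0%}")  # 50%
print(f"ever used:              {ever_used_rate(last_success):.0%}")                     # 75%
```

Account "a2" counts toward "ever used" but not toward active adoption. That gap is exactly the kind of regression a lifetime measure hides.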

Define "meaningful use" (not clicks)

A click is discovery, not adoption.

Good meaningful-use definitions:

- Created something and completed the workflow successfully.

- Repeated use (e.g., 2+ times) if one-time setup is common.

- Reached an outcome tied to value (export completed, alert delivered, automation ran).

A useful pattern is two-stage adoption:

- Activation: first successful use

- Habit: repeated successful use

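You can express habit adoption as:

$$
\text{Habit adoption rate} = \frac{\text{eligible customers with at least } N \text{ successful uses in the window}}{\text{eligible customers}} \times 100\%
$$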

Where N should reflect real value (often 2–5, not 20).
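Both stages can be computed from the same log of successful uses. The sketch below uses hypothetical account IDs and a threshold of N = 2. It counts only successful uses, so clicks and failed attempts never reach either stage.

```python
from collections import Counter

# Hypothetical data: one entry per *successful* use (account_id) inside the window.
successful_uses = ["a1", "a1", "a1", "a2", "a3", "a3"]
eligible = ["a1", "a2", "a3", "a4", "a5"]

def stage_rates(successful_uses: list[str], eligible: list[str], n: int) -> tuple[float, float]:
    """Return (activation rate, habit rate) over the eligible population."""
    counts = Counter(successful_uses)
    eligible_set = set(eligible)
    activated = sum(1 for a in eligible_set if counts[a] >= 1)  # first successful use
    habitual = sum(1 for a in eligible_set if counts[a] >= n)   # repeated successful use
    return activated / len(eligible_set), habitual / len(eligible_set)

activation, habit = stage_rates(successful_uses, eligible, n=2)
print(f"activation {activation:.0%}, habit (N=2) {habit:.0%}")  # activation 60%, habit (N=2) 40%
```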

Avoid denominator traps

Common denominator mistakes:

- Including churned/inactive customers

- Including customers who cannot access the feature (wrong plan, missing permissions)

- Counting trials the same as paying customers when their behavior is fundamentally different
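Each of these traps shifts the rate on the same underlying data. The sketch below uses a made-up customer list and hypothetical status values. It compares a naive "everyone" denominator with one that excludes churned customers and customers without access, and it reports trials separately.

```python
from dataclasses import dataclass

@dataclass
class Customer:
    cid: str
    status: str        # hypothetical values: "active", "churned", or "trial"
    has_access: bool   # plan and permissions allow the feature
    adopted: bool      # met the meaningful-use definition in the window

customers = [
    Customer("c1", "active", True, True),
    Customer("c2", "active", True, False),
    Customer("c3", "active", True, True),
    Customer("c4", "churned", True, False),   # trap: churned
    Customer("c5", "active", False, False),   # trap: cannot access the feature
    Customer("c6", "trial", True, False),     # trap: trial mixed with paying
    Customer("c7", "trial", True, True),
]

def rate(population: list[Customer]) -> float:
    return sum(c.adopted for c in population) / len(population)

naive = rate(customers)
paying = [c for c in customers if c.status == "active" and c.has_access]
trials = [c for c in customers if c.status == "trial" and c.has_access]
print(f"naive (everyone): {naive:.1%}")         # 42.9%
print(f"eligible paying:  {rate(paying):.1%}")  # 66.7%
print(f"eligible trials:  {rate(trials):.1%}")  # 50.0%
```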

If you're already tracking activation or onboarding, pair adoption with Onboarding Completion Rate and Time to Value (TTV) so you can separate "they never got there" from "they got there and bounced."

Is adoption improving over time

A single adoption number is a snapshot. Founders need to know whether adoption is improving for new customers and for existing customers.

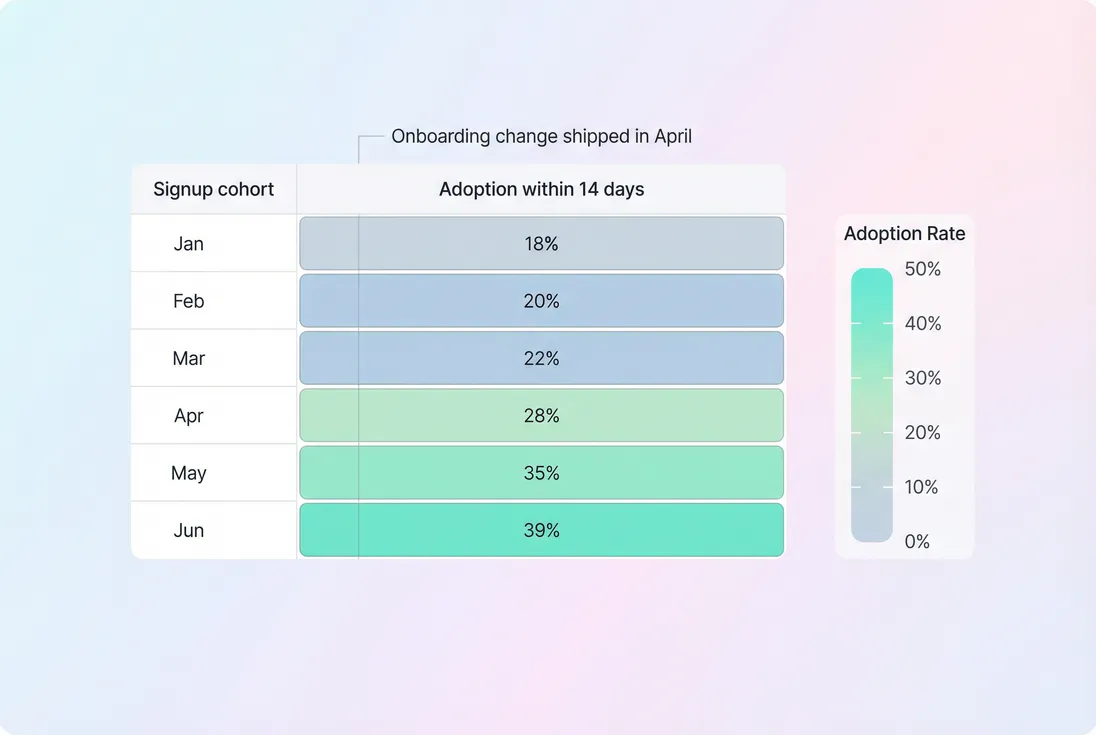

Use cohort adoption to see real change

When you change onboarding, documentation, pricing, or UX, overall adoption can lag because your customer base includes old cohorts. Cohorts reveal whether the change is working.

Cohorts isolate whether adoption improvements are real (new customers behaving differently) versus noise from an older customer base.

This answers the founder question: "Did the change we shipped actually move customer behavior?" If cohorts after April jump while earlier cohorts stay flat, your onboarding change likely worked—and your next job is to backport the change to existing customers.
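A cohort view groups accounts by signup month. It measures adoption within a fixed number of days of signup, so older cohorts do not get credit for having had more time. The sketch below is a minimal version with hypothetical rows. It skips accounts that are too new to have had the full window.

```python
from collections import defaultdict
from datetime import date, timedelta

# Hypothetical rows: (account_id, signup_date, first_successful_use or None)
accounts = [
    ("a1", date(2024, 3, 4), date(2024, 3, 20)),
    ("a2", date(2024, 3, 15), None),
    ("a3", date(2024, 3, 28), date(2024, 5, 10)),   # adopted, but after 43 days
    ("a4", date(2024, 5, 2), date(2024, 5, 9)),
    ("a5", date(2024, 5, 19), date(2024, 5, 30)),
    ("a6", date(2024, 5, 23), None),
]

def cohort_adoption(accounts, as_of: date, within_days: int = 30) -> dict[str, float]:
    """Share of each signup-month cohort that adopted within `within_days` of signup."""
    totals, adopted = defaultdict(int), defaultdict(int)
    for _, signup, first_success in accounts:
        if signup + timedelta(days=within_days) > as_of:
            continue  # too new: has not had the full window yet
        cohort = signup.strftime("%Y-%m")
        totals[cohort] += 1
        if first_success is not None and first_success - signup <= timedelta(days=within_days):
            adopted[cohort] += 1
    return {c: adopted[c] / totals[c] for c in sorted(totals)}

for cohort, share in cohort_adoption(accounts, as_of=date(2024, 7, 1)).items():
    print(cohort, f"{share:.0%}")  # 2024-03 33%, then 2024-05 67%
```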

Track time-to-first-use

Adoption rate can stay flat while customers adopt faster—which matters for conversion and retention.

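A simple companion metric is "adopted within X days":

$$
\text{Adopted within } X \text{ days} = \frac{\text{new eligible customers whose first meaningful use came within } X \text{ days of signup}}{\text{new eligible customers in the cohort}} \times 100\%
$$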

In practice, X is often 7 or 14 days. This is especially useful for PLG motions where early value predicts conversion (see Free Trial and Product-Led Growth).
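The sketch below uses two made-up cohorts to show how the adoption rate can stay flat while customers adopt faster. The input is a hypothetical list of days from signup to first meaningful use. `None` marks customers who have not adopted.

```python
from statistics import median

# Hypothetical days from signup to first meaningful use (None = not adopted yet).
before_change = [3, 12, 20, 25, None, None]
after_change = [1, 2, 4, 9, None, None]

def adopted_within(days_to_first_use: list, x: int) -> float:
    """Share of the cohort whose first meaningful use came within x days of signup."""
    return sum(d is not None and d <= x for d in days_to_first_use) / len(days_to_first_use)

def median_days_to_first_use(days_to_first_use: list):
    """Median time to first use among adopters only (None if nobody adopted)."""
    observed = [d for d in days_to_first_use if d is not None]
    return median(observed) if observed else None

for label, cohort in [("before", before_change), ("after", after_change)]:
    print(label,
          f"within 30d: {adopted_within(cohort, 30):.0%}",   # 67% for both cohorts
          f"within 7d: {adopted_within(cohort, 7):.0%}",     # 17% before, 50% after
          f"median days: {median_days_to_first_use(cohort)}")  # 16.0 before, 3.0 after
```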

What drives adoption up or down

Adoption changes are rarely mysterious if you map them to a few levers. When adoption moves, look in this order:

1) Discoverability and sequencing

If the feature is hard to find or appears too early, customers skip it.

Typical fixes:

- Move feature entry points closer to the moment of need

- Add contextual prompts after a prerequisite action

- Reduce "blank state" confusion with a clear first step

2) Friction and failure rate

Many "low adoption" features are actually "high attempt, low success."

Instrument:

- Starts vs successful completions

- Errors, permission denials, missing prerequisites

- Time to completion

If starts are high but successes are low, adoption will look low for the right reason: customers tried and failed. The fix is reliability, UX, and defaults—not more education.
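With start, success, and error events instrumented, the start-to-success rate and the leading failure reasons take only a few lines to compute. This is a sketch over a hypothetical event log. It assumes errors are recorded as strings prefixed with `error:`.

```python
from collections import Counter

# Hypothetical event log: (account_id, event). Errors are assumed to look like
# "error:<reason>".
events = [
    ("a1", "start"), ("a1", "success"),
    ("a2", "start"), ("a2", "error:permission_denied"),
    ("a3", "start"), ("a3", "error:missing_prerequisite"),
    ("a4", "start"), ("a4", "success"),
    ("a5", "start"), ("a5", "error:permission_denied"),
]

starters = {a for a, e in events if e == "start"}
succeeders = {a for a, e in events if e == "success"}
failure_reasons = Counter(e.split(":", 1)[1] for _, e in events if e.startswith("error:"))

success_rate = len(starters & succeeders) / len(starters)
print(f"start-to-success: {success_rate:.0%}")  # 40%
print(failure_reasons.most_common())            # [('permission_denied', 2), ('missing_prerequisite', 1)]
```

Here, most failures are permission denials. That points toward fixing defaults or roles rather than adding education.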

3) Role and permission mismatch

Account-level features often fail because the wrong person sees them.

Example:

- Individual contributors want the feature, but only admins can enable it.

- Admins can enable it, but don't feel the pain that motivates adoption.

This is where account-level and user-level adoption together are clarifying: user-level desire without account-level enablement usually signals a permissions or champion problem.

4) Packaging and price signals

If a feature is positioned as premium but drives core value, adoption will be constrained by plan mix.

This connects to revenue metrics:

- If the feature is an upgrade driver, you should see higher Expansion MRR among adopters (with proper segmentation).

- If it is required to retain, you should see better NRR (Net Revenue Retention) and lower churn among adopters.

If neither is true, packaging may be misaligned—or the feature is not delivering value.

The Founder's perspective

Adoption is a product signal, not a vanity metric. When it moves, assume your customers are reacting to something you changed: UX, reliability, onboarding, permissions, or plan boundaries. The right response is to identify the lever—not to demand a higher number.

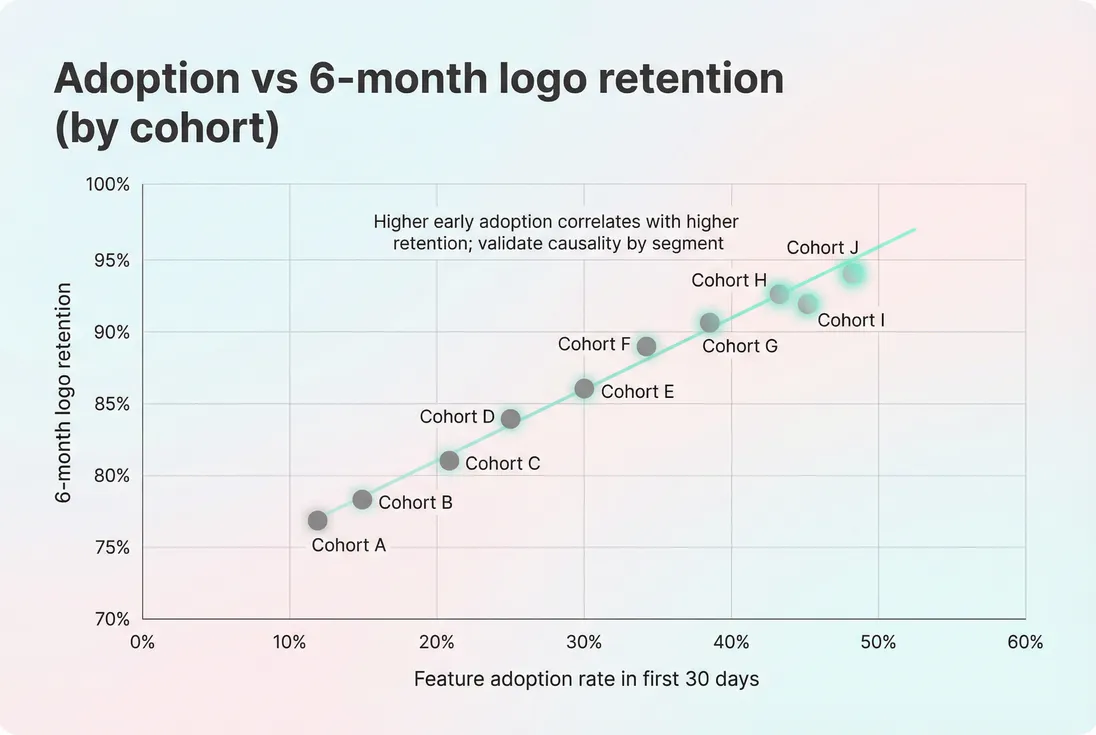

Does adoption translate into retention or expansion

Adoption is only "good" if it predicts something you care about: retention, expansion, or lower support burden.

Compare adopters vs non-adopters

A practical approach:

- Define adoption for a feature in the first 30 days of a customer's life.

- Split customers into adopters and non-adopters.

- Compare:

  - Logo retention (see Logo Churn)

  - Revenue retention (see GRR (Gross Revenue Retention) and NRR (Net Revenue Retention))

  - Expansion behavior (see Expansion MRR)

- Repeat by cohort and by segment (plan, company size, use case).

If adopters retain materially better, the feature is likely part of your product's "value engine." If there is no difference, the feature may be:

- Nice-to-have

- Only valuable for a narrow segment

- Adopted superficially (your meaningful-use definition is too weak)
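The core adopter versus non-adopter comparison is a grouped rate. The sketch below uses hypothetical rows. It assumes adoption is flagged for the first 30 days and retention is measured at 12 months. The same pattern applies to revenue retention or expansion.

```python
# Hypothetical rows: (account_id, adopted_in_first_30_days, retained_at_12_months)
rows = [
    ("a1", True, True), ("a2", True, True), ("a3", True, False), ("a4", True, True),
    ("a5", False, True), ("a6", False, False), ("a7", False, False), ("a8", False, True),
]

def retention_rate(rows, adopted: bool) -> float:
    """Logo retention for adopters (adopted=True) or non-adopters (adopted=False)."""
    group = [retained for _, did_adopt, retained in rows if did_adopt == adopted]
    return sum(group) / len(group)

adopters = retention_rate(rows, adopted=True)
non_adopters = retention_rate(rows, adopted=False)
print(f"adopters {adopters:.0%} vs non-adopters {non_adopters:.0%}")  # adopters 75% vs non-adopters 50%
```

Treat a gap like this as a starting point only. Selection bias can produce it even when the feature is not causal.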

Beware selection bias

Power users adopt more features and retain more, even if the feature is not causal.

To reduce self-deception:

- Compare within the same segment (same plan, similar size, similar acquisition channel)

- Look for adoption changes driven by product changes (cohort step-changes)

- When possible, use controlled rollouts (feature flags) to create quasi-experimental comparisons
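Comparing within segments can expose a pooled gap that comes entirely from mix. The counts below are invented to make the point. Enterprise accounts adopt more and retain better regardless of adoption, so the pooled comparison shows an 18-point gap that disappears inside each segment.

```python
# Hypothetical counts: segment -> {adopted?: (retained, total)}
data = {
    "Enterprise": {True: (36, 40), False: (9, 10)},
    "SMB": {True: (6, 10), False: (24, 40)},
}

def pooled_retention(data, adopted: bool) -> float:
    """Retention across all segments combined, ignoring segment mix."""
    retained = sum(seg[adopted][0] for seg in data.values())
    total = sum(seg[adopted][1] for seg in data.values())
    return retained / total

def within_segment_gaps(data) -> dict[str, float]:
    """Adopter minus non-adopter retention, computed inside each segment."""
    return {
        name: seg[True][0] / seg[True][1] - seg[False][0] / seg[False][1]
        for name, seg in data.items()
    }

pooled_gap = pooled_retention(data, True) - pooled_retention(data, False)
print(f"pooled gap: {pooled_gap * 100:+.1f} pts")  # +18.0 pts
for name, gap in within_segment_gaps(data).items():
    print(f"{name} gap: {gap * 100:+.1f} pts")     # +0.0 pts in each segment
```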

Adoption becomes strategically valuable when it predicts retention or expansion—otherwise it is just activity.

What to do when adoption changes

Adoption rate is most useful as a weekly operating metric with clear "if this, then that" responses.

When adoption drops

Treat drops as an investigation with a short checklist:

- Instrumentation changed? Event names, tracking coverage, or pipelines.

- Eligibility changed? Plan changes, permission defaults, new segments added to denominator.

- Feature reliability changed? Error rates, performance, integrations failing.

- UX path changed? Navigation changes, removed entry points, new workflow sequence.

If the drop is real, act based on where the funnel breaks:

- Low discovery: improve entry points, education, contextual prompts.

- High starts, low success: fix UX, defaults, or reliability first.

- High success, low repeat: feature value is unclear, or the workflow is too heavy.

- Repeat high, but only in one segment: tighten ICP targeting or reposition the feature for that segment.
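One crude way to apply this checklist is to compute step-to-step conversions and start with the weakest step. The sketch below does that with hypothetical stage names and counts. It covers only the first three responses, and it compares raw conversions, which is a simplification. Segment concentration needs the by-segment view instead.

```python
# Hypothetical weekly counts of eligible accounts reaching each stage.
funnel = [("eligible", 500), ("started", 300), ("succeeded", 105), ("repeated", 80)]

# First response per step, paraphrasing the checklist above.
RESPONSES = {
    "started": "low discovery: improve entry points, education, contextual prompts",
    "succeeded": "high starts, low success: fix UX, defaults, or reliability first",
    "repeated": "high success, low repeat: clarify value or lighten the workflow",
}

def weakest_step(funnel) -> tuple[float, str]:
    """Return (conversion, step) for the stage with the lowest step-to-step conversion."""
    conversions = [
        (count / prev_count, name)
        for (_, prev_count), (name, count) in zip(funnel, funnel[1:])
    ]
    return min(conversions)

conversion, step = weakest_step(funnel)
print(f"{step}: {conversion:.0%} -> {RESPONSES[step]}")  # succeeded: 35% -> high starts, low success: ...
```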

When adoption rises

Rises can be good—or misleading.

Validate:

- Are customers completing meaningful outcomes, not just starting?

- Did adoption rise because you forced the flow (e.g., modal blocking)?

- Did support tickets increase (new complexity) or decrease (real value)?

A healthy rise typically comes with:

- Faster time-to-value (see Time to Value (TTV))

- Better retention cohorts (see Cohort Analysis)

- Higher expansion behavior for the right segments (see Expansion MRR)

The Founder's perspective

The best adoption wins look boring: fewer steps, fewer failures, clearer defaults. If adoption only increases when you nag users, you are manufacturing clicks—not building a workflow customers want.

Benchmarks founders can actually use

There is no universal "good" adoption rate. But you can benchmark by feature category and how broadly applicable it is.

Practical ranges (for eligible active accounts)

| Feature type | Typical adoption range | What "good" means |
|---|---|---|
| Core workflow | 40–70% | Most eligible customers rely on it regularly. |
| Secondary workflow | 20–50% | Strong for a subset; often segment-dependent. |
| Power feature | 10–30% | High-value for advanced users; should predict retention/expansion. |
| Admin/compliance | 5–25% | Low is fine if only some accounts require it; measure success rate. |
| One-time setup | 30–80% (ever), lower (active) | Track setup completion separately from ongoing usage. |

Use these ranges to sanity check, not to set goals.

How to set a target without guessing

Targets should be tied to a decision:

- If the feature is a retention driver, target adoption in the segment with the highest churn risk.

- If the feature is an upgrade driver, target adoption among accounts at the expansion threshold (e.g., nearing seat or usage limits).

- If the feature is a differentiator, target adoption during onboarding (adopted within 7–14 days).

A good internal target statement is:

- "Increase adoption within 14 days from 22% to 35% for ICP accounts on Pro, without increasing time-to-complete."

That forces you to keep the metric honest.

Where feature adoption rate breaks

These are the failure modes that create false confidence or false alarms.

"Ever used" hides regressions

A customer who tried the feature once six months ago should not count as adopted today. Use rolling windows for "active adoption" and cohorts for early adoption.

One user can mask a whole account

Account adoption can read high when a single champion uses it but no one else does. Track a "breadth" metric:

- "At least 3 users in the account used the feature in last 28 days" (when appropriate)
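Breadth can be reported next to account adoption using the same usage log. The sketch below uses hypothetical (account, user) pairs and the 3-user threshold from the example above. The threshold is a parameter, since the right value depends on account size.

```python
from collections import defaultdict

# Hypothetical meaningful uses in the last 28 days: (account_id, user_id).
uses = [
    ("acme", "u1"), ("acme", "u1"), ("acme", "u2"), ("acme", "u3"),
    ("globex", "u9"), ("globex", "u9"),
    ("initech", "u4"), ("initech", "u5"),
]
eligible_accounts = ["acme", "globex", "initech", "umbrella"]

def adoption_and_breadth(uses, eligible_accounts, min_users: int = 3) -> tuple[float, float]:
    """Return (share with any adopting user, share with at least min_users adopting users)."""
    users_by_account = defaultdict(set)
    for account, user in uses:
        users_by_account[account].add(user)
    eligible = set(eligible_accounts)
    any_user = sum(1 for a in eligible if users_by_account[a])
    broad = sum(1 for a in eligible if len(users_by_account[a]) >= min_users)
    return any_user / len(eligible), broad / len(eligible)

adoption, breadth = adoption_and_breadth(uses, eligible_accounts)
print(f"account adoption {adoption:.0%}, breadth (3+ users) {breadth:.0%}")  # 75%, 25%
```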

Forced flows inflate adoption

If you insert the feature into a required workflow step, adoption may spike while satisfaction drops. Pair adoption with outcome measures (completion, success, time saved) and qualitative signals like Churn Reason Analysis.

Eligibility creep changes the denominator

If you expand eligibility to new plans or segments, the adoption rate can drop even while the number of adopted customers grows. Always report:

- adoption rate

- eligible count

- adopted count

So you can tell whether the business is actually improving.
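A small report record that always carries all three numbers makes eligibility creep visible. The sketch below uses hypothetical quarterly figures in which a new plan is added to the eligible population in Q2.

```python
from dataclasses import dataclass

@dataclass
class AdoptionReport:
    period: str
    eligible: int
    adopted: int

    @property
    def rate(self) -> float:
        """Adopted share of the eligible population for this period."""
        return self.adopted / self.eligible

reports = [
    AdoptionReport("Q1", eligible=400, adopted=160),
    AdoptionReport("Q2", eligible=900, adopted=270),  # hypothetical: new plan made eligible
]
for r in reports:
    print(f"{r.period}: rate {r.rate:.0%}, eligible {r.eligible}, adopted {r.adopted}")
# Q1: rate 40%, eligible 400, adopted 160
# Q2: rate 30%, eligible 900, adopted 270
```

Read alone, the rate suggests a decline. The counts show 110 more adopted customers.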

How founders operationalize it

A lightweight cadence that works:

- Weekly: Feature adoption rate (active window), eligible count, adopted count, success rate

- Monthly: Cohort adoption within 14/30 days for new customers

- Quarterly: Adoption vs retention/expansion analysis by segment

Tie ownership to the lever:

- Product owns discoverability, UX, reliability, defaults

- Growth/PLG owns onboarding sequencing and education

- CS owns enablement and champion mapping for high-value accounts

If you do this consistently, feature adoption rate becomes a clear bridge between "what we shipped" and "what moved the business."

Frequently asked questions

Start by defining eligibility and meaningful use. Eligibility is who should reasonably use the feature given plan, permissions, and workflow. Meaningful use is a value-producing action, not a click. Add a time window like last 28 days. If you cannot explain the definition to Sales and Support, it is too fuzzy.

It depends on feature type and audience. Core workflow features might reach 40 to 70 percent of eligible active accounts in 30 days. Power features often land around 10 to 30 percent. Admin or compliance features can be much lower but still critical. The useful benchmark is your own trend by cohort after comparable launches.

Use account-level adoption when value is realized at the company level and you care about retention and expansion. Use user-level adoption when seats are the product and usage is individual. Many teams track both: account adoption to guide Customer Success plays, and user adoption to guide onboarding, permissions, and in-app education.

Pick a window that matches the natural usage cadence. Daily workflow features can use 7 or 28 days. Monthly planning features might need 60 or 90 days. Avoid lifetime "ever used" measures for decision-making, because they hide customers who stopped using the feature. If you are testing onboarding changes, use adoption within 7 to 14 days of signup as a sharper signal.

First verify that adoption is meaningful and not driven by forced UI placement or one-time setup. Then segment by ideal customer profile, plan, and use case. If adopters still churn at similar rates, the feature may be table stakes, poorly tied to value, or relevant only to a subset. Pair adoption with [Cohort Analysis](/academy/cohort-analysis/) and retention metrics to validate impact.