CSAT (customer satisfaction score)

Founders care about CSAT because it's one of the fastest signals that something in the customer experience is about to cost you revenue—through churn, downgrades, delayed expansions, or noisy support that drags your team.

CSAT (Customer Satisfaction Score) is a simple metric that measures how satisfied customers are with a specific interaction, experience, or time period—usually captured through a short survey (often a 1–5 rating) right after an event like a support resolution or onboarding milestone.

What CSAT reveals in practice

CSAT is most useful when you treat it as an operational quality metric, not a brand vanity metric. It answers: Did we meet expectations in the moment that mattered?

When CSAT is implemented well, it helps you:

- Catch experience issues before you see them in Logo Churn or Net MRR Churn Rate

- Identify whether the problem is product value, support execution, billing friction, or onboarding clarity

- Prioritize fixes by segment (high ARPA accounts vs. long-tail) and by touchpoint

The Founder's perspective

I do not use CSAT to prove we are "customer-centric." I use it to find which part of the machine is leaking: onboarding, reliability, support process, or pricing/billing. Then I decide whether to invest in product fixes, support staffing, or clearer expectations.

How CSAT is calculated

Most SaaS teams calculate CSAT using a 1–5 (or 1–7) scale question like:

- "How satisfied are you with the support you received?"

- "How satisfied are you with onboarding so far?"

Then you define what counts as "satisfied" (commonly 4–5 on a 5-point scale).

Example: 92 customers respond. 78 choose 4 or 5. CSAT = 78 / 92 × 100 = 84.8%.
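The threshold calculation above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `csat_percent` and the split of ratings within the satisfied and unsatisfied groups are assumptions made up for the example; only the totals (92 responses, 78 rated 4 or 5) come from the text.

```python
def csat_percent(ratings, satisfied_min=4):
    """Share of responses at or above the 'satisfied' threshold, as a percentage."""
    if not ratings:
        raise ValueError("no responses: CSAT is undefined")
    satisfied = sum(1 for r in ratings if r >= satisfied_min)
    return 100.0 * satisfied / len(ratings)


# Illustrative data: 78 responses rated 4 or 5, 14 rated 3 or lower (92 total).
ratings = [5] * 50 + [4] * 28 + [3] * 8 + [2] * 4 + [1] * 2

print(round(csat_percent(ratings), 1))  # 84.8
```

Keeping `satisfied_min` as an explicit parameter makes the threshold choice visible wherever the number is computed.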

Alternative: normalized average score

Some teams report CSAT as a normalized percentage based on the average rating. This is less common for support, but sometimes used for in-app surveys.

Be careful: these two approaches can move differently. If you change the method midstream, you break trend comparability.
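To see how the two approaches can diverge, here is a small sketch. The normalization used below, rescaling the mean rating from the 1–5 range onto 0–100, is one common convention and an assumption here; the article does not specify a formula, and the sample ratings are invented for illustration.

```python
def top_box_csat(ratings, satisfied_min=4):
    """Percentage of responses at or above the satisfied threshold."""
    return 100.0 * sum(r >= satisfied_min for r in ratings) / len(ratings)


def normalized_average(ratings, scale_min=1, scale_max=5):
    """Mean rating rescaled to 0-100 (assumed normalization, not a standard)."""
    mean = sum(ratings) / len(ratings)
    return 100.0 * (mean - scale_min) / (scale_max - scale_min)


# Same share of 4s and 5s in both weeks, but the unhappy customers
# in week_b are much unhappier.
week_a = [5] * 4 + [4] * 4 + [3] * 2
week_b = [5] * 4 + [4] * 4 + [1] * 2

for name, week in (("week_a", week_a), ("week_b", week_b)):
    print(name, round(top_box_csat(week), 1), round(normalized_average(week), 1))
# week_a 80.0 80.0
# week_b 80.0 70.0
```

The threshold method is blind to how low the unsatisfied ratings go, while the average is not, so switching methods midstream changes what the trend line is actually tracking.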

The two definitions you must lock down

To keep CSAT decision-grade, document these two choices and keep them consistent:

- Threshold: What ratings count as "satisfied" (for example 4–5)

- Moment: What event triggers the survey (ticket closed, day 7 of trial, after renewal call)

If you change either one, treat it like redefining a financial metric: annotate it and reset baselines.

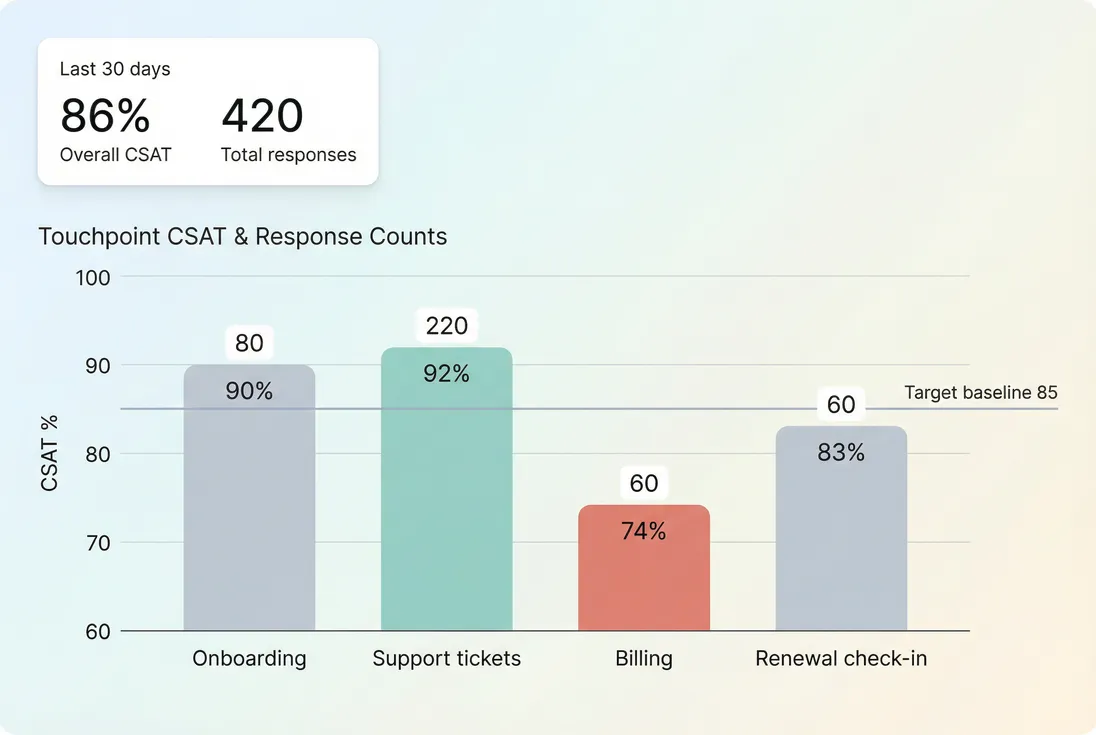

Where founders should measure CSAT

CSAT is strongest when tied to a clear "job to be done" moment. Avoid a generic "How satisfied are you with our product?" unless you have a specific reason and a stable sampling plan.

Here are the highest-leverage SaaS touchpoints:

Support ticket CSAT

This is the classic use case. It's closest to execution quality: response time, clarity, empathy, and whether the issue was actually resolved.

Use it to manage:

- Support staffing and training

- Escalation rules

- QA and knowledge base gaps

But don't confuse "support happiness" with "product value." A customer can be thrilled with support while still churning because the product doesn't deliver ROI.

Onboarding CSAT

Measure satisfaction after milestones, not after time. Tie it to moments like:

- First integration connected

- First report built

- First workflow automated

This pairs naturally with Time to Value (TTV) and Onboarding Completion Rate. If onboarding CSAT dips, your activation path is probably unclear or too complex.

Billing and payment CSAT

Billing issues create outsized frustration because they feel unfair: failed payments, confusing invoices, proration surprises, and refund delays.

If billing CSAT drops, check:

- Failed payment rates (often tied to Involuntary Churn)

- Refund volume and reasons (Refunds in SaaS)

- Accounts receivable delays in annual invoicing (Accounts Receivable (AR) Aging)

- VAT and tax handling complexity (VAT handling for SaaS)

Renewal or "QBR" CSAT

For B2B, ask after a renewal call or quarterly business review. This captures whether your value narrative and outcomes are landing.

This is where CSAT often predicts:

- Expansion readiness (Expansion MRR)

- Downgrade risk (Contraction MRR)

- Retention outcomes (NRR (Net Revenue Retention), GRR (Gross Revenue Retention))

The Founder's perspective

If renewal CSAT drops for accounts above our average ARPA, I assume our perceived value is deteriorating—even if support CSAT looks great. That's a roadmap and customer success priority shift, not a "coach support to be nicer" problem.

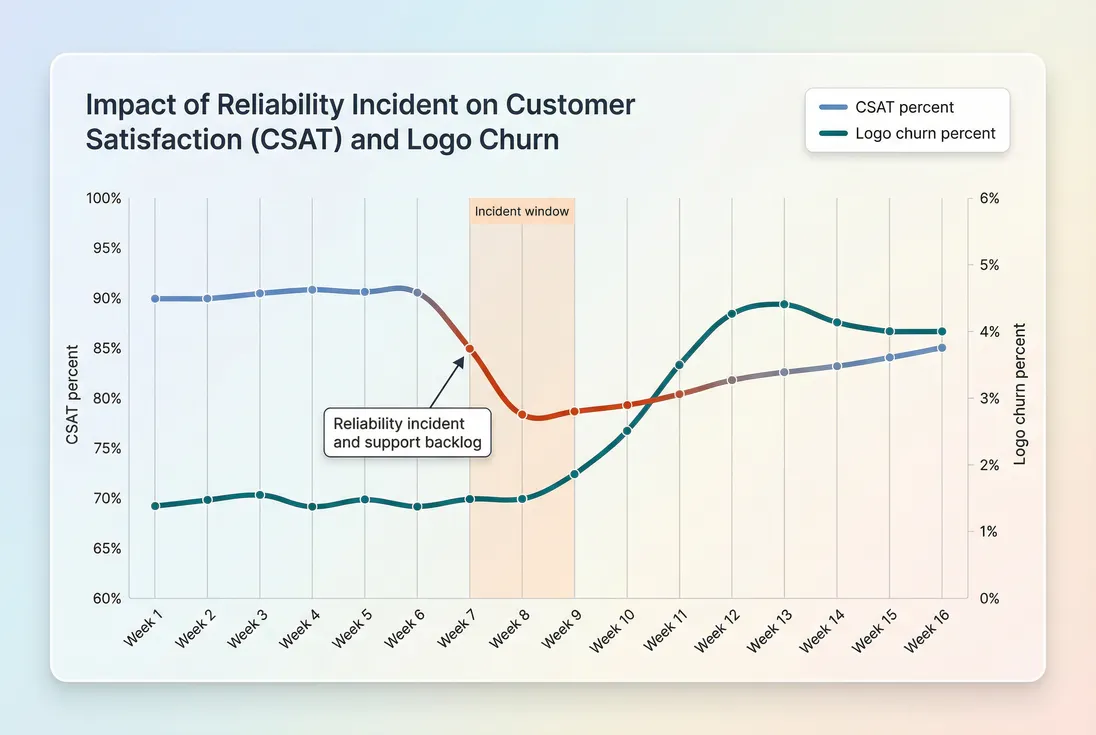

What actually moves CSAT

CSAT is driven by expectations versus experience. That's why it can change even when your product hasn't.

High-impact drivers in SaaS:

Reliability and incidents

Outages and degraded performance hit satisfaction quickly, especially for workflow-critical products. Tie CSAT trends to incident logs and Uptime and SLA events.

Time-to-resolution and first response time (support)

Customers remember waiting. Even if you solve it, delays can drag CSAT.

Clarity and ownership

"We're on it, here's the plan, here's when you'll hear back" often matters more than raw speed.

Product friction and effort

If customers must fight the UI or do workarounds, CSAT will sag. This is where CES (Customer Effort Score) can complement CSAT: CSAT tells you how they felt; CES tells you why.

Pricing and packaging surprises

Price increases, seat minimums, limits, and overages can drop CSAT even if the product improved. If you're running pricing experiments, pair CSAT with Price Elasticity thinking and watch renewal touchpoints.

How to interpret changes (without overreacting)

A CSAT number is easy to compute and easy to misread. Here's how to make it reliable.

Always interpret CSAT with volume

CSAT is a ratio. Low response counts make it swingy.

Practical rule: if a segment has fewer than ~30 responses in your chosen window, treat the CSAT as directional and rely more on:

- verbatim comments

- top issue categories

- follow-up calls
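One way to make "directional" concrete is to put an uncertainty range around the score. The sketch below uses the Wilson score interval, a standard interval for proportions; it is a technique brought in for illustration rather than something the rule of thumb above prescribes, and the sample counts are invented.

```python
import math


def wilson_interval(satisfied, total, z=1.96):
    """Approximate 95% Wilson score interval for a CSAT proportion, in percent."""
    if total == 0:
        raise ValueError("no responses")
    p = satisfied / total
    denom = 1 + z * z / total
    center = (p + z * z / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return 100 * (center - half), 100 * (center + half)


small = wilson_interval(17, 20)    # 85% CSAT from 20 responses
large = wilson_interval(170, 200)  # 85% CSAT from 200 responses

print([round(x) for x in small])  # roughly 64 to 95
print([round(x) for x in large])  # roughly 79 to 89
```

Both samples report 85%, but with 20 responses the plausible range spans about 30 points, which is why low-volume segments are better read through comments and calls than through the headline number.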

Watch for response bias

CSAT surveys are vulnerable to "who bothered to answer" bias. Common patterns:

- Only very happy or very angry customers respond

- Power users respond more than casual users

- Customers with open issues respond more than healthy accounts

Countermeasures:

- Keep the survey short (one rating + optional comment)

- Trigger it consistently at the same event

- Track response rate and non-response by segment
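Tracking response rate by segment can be as simple as the sketch below. The input shape, a list of `(segment, responded)` pairs with one entry per survey sent, is an assumption for illustration; a real survey tool will export something different.

```python
from collections import defaultdict


def response_rates(surveys):
    """Response rate (%) per segment from (segment, responded) pairs."""
    sent = defaultdict(int)
    answered = defaultdict(int)
    for segment, responded in surveys:
        sent[segment] += 1
        if responded:
            answered[segment] += 1
    return {seg: 100.0 * answered[seg] / sent[seg] for seg in sent}


# Hypothetical send log: four SMB surveys, two enterprise surveys.
surveys = [
    ("smb", True), ("smb", False), ("smb", False), ("smb", False),
    ("enterprise", True), ("enterprise", False),
]

print(response_rates(surveys))  # {'smb': 25.0, 'enterprise': 50.0}
```

A large gap in response rate between segments is a hint that the blended CSAT over-represents whichever group answers more.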

Use deltas, not absolutes

Instead of "Our CSAT is 88%," operate on:

- Week-over-week change

- Touchpoint-by-touchpoint gaps

- Segment gaps (SMB vs. mid-market vs. enterprise)

- Before/after of a process change

A small drop (say 2 points) can be noise unless it persists and appears across multiple segments.
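A minimal sketch of operating on deltas rather than absolutes follows. The two-week persistence rule and the `(label, satisfied, total)` input shape are assumptions chosen for the example, not thresholds from the article.

```python
def weekly_deltas(weekly):
    """weekly: list of (label, satisfied, total) in chronological order."""
    scores = [(label, 100.0 * s / n, n) for label, s, n in weekly]
    deltas = []
    for (_, prev, _), (label, cur, n) in zip(scores, scores[1:]):
        deltas.append(
            {"week": label, "csat": cur, "delta": cur - prev, "responses": n}
        )
    return deltas


def persistent_drop(deltas, min_weeks=2):
    """True if CSAT fell in each of the last `min_weeks` week-over-week changes."""
    recent = deltas[-min_weeks:]
    return len(recent) == min_weeks and all(d["delta"] < 0 for d in recent)


# Hypothetical support-ticket CSAT for three consecutive weeks.
weekly = [("W1", 45, 50), ("W2", 44, 50), ("W3", 42, 50)]
deltas = weekly_deltas(weekly)

print([d["delta"] for d in deltas])  # [-2.0, -4.0]
print(persistent_drop(deltas))       # True
```

Carrying `responses` alongside each delta keeps volume in view when someone reads the trend.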

When CSAT breaks as a metric

CSAT becomes misleading when it's treated as a single company-wide KPI. Typical failure modes:

You measure the wrong moment

If you only survey after ticket closure, you'll improve ticket CSAT—but you might miss that customers are unhappy about onboarding, billing, or missing features.

Fix: measure multiple touchpoints (even if each has low volume), and review them separately.

Your definition of "satisfied" is inconsistent

If some teams treat 3 as satisfied and others treat only 4–5, your CSAT is not comparable.

Fix: standardize the threshold and document it.

You optimize for score, not outcomes

Teams can "game" CSAT by:

- nudging customers to give high scores

- only sending surveys when they expect a good response

- avoiding difficult but necessary policies

Fix: audit sampling, and connect CSAT to outcomes like retention and expansion.

The Founder's perspective

If CSAT is climbing but Churn Reason Analysis still shows "missing functionality" and "not enough value," my CSAT program is telling me about politeness, not product-market fit. I'd rather know the hard truth early.

How CSAT connects to churn and retention

CSAT is usually a leading indicator, but the lead time depends on the touchpoint:

- Support CSAT can predict SMB churn quickly (within weeks)

- Renewal CSAT predicts churn on annual contracts more slowly (over months)

- Billing CSAT can predict involuntary churn almost immediately (days to weeks)

The right way to link CSAT to revenue outcomes is segmentation and lag analysis:

- Segment CSAT by customer type (plan, tenure, industry, contract size)

- Track future outcomes for those cohorts:

  - churn (Customer Churn Rate, Logo Churn)

  - contraction (Contraction MRR)

  - expansion (Expansion MRR)

- Look for patterns like "CSAT below 80% at renewal check-in → churn within 60 days"

A practical interpretation pattern

- CSAT drops in one touchpoint only (for example billing): treat it like a localized operational issue. Assign an owner and fix the process.

- CSAT drops across multiple touchpoints: suspect a broader expectation/value problem (product gaps, pricing changes, reliability).

- CSAT drops for a specific segment (for example enterprise): check whether your product and support model match that segment's requirements.

To validate whether CSAT is predictive for you, build a simple view:

- Customers with last-30-day CSAT below threshold

- Their next-60-day churn / contraction rate versus others

If the gap is meaningful, CSAT becomes a targeting tool for save plays and success outreach.
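The simple view described above might look like the following sketch. The field names `rating_30d` and `lost_60d` (churned or contracted within the next 60 days) are hypothetical, as is the sample data; the point is only the shape of the comparison.

```python
def outcome_gap(customers, threshold=4):
    """Next-60-day loss rate (%) for low-CSAT customers versus everyone else."""
    low = [c for c in customers if c["rating_30d"] < threshold]
    rest = [c for c in customers if c["rating_30d"] >= threshold]

    def loss_rate(group):
        if not group:
            return None  # no customers in this group, rate is undefined
        return 100.0 * sum(c["lost_60d"] for c in group) / len(group)

    return loss_rate(low), loss_rate(rest)


# Hypothetical cohort: 4 low-CSAT customers (2 lost), 6 others (1 lost).
customers = (
    [{"rating_30d": 2, "lost_60d": True}] * 2
    + [{"rating_30d": 3, "lost_60d": False}] * 2
    + [{"rating_30d": 5, "lost_60d": True}] * 1
    + [{"rating_30d": 4, "lost_60d": False}] * 5
)

low_rate, rest_rate = outcome_gap(customers)
print(round(low_rate, 1), round(rest_rate, 1))  # 50.0 16.7
```

With cohorts this small the gap is only suggestive; the same volume caveats that apply to CSAT itself apply to this comparison.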

How founders use CSAT to make decisions

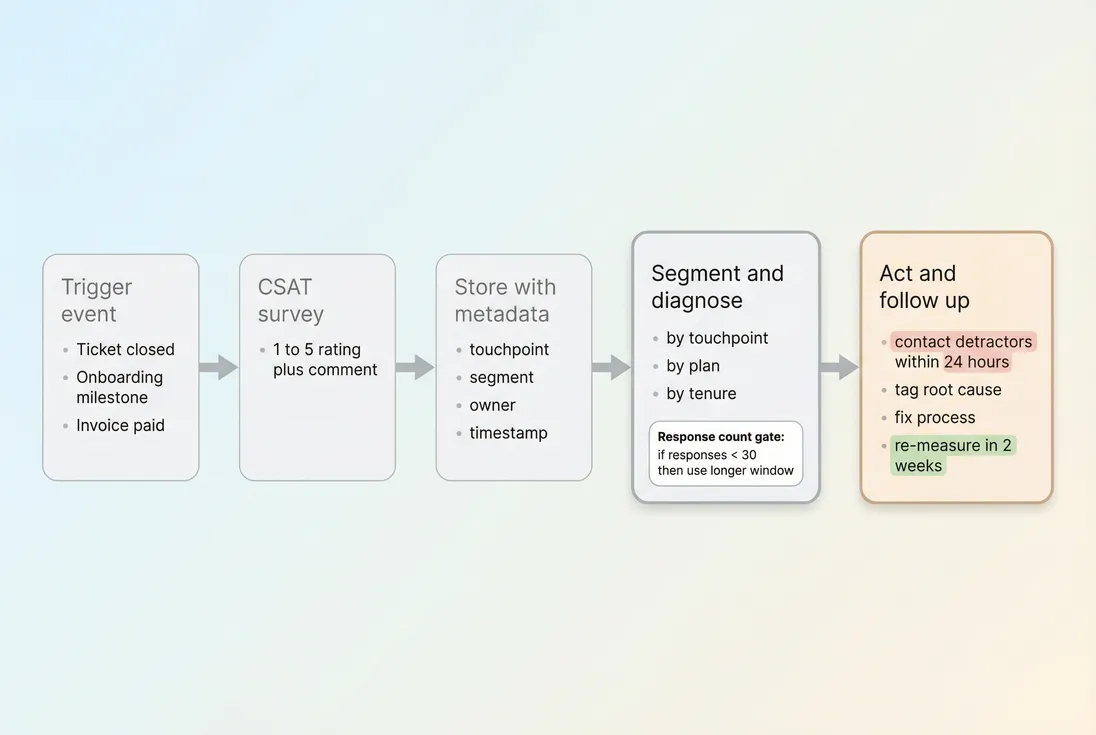

CSAT is most valuable when it closes the loop from signal → diagnosis → action.

Decision 1: Where to invest (support vs. product)

If support CSAT is high but renewal CSAT is slipping, you likely need to invest in:

- product outcomes and ROI narrative

- customer education and enablement

- roadmap items tied to customer jobs

If support CSAT is slipping but product CSAT is stable, invest in:

- staffing levels and coverage

- triage and escalation

- tooling and playbooks

Decision 2: Which customers need intervention

CSAT is an efficient way to prioritize outreach—especially when paired with a broader Customer Health Score.

Use low CSAT to trigger:

- same-day follow-up for detractors

- executive outreach for high-value accounts

- root cause tagging (incident, bug, missing feature, confusion)
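These triggers can be expressed as a small rule function. Everything specific here is an assumption for illustration: the cutoff of 2 for a low rating, the 1000 MRR line for "high-value," and the action names would all need to match your own definitions.

```python
def follow_up_actions(rating, mrr, low_max=2, high_value_mrr=1000):
    """Return the follow-up actions a single CSAT response should trigger."""
    actions = []
    if rating <= low_max:
        actions.append("same_day_follow_up")
        actions.append("tag_root_cause")
        if mrr >= high_value_mrr:
            actions.append("executive_outreach")
    return actions


print(follow_up_actions(rating=2, mrr=2500))
# ['same_day_follow_up', 'tag_root_cause', 'executive_outreach']
print(follow_up_actions(rating=5, mrr=2500))
# []
```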

Decision 3: Whether a change actually helped

When you ship an onboarding redesign, change pricing, or revise support routing, CSAT can confirm impact faster than waiting for retention.

Just make sure you compare like-for-like:

- same touchpoint trigger

- same segment mix

- similar response volume

Implementation checklist that avoids common mistakes

Here's a founder-friendly way to stand up CSAT without turning it into noise.

Survey design

- Ask one rating question tied to a specific moment

- Add one optional open text question: "What could we do better?"

- Keep language consistent (avoid "love" or "delight"; ask about satisfaction)

Data fields to store with every response

Minimum metadata:

- touchpoint (support, onboarding, billing, renewal)

- customer segment (plan, MRR band, tenure)

- channel (email, in-app, chat)

- timestamp

- owner/team (support pod, CSM)

This is what makes CSAT actionable instead of just reportable.
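As a sketch, the minimum metadata could be captured in a record like the one below. The class name, field names, and types are hypothetical; they simply mirror the list above on an assumed 1–5 scale.

```python
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class CsatResponse:
    rating: int              # 1-5 scale (assumed)
    touchpoint: str          # support, onboarding, billing, renewal
    plan: str                # customer segment: plan
    mrr_band: str            # customer segment: MRR band
    tenure_months: int       # customer segment: tenure
    channel: str             # email, in-app, chat
    timestamp: datetime
    owner: str               # support pod or CSM
    comment: Optional[str] = None

    def __post_init__(self):
        if not 1 <= self.rating <= 5:
            raise ValueError("rating must be on the 1-5 scale")


example = CsatResponse(
    rating=4,
    touchpoint="support",
    plan="growth",
    mrr_band="500-1000",
    tenure_months=7,
    channel="email",
    timestamp=datetime(2024, 1, 15, tzinfo=timezone.utc),
    owner="support-pod-a",
)
print(asdict(example)["touchpoint"])  # support
```

Storing segment fields on the response itself, rather than joining them in later, preserves what the customer looked like at the moment they answered.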

Operating cadence

- Weekly review: trends by touchpoint + top themes from comments

- Monthly review: segment analysis + correlation to churn/expansion

- Quarterly review: revisit where you measure and whether the program predicts retention

CSAT benchmarks (useful, not misleading)

There is no universal "good CSAT," because it depends on touchpoint, customer expectations, and scale. Still, founders need a starting point.

Use these as rough reference ranges for SaaS teams with consistent sampling:

| Touchpoint | Rough range many teams see | Notes |
|---|---|---|
| Support ticket CSAT | 85%–95% | High variance by complexity; enterprise issues can score lower even with good work. |
| Onboarding CSAT | 80%–90% | Drops often indicate unclear setup steps or longer-than-expected time to value. |
| Billing CSAT | 75%–90% | Sensitive to payment failures and proration confusion; small issues cause outsized dissatisfaction. |
| Renewal/QBR CSAT | 75%–90% | Reflects perceived ROI and relationship health; segment by contract size. |

Better than chasing an external benchmark: establish your baseline by touchpoint and segment, then set a goal like "raise billing CSAT from 74% to 82% in 60 days."

CSAT vs. NPS: how to use both

If you're already tracking NPS (Net Promoter Score), don't replace it with CSAT. Use them differently:

- CSAT: operational quality at specific moments (fast feedback, fast fixes)

- NPS: loyalty and advocacy (slower-moving, strategy signal)

A healthy pattern for many SaaS companies:

- CSAT is stable and high in critical touchpoints

- NPS trends upward as product value compounds and positioning sharpens

If CSAT is high but NPS is low, customers may be satisfied with interactions but not excited about outcomes or differentiation.

The bottom line

CSAT is a simple metric with real leverage—if you treat it as a segmented, event-based signal tied to actions. Track it by touchpoint, always show response volume, and connect it to retention outcomes like Logo Churn and NRR (Net Revenue Retention). Done right, CSAT becomes an early-warning system you can actually operate from.

Frequently asked questions

How is CSAT different from NPS and CES?

CSAT measures satisfaction with a specific moment (a ticket, onboarding, renewal). NPS (Net Promoter Score) focuses on long-term loyalty and word-of-mouth. CES (Customer Effort Score) measures how hard it was to get something done. Use CSAT for operational fixes, NPS for brand momentum, and CES for friction reduction.

What is a good CSAT score?

Benchmarks depend on where you measure. Support-ticket CSAT often lands in the mid 80s to mid 90s when teams are staffed and consistent. Product or relationship CSAT tends to run lower and vary by segment. Treat your own trailing 8-to-12-week baseline as the real benchmark, then improve it.

Why is CSAT high while customers still churn?

The most common reason is that you are surveying the wrong moment or the wrong customers. Support CSAT can improve while product value or ROI declines. Segment CSAT by plan, tenure, and touchpoint, then compare it to retention metrics like Logo Churn and Net MRR Churn Rate. Look for mismatches and lag effects.

How many responses do you need to trust CSAT?

Avoid overreacting to small samples. As a rule, fewer than 30 responses per segment per time window is volatile and easily biased by a few outliers. Always show CSAT alongside response counts and response rate. If volume is low, review verbatim feedback and trends over longer windows.

What should you do when CSAT drops?

First, confirm it is real: check response volume, survey timing, and whether a single customer skewed results. Then localize the drop by touchpoint, team, and segment. Correlate with operational signals like response times, uptime incidents, or billing failures. Fix the root cause, then follow up with detractors and re-measure.