Active users (DAU/WAU/MAU)

Active users is one of the earliest "truth signals" you get about retention risk. Revenue often lags reality: customers can stay subscribed for weeks while usage quietly collapses. If you watch active users well, you catch churn and contraction earlier—and you can usually fix the underlying product or onboarding issue before it hits MRR (Monthly Recurring Revenue).

Definition (plain English): Active users are the unique users who performed a defined meaningful action in your product during a time window—daily (DAU), weekly (WAU), or monthly (MAU).

What counts as "active" in practice

The hardest part of DAU/WAU/MAU isn't the math—it's defining an activity event that reflects value, not noise.

Choose a "core value action"

Good "active" definitions usually meet these criteria:

- Value-aligned: The user did something that correlates with retention or expansion (not just browsing).

- Repeatable: It can happen multiple times over a customer lifecycle.

- Comparable over time: It doesn't change meaning every time you redesign navigation.

Examples by product type:

| SaaS type | Bad active definition | Better active definition |
|---|---|---|
| B2B reporting | Login | Created or scheduled a report |
| Dev tool | Pageview | Ran a build, deployed, or pushed an integration run |
| CRM | Session started | Logged an activity, updated pipeline stage, sent email |
| Accounting | Viewed dashboard | Created invoice, reconciled transaction, closed books task |

If you're not sure what correlates with retention, start with a shortlist of candidate actions and validate using Cohort Analysis: users who do the action in their first week should retain better than those who don't.
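Here is a minimal Python sketch of that check. It assumes you already have each user's first-week actions and a retained/not-retained flag, and how you define "retained" (for example, still active in week 8) is your call. The function name, argument names, and event names are hypothetical.

```python
def retention_by_action(first_week_actions, retained, action):
    """Retention rate for users who did `action` in their first week vs. those who didn't.

    first_week_actions: dict of user_id -> set of action names performed in days 0-6
    retained: dict of user_id -> bool (your own retention definition)
    Returns (rate_with_action, rate_without_action); a rate is None if its group is empty.
    """
    did, did_not = [], []
    for user, actions in first_week_actions.items():
        group = did if action in actions else did_not
        group.append(retained.get(user, False))

    def rate(flags):
        return sum(flags) / len(flags) if flags else None

    return rate(did), rate(did_not)


first_week = {
    "u1": {"report_created"},
    "u2": set(),
    "u3": {"report_created", "data_exported"},
    "u4": {"data_exported"},
}
retained = {"u1": True, "u2": False, "u3": True, "u4": False}

print(retention_by_action(first_week, retained, "report_created"))  # (1.0, 0.0)
print(retention_by_action(first_week, retained, "data_exported"))   # (0.5, 0.5)
```

A candidate that separates the two groups (report_created in this toy data) is worth keeping on the shortlist. One that doesn't (data_exported) probably isn't your core value action. With real data, check group sizes before trusting a gap, and treat it as correlation rather than proof of cause.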

Separate "active users" from "active customers"

In B2B, one paying account might have 2 users or 200. That's why it's common to track both:

- Active users: unique end users doing value actions.

- Active accounts/customers: unique paying entities with at least one active user.

This distinction matters whenever pricing depends on seats, adoption inside teams, or champion risk. (See Active Customer Count.)
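As a minimal Python sketch, active accounts can be derived from active users. This assumes a user-to-account mapping and a set of currently paying accounts, both hypothetical inputs. The sketch also returns active users per account, which is how you watch adoption inside each account.

```python
from collections import defaultdict


def active_accounts(active_user_ids, user_to_account, paying_accounts):
    """Count paying accounts with at least one active user.

    active_user_ids: set of users who did a value action in the window
    user_to_account: dict of user_id -> account_id
    paying_accounts: set of account_ids that are currently paying
    Returns (active_account_count, {account_id: active_user_count}).
    """
    users_by_account = defaultdict(set)
    for user in active_user_ids:
        account = user_to_account.get(user)
        if account in paying_accounts:  # skips unmapped users and non-paying accounts
            users_by_account[account].add(user)
    # Sorted by account ID so the output order is stable.
    per_account = {acct: len(users) for acct, users in sorted(users_by_account.items())}
    return len(per_account), per_account


count, per_account = active_accounts(
    active_user_ids={"a1", "a2", "b1", "t1"},
    user_to_account={"a1": "acme", "a2": "acme", "b1": "beta", "t1": "trial-co"},
    paying_accounts={"acme", "beta", "gamma"},
)
print(count, per_account)  # 2 {'acme': 2, 'beta': 1}
```

Note that "gamma" is paying but has no active users, so it drops out of the active-account count. That is exactly the kind of account worth a closer look.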

The Founder's perspective

If active accounts are stable but active users per account is falling, you don't have a pure churn problem—you have an adoption problem. That changes the playbook: less "save the account," more "fix onboarding, permissions, and internal sharing."

Be explicit about edge cases

Your metric will be more trustworthy if you specify these upfront:

- Identity: how you dedupe users across devices, emails, SSO, and invites.

- Time zone: consistent definition (e.g., UTC) to avoid "day" shifting.

- Bots and internal users: exclude test accounts, employees, monitoring.

- Read-only roles: decide whether "viewer" activity should count.
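The first three rules can be sketched as a normalization step that runs before any counting. This is a minimal Python sketch under several assumptions: timestamps are timezone-aware, a hypothetical alias table maps emails, device IDs, and SSO subjects to one canonical user ID, and you keep a list of excluded internal or bot IDs. Read-only roles would need a role lookup, which is left out here.

```python
from datetime import datetime, timedelta, timezone


def normalize_events(raw_events, alias_to_user, excluded_users):
    """Turn raw (identity, timestamp) events into deduplicated (user_id, utc_day) pairs.

    raw_events: iterable of (identity, timezone-aware datetime)
    alias_to_user: dict of email / device ID / SSO subject -> canonical user_id
    excluded_users: canonical IDs for employees, test accounts, and monitoring bots
    """
    pairs = set()
    for identity, ts in raw_events:
        user = alias_to_user.get(identity, identity)  # unknown identities stand alone
        if user in excluded_users:
            continue
        utc_day = ts.astimezone(timezone.utc).date()  # one fixed definition of "day"
        pairs.add((user, utc_day))
    return pairs


utc_minus_5 = timezone(timedelta(hours=-5))
raw = [
    ("ana@example.com", datetime(2024, 3, 1, 23, 30, tzinfo=utc_minus_5)),
    ("device-42", datetime(2024, 3, 2, 9, 0, tzinfo=timezone.utc)),
    ("qa-bot", datetime(2024, 3, 2, 10, 0, tzinfo=timezone.utc)),
]
aliases = {"ana@example.com": "user-1", "device-42": "user-1"}

pairs = normalize_events(raw, aliases, excluded_users={"qa-bot"})
print(pairs)  # {('user-1', datetime.date(2024, 3, 2))}
```

The late-evening local event lands on March 2 in UTC, so both of Ana's identities collapse into one active user on one day, and the QA bot disappears.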

How DAU, WAU, and MAU are calculated

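At its core, each metric is a distinct count over a time window.

A clean way to express it:

Active users (window W) = number of distinct users with at least one qualifying value action during W

W is one day for DAU, seven days for WAU, and 28 or 30 days (or a calendar month) for MAU.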
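In Python, DAU, WAU, and MAU are the same distinct count with different rolling windows ending on an as-of day. This minimal sketch assumes events have already been filtered to qualifying value actions and normalized to (user_id, UTC day) pairs. The 28-day MAU default is one of the choices mentioned in this article, not a standard.

```python
from datetime import date, timedelta


def active_users(user_days, as_of, window_days):
    """Distinct users with a qualifying action in the `window_days` days ending on `as_of` (inclusive)."""
    start = as_of - timedelta(days=window_days - 1)
    return len({user for user, day in user_days if start <= day <= as_of})


def dau(user_days, as_of):
    return active_users(user_days, as_of, 1)


def wau(user_days, as_of):
    return active_users(user_days, as_of, 7)


def mau(user_days, as_of, window_days=28):
    return active_users(user_days, as_of, window_days)


user_days = [
    ("u1", date(2024, 3, 10)),
    ("u1", date(2024, 3, 4)),   # same user again: still counted once
    ("u2", date(2024, 3, 5)),
    ("u3", date(2024, 2, 20)),
]
as_of = date(2024, 3, 10)
print(dau(user_days, as_of), wau(user_days, as_of), mau(user_days, as_of))  # 1 2 3
```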

Pick "rolling" vs "calendar" windows

This is a common source of confusion:

- Rolling WAU (last 7 days): best for monitoring and dashboards; smoother; fewer calendar artifacts.

- Calendar WAU (Mon–Sun): useful for weekly business reviews and planning, but more "jumpy."

- Rolling MAU (last 28/30 days): best for trend detection.

- Calendar MAU (month-to-date): useful for reporting, but hard to compare mid-month.

My practical rule: use rolling windows for product decisions, and calendar windows for financial or executive cadence—just don't mix them without labeling clearly.
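This minimal Python sketch shows why the two windows disagree. It assumes (user_id, day) pairs of qualifying actions and Monday-start calendar weeks. On a Wednesday, rolling WAU still includes last Friday's users, while calendar WAU has already reset.

```python
from datetime import date, timedelta


def distinct_users(user_days, start, end):
    """Distinct users active on any day in [start, end], inclusive."""
    return len({user for user, day in user_days if start <= day <= end})


def rolling_wau(user_days, as_of):
    # The 7 days ending on as_of, whatever weekday that is.
    return distinct_users(user_days, as_of - timedelta(days=6), as_of)


def calendar_wau(user_days, as_of):
    # The Monday-to-Sunday week containing as_of (date.weekday() is 0 on Monday).
    # Mid-week this is effectively week-to-date, since later days have no data yet.
    monday = as_of - timedelta(days=as_of.weekday())
    return distinct_users(user_days, monday, monday + timedelta(days=6))


def month_to_date_mau(user_days, as_of):
    return distinct_users(user_days, as_of.replace(day=1), as_of)


user_days = [
    ("u1", date(2024, 3, 8)),   # Friday of the previous week
    ("u2", date(2024, 3, 11)),  # Monday
    ("u3", date(2024, 3, 13)),  # Wednesday
]
wednesday = date(2024, 3, 13)
print(rolling_wau(user_days, wednesday))        # 3
print(calendar_wau(user_days, wednesday))       # 2
print(month_to_date_mau(user_days, wednesday))  # 3
```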

Avoid double-counting across events

If "active" can be triggered by multiple events (e.g., created report OR exported data), your query should still count unique users once.

Also watch for "event storms" (one action emitting multiple events). Instrumentation bugs can inflate actives overnight.
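Both points can be sketched in Python, assuming one day of (user_id, event_name) pairs and hypothetical event names. The set keeps a user who fires several qualifying events counted once. Because of that, a storm from one real user shows up in event volume rather than in the user count, so this minimal sketch flags it separately. The 200-events threshold is a placeholder to calibrate against your own history.

```python
from collections import Counter

# Hypothetical event names that all qualify a user as active.
QUALIFYING_EVENTS = {"report_created", "report_scheduled", "data_exported"}


def active_user_count(events):
    """events: iterable of (user_id, event_name) for one day. Each user counts once."""
    return len({user for user, name in events if name in QUALIFYING_EVENTS})


def storm_suspects(events, max_events_per_user=200):
    """Users whose qualifying-event volume looks like an instrumentation loop."""
    per_user = Counter(user for user, name in events if name in QUALIFYING_EVENTS)
    return {user for user, n in per_user.items() if n > max_events_per_user}


events = [
    ("u1", "report_created"),
    ("u1", "data_exported"),    # second qualifying event, same user
    ("u2", "page_viewed"),      # not a value action
    ("u3", "report_scheduled"),
] + [("u4", "data_exported")] * 500  # one action that fired 500 events

print(active_user_count(events))  # 3
print(storm_suspects(events))     # {'u4'}
```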

What this metric actually reveals

Founders often ask, "Is DAU/WAU/MAU a growth metric or a retention metric?" It's both—but only when you interpret it in context.

1) Demand vs adoption

Active users rise when either of these improves:

- More users enter the product (acquisition, virality, invites, SSO rollouts).

- More users successfully adopt (activation, habit formation, feature usability).

If signups jump but MAU barely moves, you likely have an activation bottleneck. Pair active user trends with leading activation indicators like Onboarding Completion Rate and Product activation, and with deeper usage measures like Feature Adoption Rate.

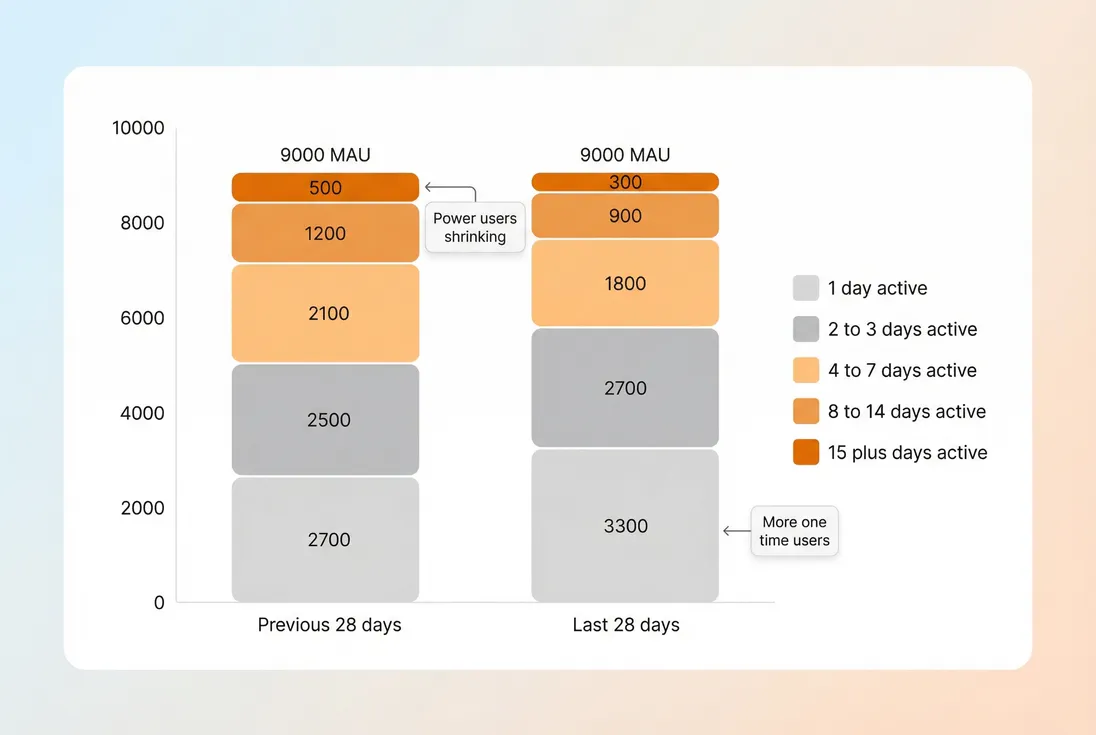

2) Engagement depth (not just reach)

MAU can stay flat while engagement quality deteriorates. One of the most useful companion views is a frequency distribution: "How many days were users active in the last 28 days?"

This "depth" view is where you see risk early: power users (high-frequency) often drive internal advocacy, seat expansion, and renewal confidence.
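Here is a minimal Python sketch of the active-days distribution, assuming (user_id, day) pairs of qualifying actions. Repeated events on the same day count as one active day. Users with zero active days in the window simply don't appear, since they aren't in MAU either.

```python
from collections import Counter
from datetime import date, timedelta


def active_days_distribution(user_days, as_of, window_days=28):
    """Map 'number of active days in the window' -> 'number of users with that many'."""
    start = as_of - timedelta(days=window_days - 1)
    days_per_user = Counter()
    for user, day in set(user_days):  # set() collapses repeat events on the same day
        if start <= day <= as_of:
            days_per_user[user] += 1
    return Counter(days_per_user.values())


user_days = (
    [("u1", date(2024, 3, d)) for d in range(1, 21)]  # active on 20 days
    + [("u2", date(2024, 3, 5)), ("u2", date(2024, 3, 6))]
    + [("u3", date(2024, 3, 7)), ("u3", date(2024, 3, 7))]  # duplicate event
)
dist = active_days_distribution(user_days, as_of=date(2024, 3, 28))
print(sorted(dist.items()))  # [(1, 1), (2, 1), (20, 1)]

# Share of monthly actives who are high-frequency (the 12-day cutoff is an arbitrary example).
power_share = sum(n for days, n in dist.items() if days >= 12) / sum(dist.values())
print(round(power_share, 2))  # 0.33
```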

3) Stickiness and product cadence fit

If your product is naturally used daily, DAU matters a lot. If it's weekly, DAU will look "low" even when retention is great.

That's why ratios like DAU/MAU exist—to normalize for window size and get a stickiness signal. (See DAU/MAU Ratio (Stickiness).)
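One common way to compute the ratio is average DAU over a rolling 28-day window divided by the distinct users in that window, shown here as a minimal Python sketch. Some teams instead divide a single day's DAU by trailing MAU. Whichever variant you pick, keep it fixed.

```python
from datetime import date, timedelta


def dau_mau_ratio(user_days, as_of, window_days=28):
    """Average daily actives over the window divided by distinct actives (MAU) in it."""
    start = as_of - timedelta(days=window_days - 1)
    active_pairs = {(user, day) for user, day in user_days if start <= day <= as_of}
    mau = len({user for user, _ in active_pairs})
    if mau == 0:
        return 0.0
    # Summing DAU over every day equals the number of distinct (user, day) pairs.
    average_dau = len(active_pairs) / window_days
    return average_dau / mau


as_of = date(2024, 3, 28)
user_days = [("daily", as_of - timedelta(days=k)) for k in range(28)]  # every day
user_days.append(("monthly", date(2024, 3, 15)))                      # once
print(round(dau_mau_ratio(user_days, as_of), 3))  # 0.518
```

Rearranged, the same number is average active days per monthly user divided by the window length, here (29 / 2) / 28. That is why DAU/MAU and the active-days distribution tend to move together.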

The Founder's perspective

Don't punish your product for having a weekly job-to-be-done. Choose the cadence your customers actually need, then optimize for "reliable return" on that cadence.

When active users mislead you

DAU/WAU/MAU is easy to misuse. Here are the failure modes that create bad decisions.

"Active" is too top-of-funnel

Counting "visited dashboard" inflates actives, especially if you email customers to click a link. That can make you think retention improved when you just improved clickthrough.

Fix: define activity as a downstream value action and keep it stable.

Measurement changed

Common causes:

- event names changed in tracking

- identity merge/unmerge issues (SSO migration is a big one)

- mobile vs web dedupe changed

- internal QA started hitting production

Fix: maintain a small "analytics changelog" and annotate graphs when you ship tracking changes.

Account structure hides the real story

For multi-seat B2B, active users can fall because a single large account rolled off or reduced usage. That's not a product-wide engagement issue; it's concentration.

Fix: segment by account size or plan tier, and pair with revenue health metrics like Customer Concentration Risk.

Seasonality and work patterns

B2B products often drop:

- weekends (obvious)

- end-of-year holidays

- end-of-quarter crunches (some categories spike instead)

Fix: compare against the same day-of-week and use rolling windows. If you're doing deep analysis, use cohorts (next section).
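Comparing against the same weekday takes only a few lines of Python. This minimal sketch assumes a dict of daily active counts keyed by date, and the numbers are made up for illustration.

```python
from datetime import date, timedelta


def same_weekday_change(daily_actives, day):
    """Fractional DAU change vs. the same weekday one week earlier.

    daily_actives: dict of date -> DAU. Returns None if either day is missing
    or the earlier value is zero.
    """
    current = daily_actives.get(day)
    previous = daily_actives.get(day - timedelta(days=7))
    if current is None or not previous:
        return None
    return (current - previous) / previous


daily_actives = {
    date(2024, 3, 4): 400,   # Monday
    date(2024, 3, 9): 120,   # Saturday
    date(2024, 3, 11): 380,  # Monday
    date(2024, 3, 16): 126,  # Saturday
}
print(same_weekday_change(daily_actives, date(2024, 3, 16)))  # 0.05: Saturday vs Saturday
print(same_weekday_change(daily_actives, date(2024, 3, 11)))  # -0.05: Monday vs Monday
```

A naive comparison of Monday March 11 with Saturday March 9 would show a huge jump that is only the weekend ending. The same-weekday view shows a small decline instead.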

How founders use active users to make decisions

Treat DAU/WAU/MAU less like a score and more like a diagnostic instrument. Founders use it to answer a few recurring operational questions.

Are new users activating fast enough?

If signups increase but actives don't, you're leaking value early.

A practical workflow:

- Track signups (or new invited users) alongside WAU.

- Create a "new user active within 7 days" view.

- Break it down by acquisition channel, segment, or onboarding path.
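Here is a minimal Python sketch of the "new user active within 7 days" view broken down by channel. It assumes a hypothetical signup table (date and channel per user) and each user's first qualifying-action date. Users whose 7-day window hasn't finished yet are left out so they don't drag the rate down.

```python
from collections import defaultdict
from datetime import date, timedelta


def activation_rate_by_channel(signups, first_value_action, as_of, days=7):
    """Share of new users reaching the core value action within `days` of signup.

    signups: dict of user_id -> (signup_date, channel)
    first_value_action: dict of user_id -> date of first qualifying action
    as_of: last day with complete data. The signup day is day 0, so the
    window covers days 0 to days - 1.
    """
    window = timedelta(days=days)
    total, activated = defaultdict(int), defaultdict(int)
    for user, (signed_up, channel) in signups.items():
        if signed_up + window - timedelta(days=1) > as_of:
            continue  # window still open; excluding avoids undercounting
        total[channel] += 1
        first = first_value_action.get(user)
        if first is not None and timedelta(0) <= first - signed_up < window:
            activated[channel] += 1
    return {channel: activated[channel] / total[channel] for channel in sorted(total)}


signups = {
    "u1": (date(2024, 3, 1), "ads"),
    "u2": (date(2024, 3, 1), "ads"),
    "u3": (date(2024, 3, 2), "referral"),
    "u4": (date(2024, 3, 12), "ads"),  # window not finished on the as-of date
}
first_value_action = {
    "u1": date(2024, 3, 3),
    "u2": date(2024, 3, 12),  # activated, but too late to count
    "u3": date(2024, 3, 2),
}
rates = activation_rate_by_channel(signups, first_value_action, as_of=date(2024, 3, 15))
print(rates)  # {'ads': 0.5, 'referral': 1.0}
```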

This is where Time to Value (TTV) and Onboarding Completion Rate become highly actionable: shorten the path from "account created" to the core action that qualifies a user as active.

The Founder's perspective

If WAU from new users falls, assume you have an onboarding or positioning problem until proven otherwise. You can't spend your way out of an activation leak—CAC just gets more expensive. (See CAC (Customer Acquisition Cost).)

Is usage decay predicting churn?

Usage declines often precede:

- downgrades (contraction)

- non-renewal risk

- champion churn inside the account

Two practical ways to connect usage to retention:

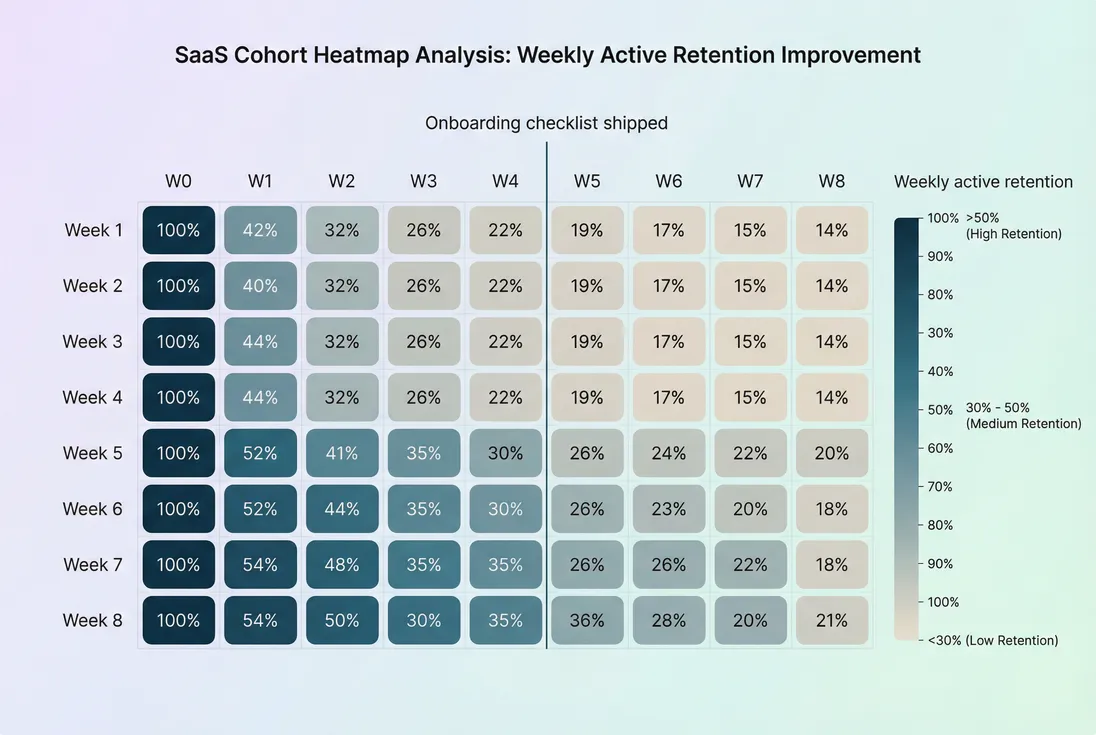

- Cohort-based active retention: "Of users who became active in week 1, what percent are active in week 4, week 8…?"

- Account-level "silence detection": "Accounts with zero actives for 14 days" (tune the window to your usage cycle).
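Both views can be sketched in Python. This minimal sketch assumes (user_id, day) pairs of qualifying actions with enough history to know when each user was first active, plus a hypothetical user-to-account mapping. Cohort weeks start on the cohort's first day. Index 0 of the result is the cohort's own first week (the "week 1" above), so it is always 1.0. The 14-day quiet period is the example from the text.

```python
from datetime import date, timedelta


def cohort_active_retention(user_days, cohort_start, weeks):
    """Share of users first active in the week starting cohort_start who are active in each week.

    Index 0 is the cohort's own week (always 1.0); index k is k weeks later.
    """
    first_seen = {}
    for user, day in user_days:
        if user not in first_seen or day < first_seen[user]:
            first_seen[user] = day
    cohort_end = cohort_start + timedelta(days=6)
    cohort = {u for u, d in first_seen.items() if cohort_start <= d <= cohort_end}
    if not cohort:
        return []
    rates = []
    for k in range(weeks):
        start = cohort_start + timedelta(weeks=k)
        end = start + timedelta(days=6)
        active = {u for u, d in user_days if start <= d <= end}
        rates.append(len(cohort & active) / len(cohort))
    return rates


def silent_accounts(user_days, user_to_account, accounts, as_of, quiet_days=14):
    """Accounts with zero active users in the `quiet_days` days ending on as_of."""
    start = as_of - timedelta(days=quiet_days - 1)
    recently_active = {user_to_account.get(u) for u, d in user_days if start <= d <= as_of}
    return set(accounts) - recently_active


user_days = [
    ("u1", date(2024, 3, 4)), ("u2", date(2024, 3, 5)),    # cohort: week of Mar 4
    ("u1", date(2024, 3, 12)), ("u3", date(2024, 3, 12)),  # u3 first appears later
    ("u2", date(2024, 3, 26)),
]
retention = cohort_active_retention(user_days, date(2024, 3, 4), weeks=4)
print(retention)  # [1.0, 0.5, 0.0, 0.5]

user_to_account = {"u1": "acme", "u2": "beta", "u3": "gamma"}
silent = silent_accounts(user_days, user_to_account, set(user_to_account.values()),
                         as_of=date(2024, 3, 31))
print(sorted(silent))  # ['acme', 'gamma']
```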

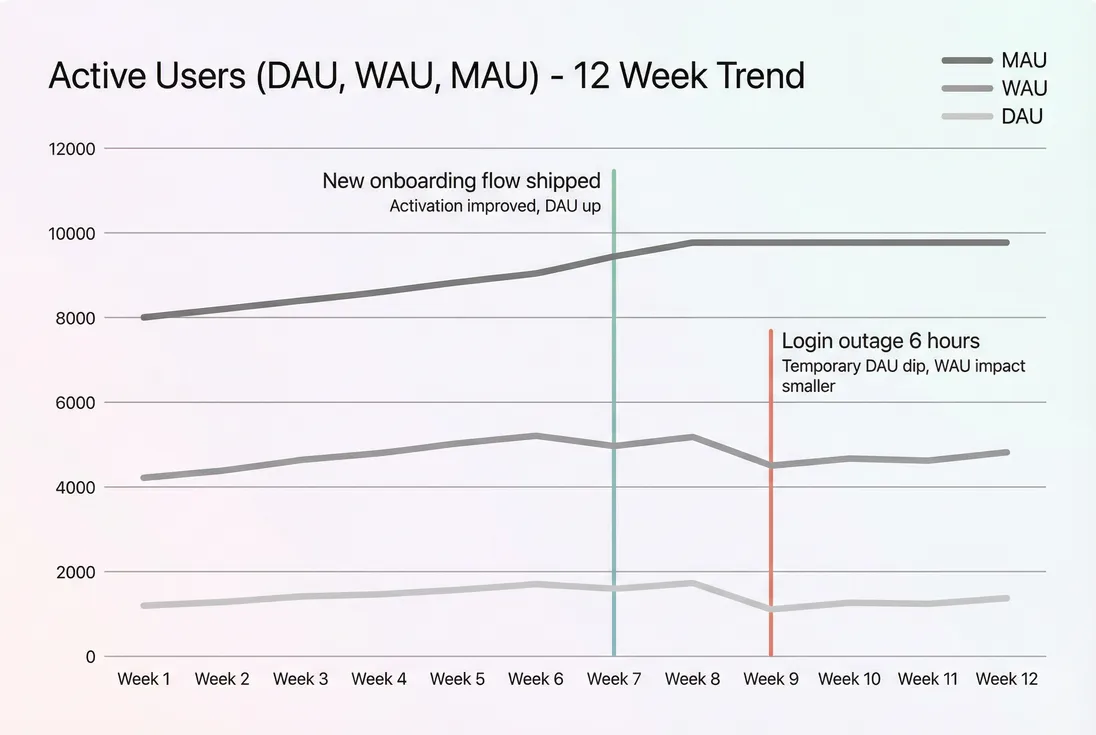

Here's what cohort active retention looks like when onboarding improves:

This is the moment to pair usage with churn and retention metrics:

- If active retention improves, you should eventually see better Logo Churn and NRR (Net Revenue Retention).

- If active retention worsens while revenue is flat, expect future pressure on GRR (Gross Revenue Retention).

Should we optimize product or go-to-market?

Active users helps you decide whether your next constraint is:

- Distribution constraint (not enough new users entering)

- Activation constraint (new users enter but don't stick)

- Engagement constraint (users activate but don't form habits)

A simple diagnostic table:

| Symptom | Likely constraint | What to do next |
|---|---|---|
| Signups up, MAU flat | activation | improve onboarding, reduce setup steps, clarify first success |
| MAU up, DAU/WAU flat | shallow engagement | improve recurring workflows, notifications, templates, integrations |
| DAU down after release | workflow friction | rollback, fix UX, measure task completion time |
| Actives stable, MRR down | monetization/pricing mix | review packaging, discounts, expansion paths (see ASP (Average Selling Price)) |

The Founder's perspective

Active users doesn't tell you "do more marketing" or "build more features." It tells you where the system is leaking: acquisition, activation, or habit. That focus prevents random roadmap churn.

How to interpret changes without overreacting

A founder's job is to respond quickly—but not noisily. Here's how to read changes responsibly.

Look at the right comparison

- Day-over-day DAU is often meaningless (day-of-week effect).

- Prefer week-over-week for DAU and WAU.

- Prefer month-over-month for MAU (or rolling 28-day comparisons).

Segment before concluding

If overall WAU drops 8%, segment it:

- new vs existing users

- small vs large accounts

- plan tiers

- key feature users vs non-users

- geography or industry (if relevant)

A drop isolated to new users points to onboarding or traffic quality. A drop isolated to large accounts points to churn risk and customer success.
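Here is a minimal Python sketch of segmenting before concluding. It assumes sets of active user IDs for two comparable windows and a segment label per user that stays fixed for the comparison, such as plan tier. The labels are hypothetical. For new vs. existing, fix the label once (for example, by signup date relative to the earlier window) so users don't switch segments mid-comparison.

```python
from collections import Counter


def change_by_segment(previous_active, current_active, segment_of):
    """Active-user counts per segment in two windows, plus the change.

    previous_active, current_active: sets of active user IDs
    segment_of: dict of user_id -> segment label; unknown users go to "unknown"
    Returns {segment: (previous, current, change)}, largest drop first.
    """
    prev = Counter(segment_of.get(u, "unknown") for u in previous_active)
    curr = Counter(segment_of.get(u, "unknown") for u in current_active)
    rows = {
        seg: (prev[seg], curr[seg], curr[seg] - prev[seg])
        for seg in set(prev) | set(curr)
    }
    # Sort by change (largest drop first), then by name for a stable order.
    return dict(sorted(rows.items(), key=lambda item: (item[1][2], item[0])))


segment_of = {"a": "pro", "b": "pro", "c": "starter", "d": "starter", "e": "pro"}
result = change_by_segment({"a", "b", "c", "d"}, {"a", "b", "e"}, segment_of)
print(result)  # {'starter': (2, 0, -2), 'pro': (2, 3, 1)}
```

Overall actives fell from 4 to 3, yet the pro tier grew. The whole drop sits in starter, which calls for a very different response than a product-wide decline would.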

Tie usage to outcomes

Usage is not the business by itself. It becomes powerful when connected to outcomes:

- Higher active retention → better renewal probability

- Higher depth of usage → higher expansion likelihood

- Lower activity → higher support burden and dissatisfaction risk

If you have the data, validate relationships: users active at least X days in 28 tend to renew at Y%. If you don't have the data yet, start by measuring and building that linkage over time.
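One way to start validating that relationship is to compare renewal rates for accounts above and below an activity threshold, shown here as a minimal Python sketch. It assumes that for each account you have the number of days with at least one active user in the 28 days before the renewal decision, and whether the account renewed. The data below is made up.

```python
def renewal_rate_split(accounts, min_active_days):
    """Renewal rate for accounts at or above vs. below an activity threshold.

    accounts: list of (active_days_in_28, renewed) tuples, measured before renewal
    Returns (rate_at_or_above, rate_below); a rate is None if its group is empty.
    """
    def rate(flags):
        return sum(flags) / len(flags) if flags else None

    above = [renewed for days, renewed in accounts if days >= min_active_days]
    below = [renewed for days, renewed in accounts if days < min_active_days]
    return rate(above), rate(below)


history = [(20, True), (15, True), (12, False), (8, True), (3, False), (1, False)]
for threshold in (5, 10, 15):
    above, below = renewal_rate_split(history, threshold)
    print(threshold, round(above, 2), round(below, 2))
# 5 0.75 0.0
# 10 0.67 0.33
# 15 1.0 0.25
```

With a handful of accounts, differences like these are noise. Only trust a threshold that holds up with enough renewals in each group.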

Practical benchmarks (and how to use them)

Benchmarks are category-dependent. Still, these ranges are often directionally useful:

- Daily workflow B2B: DAU/MAU commonly ~15–35%

- Weekly workflow B2B: focus on WAU/MAU; DAU/MAU may look "low"

- Self-serve B2C: DAU/MAU can be 30%+ if habit-forming

- Low-frequency compliance or finance: MAU can be meaningful while DAU stays very low

Use benchmarks to catch definition errors (e.g., DAU/MAU of 90% is suspicious for most B2B). Use your own cohort trend as the real standard.

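For turning usage into a business narrative, pair it with the revenue retention metrics discussed earlier: Logo Churn, NRR (Net Revenue Retention), and GRR (Gross Revenue Retention).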

A founder-grade checklist for getting DAU/WAU/MAU right

- Write down your active definition (event + constraints + exclusions).

- Pick a primary cadence (DAU vs WAU vs MAU) based on job-to-be-done.

- Standardize windows (rolling vs calendar) and label dashboards clearly.

- Track depth, not just reach (active-days distribution).

- Add cohort active retention to separate acquisition from engagement.

- Annotate tracking changes so you don't "debug the business" when it's just instrumentation.

If you do only one thing: make "active" mean real value, then watch cohorts. That's the difference between a vanity MAU chart and a metric that drives product, retention, and revenue decisions.

Frequently asked questions

An active user should be someone who completes a meaningful value action in your product within the time window. For example, sending an invoice, creating a report, or deploying code. Avoid counting logins or pageviews unless that truly represents value. Consistency matters more than perfection.

Match the window to your product's natural usage cadence. Daily-workflow products care about DAU and DAU/MAU. Weekly planning tools often prioritize WAU. Monthly-close or compliance products may rely on MAU plus task completion rates. Pick one primary view, then use the others to diagnose changes.

Benchmarks vary widely by category, but many B2B tools land around 10 to 30 percent DAU/MAU, with higher values for products embedded in daily workflows. Treat benchmarks as sanity checks, not goals. The best reference is your own trend by segment and cohort over time.

Not necessarily. Revenue can grow from expansion, pricing, or larger customers while user counts remain flat. The risk is concentration: fewer users carrying more revenue can increase churn impact. Compare active users by plan, account size, and retained cohorts, and pair with [NRR (Net Revenue Retention)](/academy/nrr/) and [ARPA (Average Revenue Per Account)](/academy/arpa/).

The most common causes are measurement changes, friction introduced in a core workflow, or a shift in user mix. First verify instrumentation and identity logic. Then segment by new vs existing users, plan, and key features. Use cohort-based active retention to see whether the drop is onboarding or ongoing engagement.