Free trial

Free trials aren't "top-of-funnel." They're a controlled experiment on whether your product can deliver value fast enough to justify paying—before your cash burn forces you to guess.

A free trial is time-limited access to your product at no cost, designed to let a prospective customer experience meaningful value and then convert to a paid subscription (self-serve or sales-assisted).

What a free trial reveals

A free trial is a microscope on three things founders routinely misdiagnose:

- Time-to-value reality (not what your team believes it is).

- Product-market fit strength (users who reach value but still don't pay, versus users who never reach value).

- Go-to-market efficiency (how much "help" it takes to convert and retain).

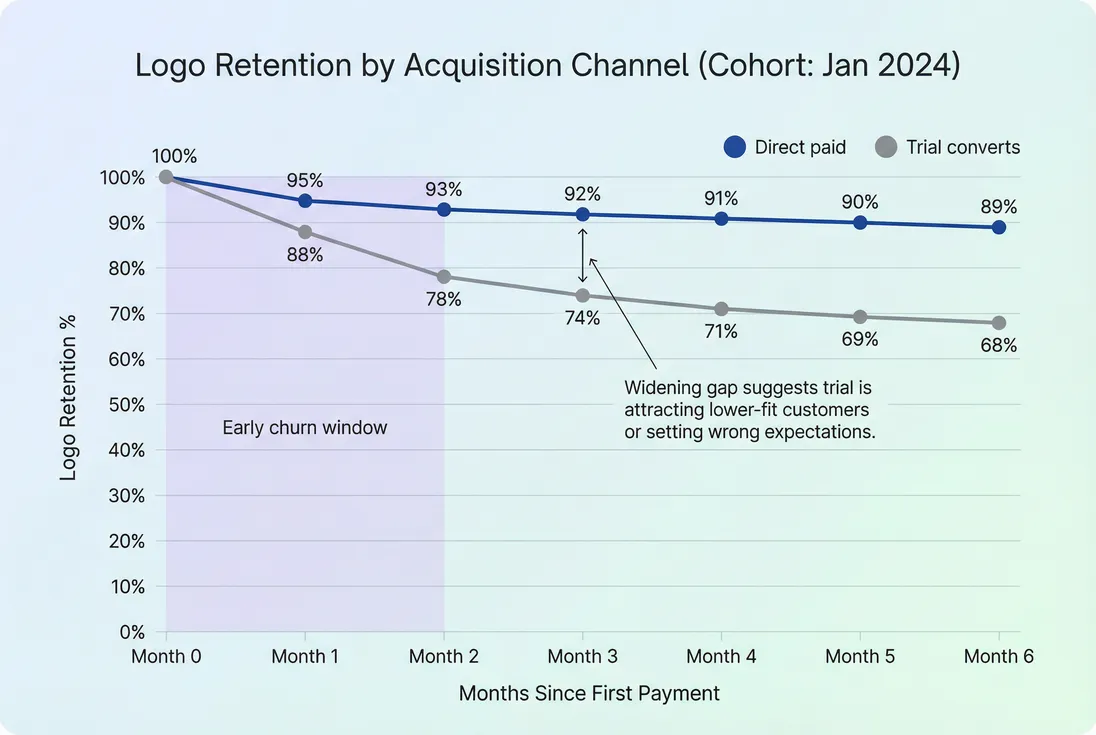

If you only track "trial conversion," you'll miss the most common failure mode: conversion improves while retention worsens. That is usually a sign of pulling forward the wrong buyers, not real progress.

The Founder's perspective: Treat the trial as a revenue quality gate. If you "optimize conversion" by nudging everyone into paying, you may increase short-term MRR (Monthly Recurring Revenue) while quietly increasing Logo Churn 30–90 days later.

How trial performance is calculated

You'll get better decisions by treating the trial like a funnel with clear definitions, not a vague period of free access.

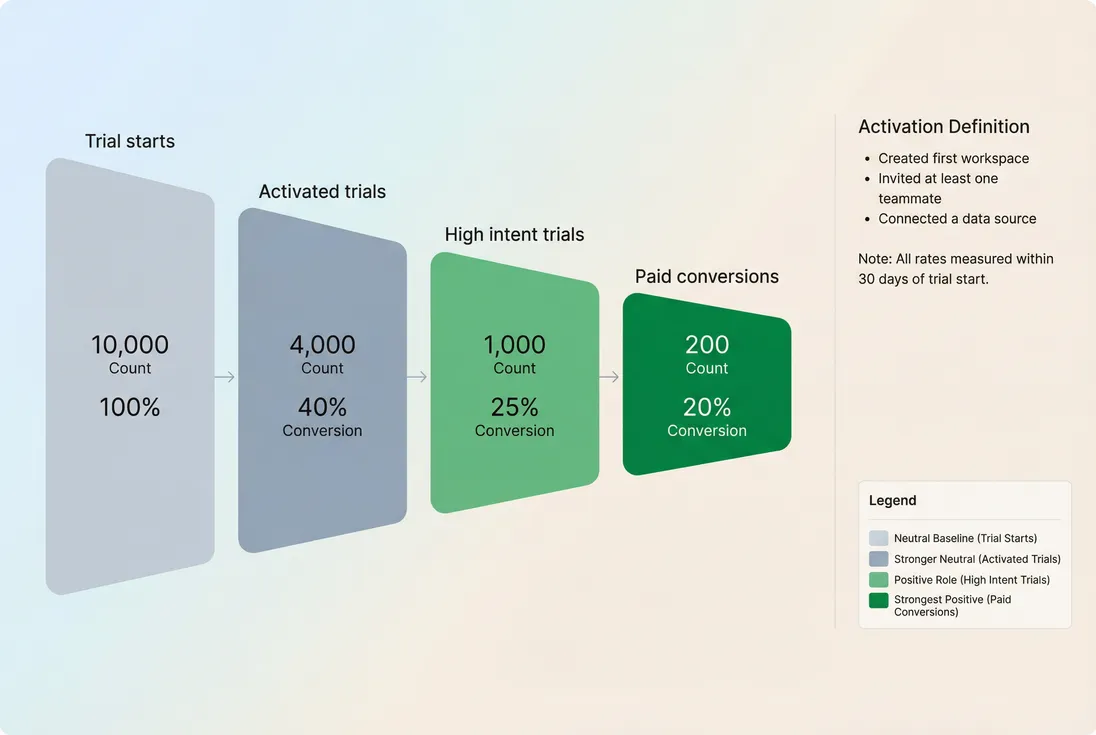

The core trial funnel

At minimum, define and track:

- Trial starts: new trial accounts created (not visits).

- Activation: the smallest set of actions that reliably predicts paid retention.

- Trial-to-paid conversion: trial accounts that become paying customers.

- Time to convert: days from trial start to first payment (or contract signature).

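Here are the two calculations that matter most, both measured against trial starts in the same cohort:

$$\text{Activation rate} = \frac{\text{activated trials}}{\text{trial starts}}$$

$$\text{Trial-to-paid conversion rate} = \frac{\text{trials converted to paid}}{\text{trial starts}}$$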

The practical interpretation:

- If activation rate is low, you have an onboarding/time-to-value problem.

- If activation rate is high but conversion is low, you have a packaging/pricing/trust problem (or the trial gives away too much).

- If conversion is high but early retention is low, your trial is attracting the wrong users or setting the wrong expectations.
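A minimal sketch of the two funnel ratios, assuming both activation and conversion are counted against trial starts in the same cohort. The `TrialFunnel` class and the sample counts are hypothetical and only illustrate the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class TrialFunnel:
    """Counts for one trial cohort (hypothetical structure for illustration)."""
    trial_starts: int
    activated: int
    converted: int

    @property
    def activation_rate(self) -> float:
        # Share of trial starts that reached the activation milestone.
        return self.activated / self.trial_starts if self.trial_starts else 0.0

    @property
    def conversion_rate(self) -> float:
        # Share of trial starts that became paying customers.
        return self.converted / self.trial_starts if self.trial_starts else 0.0


# Made-up numbers: 400 trial starts, 120 activated, 32 paid.
funnel = TrialFunnel(trial_starts=400, activated=120, converted=32)
print(f"activation rate: {funnel.activation_rate:.1%}")  # 30.0%
print(f"trial-to-paid:   {funnel.conversion_rate:.1%}")  # 8.0%
```

Reading the two numbers together is what matters: a low activation rate points at onboarding, while a healthy activation rate with weak conversion points at pricing, packaging, or trust.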

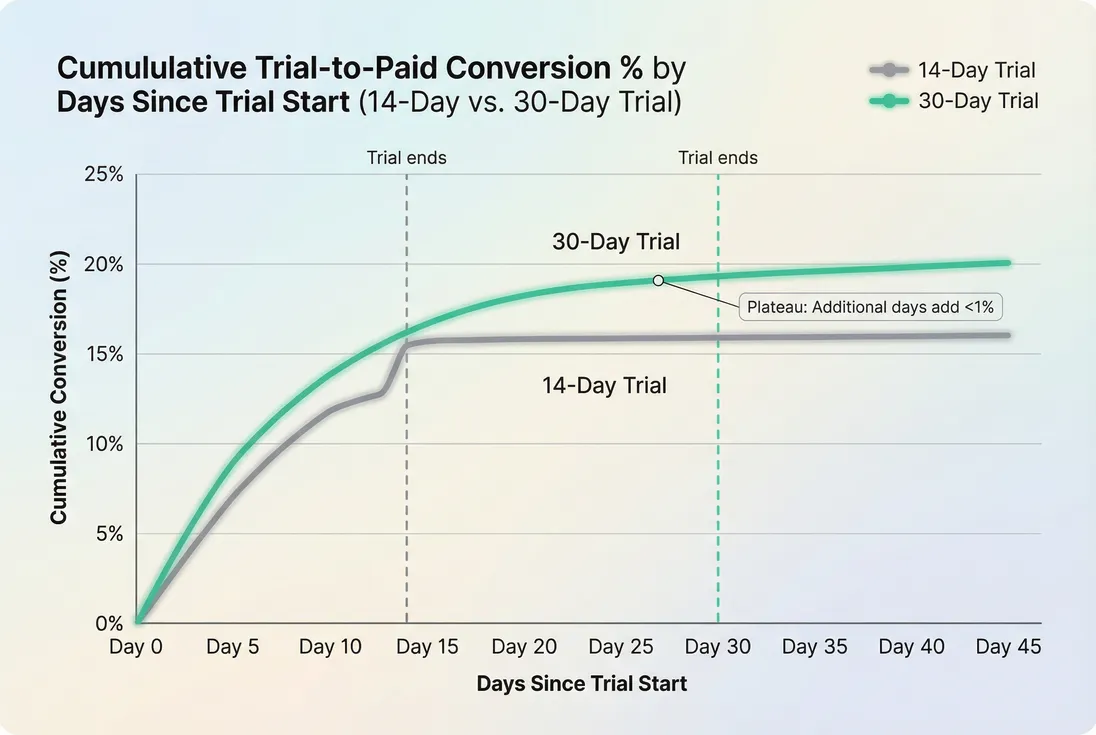

Choose an attribution window (or your data will lie)

Trials convert over time, and changes to trial length or follow-up can shift when conversions happen. Pick a consistent window and stick to it:

- Common: conversion within 14, 30, or 45 days of trial start

- Sales-assisted: measure within 60–90 days if contracting is real

Cohorting by trial start date is critical. It's the same logic you use in Cohort Analysis: you want stable cohorts so you can compare like with like (not "whatever happened to pay this month").
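As a rough sketch of cohorting with a fixed attribution window, the snippet below groups trials by the month they started and counts a conversion only if the first payment landed within the window. The record layout, the 30-day window, and the sample dates are assumptions for illustration.

```python
from collections import defaultdict
from datetime import date

WINDOW_DAYS = 30  # assumed attribution window

# (trial_start, first_payment or None): made-up illustrative records
trials = [
    (date(2024, 1, 3), date(2024, 1, 15)),
    (date(2024, 1, 10), None),
    (date(2024, 1, 20), date(2024, 3, 5)),   # paid, but outside the window
    (date(2024, 1, 28), date(2024, 2, 10)),  # paid in February, still a January cohort
    (date(2024, 2, 2), date(2024, 2, 9)),
    (date(2024, 2, 14), None),
    (date(2024, 2, 20), None),
]


def conversion_by_cohort(records, window_days=WINDOW_DAYS):
    """Return {cohort_month: conversion rate}, cohorted by trial start date."""
    starts = defaultdict(int)
    converts = defaultdict(int)
    for started, paid in records:
        cohort = started.strftime("%Y-%m")
        starts[cohort] += 1
        # Count the conversion only if it happened inside the window.
        if paid is not None and (paid - started).days <= window_days:
            converts[cohort] += 1
    return {c: converts[c] / starts[c] for c in sorted(starts)}


rates = conversion_by_cohort(trials)
for cohort, rate in rates.items():
    print(cohort, f"{rate:.1%}")
```

In practice you would also exclude cohorts whose window has not fully elapsed yet; otherwise the most recent cohorts always look worse than they are.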

If you use GrowPanel, this is where cohorts and filters matter most: segment trial cohorts by acquisition channel, plan, geography, or sales-assist versus self-serve, then compare downstream retention and monetization. GrowPanel's trial insights show trial-to-paid conversion, activation rates, and days-to-conversion with full segmentation. See the docs for cohorts and filters.

Add one "economic" metric: revenue per trial start

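Conversion rate alone ignores pricing mix and expansion. A simple, founder-friendly rollup is:

$$\text{Revenue per trial start} = \frac{\text{new MRR from trial converts in the cohort}}{\text{trial starts in the cohort}}$$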

This helps answer: "Are we creating more revenue per trial, or just more paid logos at a lower ARPA (Average Revenue Per Account)?"
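A small worked example, assuming revenue per trial start is computed as new MRR from a cohort's converts divided by that cohort's trial starts. The function name and the cohort figures are hypothetical.

```python
def revenue_per_trial_start(new_mrr_from_converts, trial_starts):
    """New MRR generated by a trial cohort, spread over every trial start."""
    if trial_starts == 0:
        return 0.0
    return sum(new_mrr_from_converts) / trial_starts


# Cohort A: 200 trial starts, 20 converts at $50/month (10% conversion).
cohort_a = revenue_per_trial_start([50.0] * 20, trial_starts=200)

# Cohort B: 200 trial starts, 30 converts at $25/month (15% conversion).
cohort_b = revenue_per_trial_start([25.0] * 30, trial_starts=200)

print(cohort_a)  # 5.0
print(cohort_b)  # 3.75
```

Cohort B converts better but produces less revenue per trial start, which is the "more paid logos at a lower ARPA" case.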

What moves conversion up or down

Most trial outcomes are driven by a few controllable levers. The mistake is pulling one lever (like requiring a credit card) without understanding what part of the funnel you're trying to fix.

Trial length and urgency

Longer trials usually increase activation (more time) but can decrease urgency (less pressure to decide). Shorter trials force focus but can under-serve complex onboarding.

A practical way to decide: set trial length to time-to-value + buffer:

- If your Time to Value (TTV) is minutes or hours: 7–14 days

- If it requires setup and collaboration: 14–30 days

- If it requires procurement/security review: a "trial" may really be a pilot (different motion, different KPIs)

For more on choosing the right trial length, see "How many days should a SaaS trial be?" and "Designing the perfect SaaS trial."

Credit card required vs not required

This is not a moral choice; it's a qualification mechanism.

Card required tends to:

- reduce trial starts

- increase intent

- increase conversion rate

- increase support load per trial (more serious users ask more)

No card tends to:

- increase trial starts (including low intent)

- put more burden on activation and follow-up to create intent

The correct choice depends on whether your bottleneck is quality or volume.

The Founder's perspective: If sales can't handle the number of trials, don't celebrate "more signups." Either qualify harder (card, firmographic gating, scheduling) or reduce the number of low-intent trial starts so your team spends time where conversion and retention are likely.

Activation definition (the hidden superpower)

Activation is where most teams win or lose. A good activation definition is:

- behavior-based (actions taken, not pages viewed)

- early (achievable quickly)

- predictive (correlates with retention and expansion)

Examples:

- Collaboration product: "created workspace + invited teammate"

- Data product: "connected data source + ran first report"

- Developer tool: "installed SDK + first successful API call"
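One way to test whether a candidate activation event is predictive is to compare paid retention for accounts that did the event against those that did not. This is a sketch under assumed data: the event names, the `retained` flag, and the account records are made up.

```python
def retention_by_event(accounts, event):
    """Return (retention if event done, retention if not done)."""
    def rate(group):
        return sum(a["retained"] for a in group) / len(group) if group else 0.0

    did = [a for a in accounts if event in a["events"]]
    did_not = [a for a in accounts if event not in a["events"]]
    return rate(did), rate(did_not)


# Hypothetical accounts: events seen during the trial, and whether they stayed paid.
accounts = [
    {"events": {"created_workspace", "invited_teammate"}, "retained": True},
    {"events": {"created_workspace", "invited_teammate"}, "retained": True},
    {"events": {"created_workspace", "invited_teammate"}, "retained": False},
    {"events": {"created_workspace"}, "retained": False},
    {"events": {"created_workspace"}, "retained": True},
    {"events": {"created_workspace"}, "retained": False},
    {"events": set(), "retained": False},
    {"events": set(), "retained": False},
]

with_invite, without_invite = retention_by_event(accounts, "invited_teammate")
print(f"invited teammate: {with_invite:.0%} vs {without_invite:.0%}")  # 67% vs 20%
```

A large gap suggests the event is worth including in the activation definition. It shows correlation only, so it is a starting point for experiments rather than proof that the event causes retention.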

Once activation is defined, you can work backwards to improve it with:

- onboarding sequence changes

- templates/sample data

- better defaults

- clearer first-run experience

- lifecycle emails and in-app prompts

If you need a companion metric, track Onboarding Completion Rate as the operational proxy for whether your onboarding is working.

Follow-up motion (self-serve vs sales-assisted)

Two companies can have the same trial conversion rate and completely different economics:

- Self-serve: low CAC, lower ACV, relies on product and lifecycle

- Sales-assisted: higher CAC, higher ACV, relies on human follow-up

This ties directly into CAC (Customer Acquisition Cost) and CAC Payback Period. If sales-assisted trial conversion improves but payback gets worse, you might be adding labor that doesn't scale.
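To make the payback point concrete, here is a sketch that uses the common simplification of CAC payback as CAC divided by monthly gross profit per account. All figures are invented, and the 80% gross margin is an assumption.

```python
def cac_payback_months(cac, monthly_arpa, gross_margin):
    """Months of gross profit needed to recover the cost of acquiring one customer."""
    monthly_gross_profit = monthly_arpa * gross_margin
    if monthly_gross_profit <= 0:
        raise ValueError("monthly gross profit must be positive")
    return cac / monthly_gross_profit


# Hypothetical motions, both at an assumed 80% gross margin.
self_serve = cac_payback_months(cac=300, monthly_arpa=50, gross_margin=0.8)
sales_before = cac_payback_months(cac=6000, monthly_arpa=500, gross_margin=0.8)
# More human follow-up lifts conversion but raises the cost per customer won.
sales_after = cac_payback_months(cac=9000, monthly_arpa=500, gross_margin=0.8)

print(self_serve, sales_before, sales_after)  # roughly 7.5, 15, and 22.5 months
```

If the added sales effort lifts conversion but pushes payback from about 15 to about 22 months, the extra labor is buying customers at a worse price.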

Benchmarks (use with caution)

Benchmarks are only useful when you match your motion and intent level. Here's a pragmatic starting point:

| Motion | Typical trial-to-paid conversion (from trial starts) | What to optimize first |
|---|---|---|
| Self-serve PLG, no credit card | 3–10% | Activation and TTV |
| Self-serve PLG, credit card | 8–20% | Pricing clarity and first value |
| Sales-assisted trials (qualified) | 20–50% | Pipeline quality and sales cycle |

If your conversion is below these ranges, the fix is rarely "more nurture." It's usually:

- the wrong people entering the trial, or

- the right people not reaching value.

When free trials break

A free trial program breaks in predictable ways. Founders should recognize the pattern early, because the symptoms often look like growth.

Pattern 1: Signups rise, revenue doesn't

Likely causes

- no qualification (trial is too easy to start)

- broad targeting, unclear ICP

- product is "interesting" but not necessary

- the trial is being used for free outcomes (students, competitors, one-off use)

What to do

- add lightweight qualification (role, company size, use case)

- tighten the trial's feature set (keep the "aha," gate the ongoing value)

- prioritize acquisition sources with higher activation (use segmented cohorts)

Pattern 2: Conversion rises, early churn rises

Likely causes

- aggressive upgrade prompts before value

- sales closing users who aren't a fit

- discounts masking weak value perception (see Discounts in SaaS)

- onboarding over-promises or hides complexity

What to do

- compare retention curves for trial converts vs direct-paid

- audit your activation definition (is it too shallow?)

- set expectations in-product before the paywall

This is where retention metrics become the truth serum: review Retention, GRR (Gross Revenue Retention), and NRR (Net Revenue Retention) for trial cohorts.

Pattern 3: Trials consume support capacity

This is common when the product requires setup help or data migration.

What to do

- separate "trial" from "guided evaluation" in your process

- restrict guided help to qualified trials (size, role, intent)

- instrument "support touches per trial" and relate it to conversion and first-year value

If a trial requires heavy support, evaluate it like a sales motion: win rate, sales cycle, and payback (see Win Rate and Sales Cycle Length).

How founders use trial data

The best use of trial analytics is not reporting. It's decision-making: where to invest product and GTM effort next.

Decide what to fix with a simple diagnosis

Use this decision grid:

- Low activation + low conversion

  - fix: onboarding, TTV, setup friction, messaging mismatch

- High activation + low conversion

  - fix: pricing/packaging clarity, trust, paywall placement, procurement blockers

- Low activation + high conversion

  - rare; often indicates measurement error or sales overrides

- High activation + high conversion

  - scale acquisition cautiously, then focus on retention and expansion
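The grid can be expressed as a small function. The cut-offs for "high" activation and conversion below are placeholder assumptions, not benchmarks; set them from your own motion and historical cohorts.

```python
def diagnose(activation_rate, conversion_rate,
             activation_floor=0.40, conversion_floor=0.10):
    """Map a cohort's activation and conversion rates to a quadrant of the grid.

    The default floors are illustrative assumptions only.
    """
    high_activation = activation_rate >= activation_floor
    high_conversion = conversion_rate >= conversion_floor

    if not high_activation and not high_conversion:
        return "fix onboarding, TTV, setup friction, messaging mismatch"
    if high_activation and not high_conversion:
        return "fix pricing/packaging clarity, trust, paywall placement, procurement blockers"
    if not high_activation and high_conversion:
        return "check for measurement error or sales overrides"
    return "scale acquisition cautiously, then focus on retention and expansion"


print(diagnose(activation_rate=0.55, conversion_rate=0.04))
# fix pricing/packaging clarity, trust, paywall placement, procurement blockers
```

Running it per segment (channel, persona, sales-assisted versus self-serve) is usually more informative than running it on the blended funnel.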

To connect trial performance to durable growth, follow conversion with:

- ARPA (Average Revenue Per Account) of trial converts

- early Customer Churn Rate and MRR Churn Rate

- expansion signals (see Expansion MRR)

Run better experiments (and avoid false wins)

Common trial experiments:

- change trial length

- require credit card

- change activation onboarding steps

- gate or ungate key feature

- add a "success milestone" checklist

- add sales-assisted outreach for a specific segment

Rules to keep experiments honest:

- Cohort by trial start date, not conversion date.

- Track both conversion and 90-day retention (at least).

- Watch ARPA and plan mix; conversion can rise by pushing low tiers.

- Keep acquisition mix stable or segment by channel.
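A sketch of how these rules might be applied when comparing two experiment arms: a conversion lift is flagged as a possible false win if 90-day retention or ARPA moved the wrong way. The `VariantResult` structure and all numbers are hypothetical, and the comparison ignores statistical significance and sample size.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    """Outcome of one experiment arm, cohorted by trial start date."""
    trial_starts: int
    converted: int       # paid within the attribution window
    retained_90d: int    # converts still paying 90 days after converting
    new_mrr: float       # MRR from those converts

    @property
    def conversion_rate(self):
        return self.converted / self.trial_starts if self.trial_starts else 0.0

    @property
    def retention_90d(self):
        return self.retained_90d / self.converted if self.converted else 0.0

    @property
    def arpa(self):
        return self.new_mrr / self.converted if self.converted else 0.0


def false_win_warnings(control, variant):
    """List reasons a conversion lift may not be a real improvement."""
    warnings = []
    if variant.conversion_rate > control.conversion_rate:
        if variant.retention_90d < control.retention_90d:
            warnings.append("conversion up, 90-day retention down")
        if variant.arpa < control.arpa:
            warnings.append("conversion up, ARPA down (check plan mix)")
    return warnings


control = VariantResult(trial_starts=1000, converted=80, retained_90d=68, new_mrr=4000.0)
variant = VariantResult(trial_starts=1000, converted=110, retained_90d=77, new_mrr=4400.0)
print(false_win_warnings(control, variant))
```

Here the variant lifts conversion from 8% to 11%, but 90-day retention falls from 85% to 70% and ARPA from $50 to $40, so both warnings fire.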

Tie trials back to capital efficiency

Trials are an acquisition strategy, so they ultimately need to support efficient growth. Relate trial cohorts to:

- CAC (Customer Acquisition Cost)

- LTV (Customer Lifetime Value)

- LTV:CAC Ratio

- Burn Multiple if you're scaling spend

If trial improvements increase conversion but reduce LTV (because customers churn earlier), your LTV:CAC can actually get worse. That's why "trial conversion rate" is never a standalone KPI.
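A worked example of that effect, using the common simplification LTV = ARPA × gross margin ÷ monthly churn, and CAC = acquisition spend ÷ customers won. Every input below is invented for illustration.

```python
def ltv(monthly_arpa, gross_margin, monthly_churn):
    """Simplified lifetime value: monthly gross profit divided by monthly churn."""
    return monthly_arpa * gross_margin / monthly_churn


def cac(spend, trial_starts, conversion_rate):
    """Acquisition spend divided by the number of customers won."""
    return spend / (trial_starts * conversion_rate)


# Before: 8% conversion, 4% monthly churn.
ratio_before = ltv(50, 0.8, 0.04) / cac(10_000, 500, 0.08)

# After "optimizing" the trial: 10% conversion, but converts churn at 6% per month.
ratio_after = ltv(50, 0.8, 0.06) / cac(10_000, 500, 0.10)

print(round(ratio_before, 2), round(ratio_after, 2))  # about 4.0 vs about 3.33
```

CAC improved from $250 to $200, yet LTV fell from roughly $1,000 to roughly $667, so the ratio dropped even though conversion went up.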

Practical setup checklist

Before you "optimize" your free trial, ensure your measurement is trustworthy:

- Define trial start (account created? workspace created? email verified?)

- Define activation (behavioral, predictive, achievable)

- Define conversion (first payment captured, contract signed, invoice paid)

- Pick an attribution window (e.g., 30 days from trial start)

- Cohort and segment by:

  - acquisition channel

  - persona / company size

  - sales-assisted vs self-serve

  - plan chosen at conversion

- Compare downstream quality: retention, churn, ARPA, expansion

If you do this well, "free trial" stops being a feature of your pricing page and becomes what it should be: a repeatable system for turning product value into durable recurring revenue.

Frequently asked questions

What is a good free trial conversion rate?

It depends on motion and qualification. For self-serve PLG, many teams see 5 to 15 percent of trial starts convert to paid, with higher rates when activation is strong. For sales-assisted trials, conversion can be 20 to 50 percent because qualification happens earlier.

Should a free trial require a credit card?

Requiring a credit card usually reduces trial starts but increases buyer intent and can raise trial-to-paid conversion. It is helpful when your ICP is clear and onboarding is quick. Avoid it when you are still exploring positioning or when your product needs proof before commitment.

How long should a free trial be?

Set trial length to cover time-to-value plus a buffer. If users can reach value in one session, 7 to 14 days is often enough. If setup takes coordination, 14 to 30 days is common. Longer trials are not automatically better; they can delay decisions and reduce urgency.

How can you tell if a trial is attracting the wrong customers?

Compare retention and expansion for customers who came from trials versus customers who bought without a trial. If trial converts have meaningfully worse early retention, your trial may over-promise, attract low-intent users, or allow people to get value without paying. Tighten activation and qualification.

How should trial performance be measured?

Cohort by trial start date, then measure conversion within a fixed window (for example 30 days) and track downstream metrics like ARPA and retention over 90 to 180 days. This prevents end-of-month noise and shows whether trial improvements create durable revenue, not just faster upgrades.